Users interact with services in real time. They log into websites, like and share posts, buy items and even converse, all in real time. So why is it that whenever you have a problem using a service and you reach a customer support representative, they never seem to know who you are or what you've been doing recently?

This is likely because they haven't built a customer 360 profile, and if they have, it certainly isn't real-time. Here, real-time means within the last couple of minutes, if not seconds. Knowing everything the customer did just before contacting customer support gives the team the best chance to understand and solve the customer's problem.

This is why a real-time customer 360 profile is more useful than a batch profile generated by a data warehouse: I want to keep latency low, which isn't feasible with a traditional data warehouse. In this post, I'll walk through what a customer 360 profile is and how to build one that updates in real time.

What Is a Customer 360 Profile?

The purpose of a customer 360 profile is to provide a holistic view of a customer. The goal is to take data from all the disparate services the customer interacts with in relation to a product or service. This data is brought together, aggregated and then typically displayed in a dashboard for the customer support team.

When a customer calls the support team or uses online chat, the team can quickly understand who the customer is and what they've done recently. This removes the need for tedious, scripted questions, so the team can get straight to solving the problem.

With this data all in one place, it can then be used downstream for predictive analytics and real-time segmentation. These in turn can provide more timely and relevant marketing and front-end personalisation, improving the customer experience.

Use Case: Fashion Retail Store

To help demonstrate the power of a customer 360, I'll be using a national fashion retail brand as an example. This brand has multiple stores across the country and a website that lets customers order items for delivery or store pickup.

The brand has a customer support centre that deals with customer enquiries about orders, deliveries, returns and fraud. What they need is a customer 360 dashboard. Then, when a customer contacts them with an issue, they can see the latest customer details and activity in real time.

The available data sources include:

- customers (MongoDB): Core customer data such as name, age, gender and address.

- online_orders (MongoDB): Online purchase data, including product details and delivery addresses.

- instore_orders (MongoDB): In-store purchase data, again including product details and store location.

- marketing_emails (Kafka): Marketing data, including items sent and any interactions.

- weblogs (Kafka): Website analytics such as logins, browsing, purchases and any errors.

In the rest of the post, I'll show you how to use these data sources to consolidate all of this data into one place in real time and surface it in a dashboard.
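To make "consolidate into one place" concrete, here is a minimal in-memory Python sketch of the idea. Records from each source are grouped under the customer they belong to, keyed on `user_id`. In the rest of the post this work happens in Rockset through SQL. The sample records and the `build_profiles` helper are hypothetical and purely illustrative.

```python
from typing import Any

# Hypothetical sample records standing in for the sources above. Only the
# fields used for joining (id / user_id) matter here; the rest is illustrative.
customers = [{"id": "u1", "first_name": "Ada"}, {"id": "u2", "first_name": "Grace"}]
online_orders = [{"user_id": "u1", "_id": "o1"}]
weblogs = [
    {"user_id": "u1", "page": "login_success.html"},
    {"user_id": "u9", "page": "home.html"},  # matches no known customer
]


def build_profiles(
    customers: list[dict[str, Any]], **sources: list[dict[str, Any]]
) -> dict[str, dict[str, Any]]:
    """Group every source's records under the customer (by id) they belong to."""
    profiles = {
        c["id"]: {"details": c, **{name: [] for name in sources}} for c in customers
    }
    for name, records in sources.items():
        for record in records:
            profile = profiles.get(record["user_id"])
            if profile is not None:  # drop events that match no known customer
                profile[name].append(record)
    return profiles


profiles = build_profiles(customers, online_orders=online_orders, weblogs=weblogs)
print(profiles["u1"]["details"]["first_name"], len(profiles["u1"]["weblogs"]))
```

In this sketch, events for unknown users are simply dropped. A real pipeline might keep them for later reconciliation instead.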

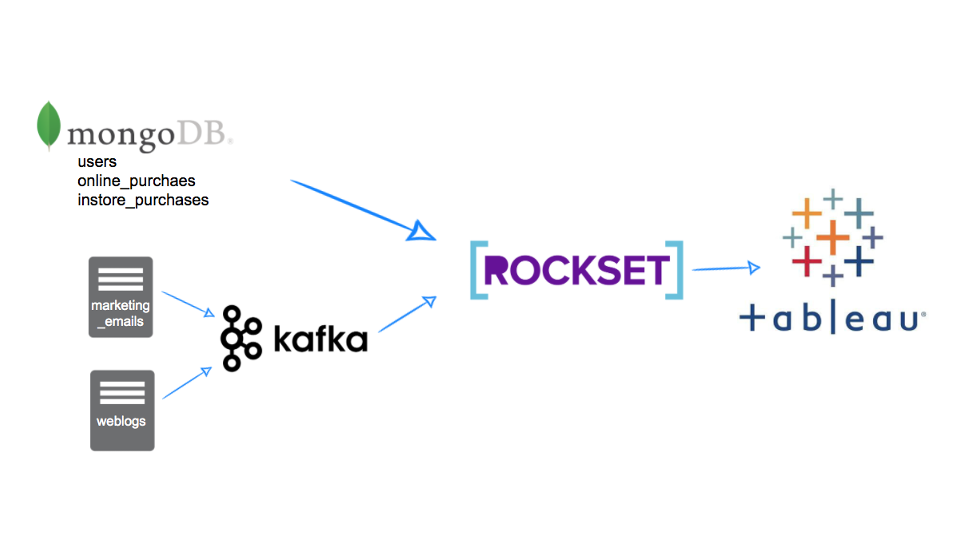

Platform Architecture

The first step in building a customer 360 is consolidating the different data sources into one place. Because of our fashion brand's real-time requirements, we need a way to keep the data sources in sync in real time. We also need fast retrieval and analytics on this data so it can be presented back in a dashboard.

For this, we'll use Rockset. The customer and purchase data is currently stored in a MongoDB database, which can be replicated into Rockset using a built-in connector. For the weblogs and marketing data I'll use Kafka, because Rockset can consume these messages as they're generated.

This leaves us with the platform architecture shown in Fig 1. With data flowing through to Rockset in real time, we can display the customer 360 dashboards using Tableau. This approach lets us see customer interactions from the last few minutes, or even seconds, on our dashboard. A traditional data warehouse approach would significantly increase this latency because of the batch pipelines that ingest the data. Rockset can keep data latency in the 1-2 second range when connected to an OLTP database or to data streams.

Fig 1. Platform architecture diagram

I've written posts on integrating Kafka topics into Rockset and on using the MongoDB connector, which go into more detail on how to set these integrations up. In this post, I'll concentrate on the analytical queries and building the dashboard, and assume this data is already being synced into Rockset.

Building the Dashboard with Tableau

The first thing we need to do is get Tableau talking to Rockset by following the Rockset documentation. This is fairly straightforward. You download a JDBC connector and put it in the correct folder, then generate an API key in your Rockset console, which Tableau uses to connect.

Once that's done, we can build the SQL statements that provide all the data we need for our dashboard. I recommend building these in the Rockset console and moving them over to Tableau later. This gives us better control over the statements submitted to Rockset, and therefore better performance.

First, let's break down what we want our dashboard to show:

- Basic customer details, including first name and last name

- Purchase stats, including the number of online and in-store purchases, the most popular items bought and the total amount spent

- Recent activity stats, including last purchase dates, last login, last website visit and last email interaction

- Details about the most recent errors while browsing online

Now we can write the SQL that brings all of these properties together.

1. Basic Customer Details

This one is simple: a straightforward SELECT from the customers collection (replicated from MongoDB).

```sql
SELECT customers.id AS customer_id,
       customers.first_name,
       customers.last_name,
       customers.gender,
       DATE_DIFF('year', CAST(customers.dob AS date), CURRENT_DATE()) AS age
FROM fashion.customers
```
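The age column relies on `DATE_DIFF('year', ...)`. Whether that counts completed years or calendar-year boundaries depends on the engine, so check Rockset's documentation. As a reference point, here is a small Python sketch that computes age in completed years. You could use it to spot-check a few rows. The `age_in_years` helper is hypothetical and not part of the dashboard.

```python
from datetime import date


def age_in_years(dob: date, today: date) -> int:
    """Completed years since dob: one less if this year's birthday hasn't arrived."""
    had_birthday = (today.month, today.day) >= (dob.month, dob.day)
    return today.year - dob.year - (0 if had_birthday else 1)


print(age_in_years(date(1990, 6, 15), date(2020, 6, 14)))  # 29
print(age_in_years(date(1990, 6, 15), date(2020, 6, 15)))  # 30
```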

2. Purchase Statistics

First, we want all the online_orders statistics. Again, this data has been replicated by Rockset's MongoDB integration. We simply count the number of orders and the number of items, then divide one by the other to get an idea of items per order.

```sql
SELECT *
FROM (SELECT 'Online' AS "type",
             online.user_id AS customer_id,
             COUNT(DISTINCT online._id) AS number_of_orders,
             COUNT(*) AS number_of_items_purchased,
             COUNT(*) / COUNT(DISTINCT online._id) AS items_per_order
      FROM fashion.online_orders online,
           UNNEST(online."items")
      GROUP BY 1, 2) online_stats
UNION ALL
(SELECT 'Instore' AS "type",
        instore.user_id AS customer_id,
        COUNT(DISTINCT instore._id) AS number_of_orders,
        COUNT(*) AS number_of_items_purchased,
        COUNT(*) / COUNT(DISTINCT instore._id) AS items_per_order
 FROM fashion.instore_orders instore,
      UNNEST(instore."items")
 GROUP BY 1, 2)
```

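The second half repeats the same aggregation for instore_orders, and UNION ALL stacks the two result sets. The "type" column tells the channels apart, so both halves share the same column names.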
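To see what this query returns without a Rockset workspace, here is a minimal sketch using Python's built-in `sqlite3` module. SQLite has no UNNEST, so the sketch assumes each order's items array has already been flattened into one row per item. The `online_items` and `instore_items` tables and their rows are hypothetical.

```python
import sqlite3

# Hypothetical flattened stand-ins for the MongoDB collections: one row per item.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE online_items (order_id TEXT, user_id TEXT, product_name TEXT);
    CREATE TABLE instore_items (order_id TEXT, user_id TEXT, product_name TEXT);
    INSERT INTO online_items VALUES
        ('o1', 'u1', 'jeans'), ('o1', 'u1', 'belt'), ('o2', 'u1', 'shirt');
    INSERT INTO instore_items VALUES ('s1', 'u1', 'jacket');
    """
)

# One row per (channel, customer). Multiplying by 1.0 stops SQLite's integer
# division from truncating items_per_order (3 / 2 would otherwise give 1).
query = """
SELECT 'Online' AS type, user_id AS customer_id,
       COUNT(DISTINCT order_id) AS number_of_orders,
       COUNT(*) AS number_of_items_purchased,
       1.0 * COUNT(*) / COUNT(DISTINCT order_id) AS items_per_order
FROM online_items GROUP BY user_id
UNION ALL
SELECT 'Instore', user_id, COUNT(DISTINCT order_id), COUNT(*),
       1.0 * COUNT(*) / COUNT(DISTINCT order_id)
FROM instore_items GROUP BY user_id
ORDER BY 1
"""
rows = conn.execute(query).fetchall()
for row in rows:
    print(row)
```

Whether the original query's division truncates in the same way depends on how Rockset types integer division, so it is worth checking `items_per_order` on a known customer.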

3. Most Popular Items

Next, we want to find the most popular items purchased by each user. This query simply counts products per user. To do this we need to unnest the items, which gives us one row per order item, ready for counting.

```sql
SELECT online_orders.user_id AS "Customer ID",
       UPPER(basket.product_name) AS "Product Name",
       COUNT(*) AS "Purchases"
FROM fashion.online_orders,
     UNNEST(online_orders."items") AS basket
GROUP BY 1, 2
```
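The unnest-then-count pattern can be mirrored in plain Python on nested documents, which helps when checking the query against a handful of raw orders. The documents below are hypothetical and carry only the fields the query uses.

```python
from collections import Counter

# Hypothetical online_orders documents: each order embeds an "items" array,
# mirroring the nested structure the query UNNESTs.
online_orders = [
    {"_id": "o1", "user_id": "u1", "items": [{"product_name": "Jeans"}, {"product_name": "belt"}]},
    {"_id": "o2", "user_id": "u1", "items": [{"product_name": "jeans"}]},
    {"_id": "o3", "user_id": "u2", "items": [{"product_name": "Shirt"}]},
]

# Iterating over each items array gives one entry per order item (what UNNEST
# does); counting (user_id, UPPER(product_name)) pairs mirrors GROUP BY 1, 2.
purchases = Counter(
    (order["user_id"], item["product_name"].upper())
    for order in online_orders
    for item in order["items"]
)
print(purchases.most_common(1))  # [(('u1', 'JEANS'), 2)]
```

Upper-casing before counting is what makes "Jeans" and "jeans" land in the same bucket, just as `UPPER` does in the SQL.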

4. Recent Activity

For this, we'll use every event source and get the last time the user did anything on the platform. That means the instore_orders and online_orders data sources from MongoDB, alongside the weblogs and marketing_emails data streamed in from Kafka. The query is slightly longer because it takes the max date for each event type and unions the results together, but once the data is in Rockset, combining these data sets is trivial.

```sql
SELECT event,
       user_id AS customer_id,
       "date"
FROM (SELECT 'Instore Order' AS event,
             user_id,
             CAST(MAX("date") AS datetime) AS "date"
      FROM fashion.instore_orders
      GROUP BY 1, 2) x
UNION
(SELECT 'Online Order' AS event,
        user_id,
        CAST(MAX("date") AS datetime) AS last_online_purchase_date
 FROM fashion.online_orders
 GROUP BY 1, 2)
UNION
(SELECT 'Email Sent' AS event,
        user_id,
        CAST(MAX("date") AS datetime) AS last_email_date
 FROM fashion.marketing_emails
 GROUP BY 1, 2)
UNION
(SELECT 'Email Opened' AS event,
        user_id,
        CAST(MAX(CASE WHEN email_opened THEN "date" ELSE NULL END) AS datetime) AS last_email_opened_date
 FROM fashion.marketing_emails
 GROUP BY 1, 2)
UNION
(SELECT 'Email Clicked' AS event,
        user_id,
        CAST(MAX(CASE WHEN email_clicked THEN "date" ELSE NULL END) AS datetime) AS last_email_clicked_date
 FROM fashion.marketing_emails
 GROUP BY 1, 2)
UNION
(SELECT 'Website Visit' AS event,
        user_id,
        CAST(MAX("date") AS datetime) AS last_website_visit_date
 FROM fashion.weblogs
 GROUP BY 1, 2)
UNION
(SELECT 'Website Login' AS event,
        user_id,
        -- single quotes: a string literal, not a field name
        CAST(MAX(CASE WHEN weblogs.page = 'login_success.html' THEN "date" ELSE NULL END) AS datetime) AS last_website_login_date
 FROM fashion.weblogs
 GROUP BY 1, 2)
```
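Stripped of the per-source details, the query keeps the latest timestamp for each (event, user) pair. Here is a minimal Python sketch of that reduction on hypothetical event rows that already carry the event names used in the query.

```python
from datetime import datetime

# Hypothetical event rows: (event name, user_id, timestamp).
events = [
    ("Online Order", "u1", datetime(2021, 3, 1, 10, 0)),
    ("Online Order", "u1", datetime(2021, 3, 4, 18, 5)),
    ("Website Login", "u1", datetime(2021, 3, 5, 8, 30)),
    ("Email Opened", "u2", datetime(2021, 2, 28, 12, 0)),
]


def latest_activity(rows):
    """Most recent timestamp per (event, user): MAX(date) ... GROUP BY 1, 2."""
    latest: dict[tuple[str, str], datetime] = {}
    for event, user_id, when in rows:
        key = (event, user_id)
        if key not in latest or when > latest[key]:
            latest[key] = when
    return latest


# Sorting newest first gives an activity timeline like the one on the dashboard.
timeline = sorted(latest_activity(events).items(), key=lambda kv: kv[1], reverse=True)
for (event, user_id), when in timeline:
    print(user_id, event, when.isoformat())
```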

5. Recent Errors

Another simple query gets the page the user was on, the error message and the last time it occurred, using the weblogs dataset from Kafka.

```sql
SELECT customers.id AS "Customer ID",
       weblogs.error_message AS "Error Message",
       weblogs.page AS "Page Name",
       MAX(weblogs.date) AS "Date"
FROM fashion.customers
LEFT JOIN fashion.weblogs ON weblogs.user_id = customers.id
WHERE weblogs.error_message IS NOT NULL
GROUP BY 1, 2, 3
```
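One subtlety is worth knowing. The WHERE clause filters on `weblogs.error_message`, which discards the rows the LEFT JOIN padded with NULLs. As a result, customers with no errors drop out and the query behaves like an inner join, which suits this panel. A small `sqlite3` sketch with hypothetical rows shows the effect.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (id TEXT);
    CREATE TABLE weblogs (user_id TEXT, page TEXT, error_message TEXT, date TEXT);
    INSERT INTO customers VALUES ('u1'), ('u2');
    INSERT INTO weblogs VALUES
        ('u1', 'checkout.html', 'Payment declined', '2021-03-01T10:00:00'),
        ('u1', 'checkout.html', 'Payment declined', '2021-03-04T18:05:00'),
        ('u1', 'home.html', NULL, '2021-03-05T08:30:00'),
        ('u2', 'home.html', NULL, '2021-03-02T09:00:00');
    """
)

# Same shape as the query above; ISO-8601 strings sort chronologically, so
# MAX(date) picks the latest occurrence of each (customer, error, page).
rows = conn.execute(
    """
    SELECT customers.id, weblogs.error_message, weblogs.page, MAX(weblogs.date)
    FROM customers
    LEFT JOIN weblogs ON weblogs.user_id = customers.id
    WHERE weblogs.error_message IS NOT NULL
    GROUP BY 1, 2, 3
    """
).fetchall()
print(rows)  # u2 never hit an error, so it does not appear
```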

Creating a Dashboard

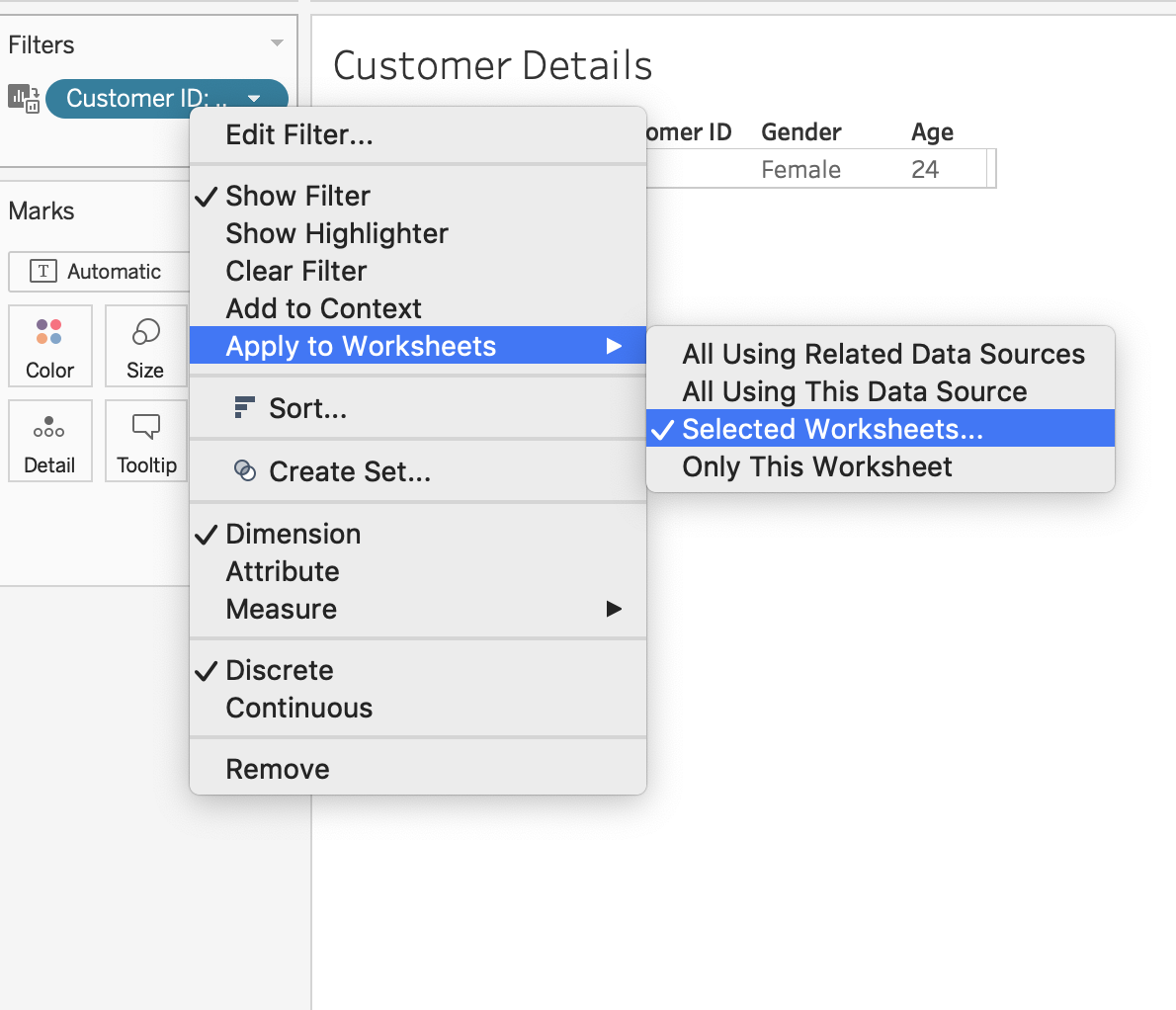

Now we want to pull all of these SQL queries into a Tableau workbook. I find it best to create a data source and worksheet per section, then create a dashboard to tie them all together.

In Tableau, I built 6 worksheets, one for each of the SQL statements above. Each worksheet displays its data simply and intuitively. The idea is that these 6 worksheets can be combined into a dashboard that lets a customer service team member search for a customer and see a 360 view.

To do this in Tableau, the filtering column needs the same name across all the sheets; I called mine “Customer ID”. You can then right-click on the filter and apply it to selected worksheets, as shown in Fig 2.

Fig 2. Applying a filter to multiple worksheets in Tableau

This brings up a list of all the worksheets Tableau can apply the same filter to. This helps when building the dashboard, because we only need to include one search filter, and it is then applied to all of the worksheets. The field must have the same name in every worksheet for this to work.

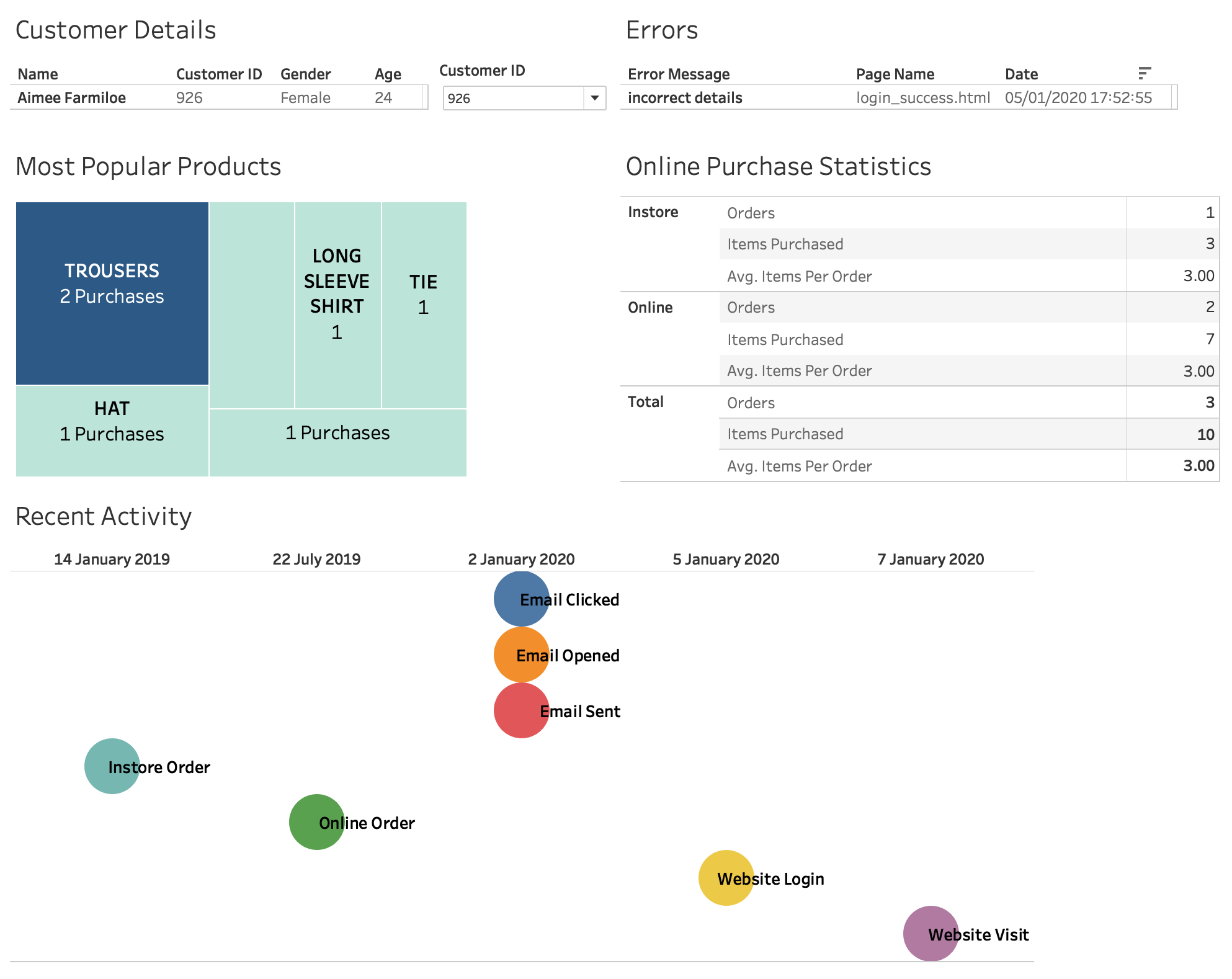

Fig 3 shows all of the worksheets put together in a simple dashboard. All of the data in this dashboard is backed by Rockset and therefore benefits from everything Rockset offers. This is why it's important to use the SQL statements directly in Tableau rather than creating internal Tableau data sources. That way, Rockset performs the complex analytics, so the data can be crunched efficiently. It also means that any new data synced into Rockset becomes available in real time.

Fig 3. Tableau customer 360 dashboard

If a customer contacts support with a query, their latest activity is immediately available in this dashboard, including their latest error message, purchase history and email activity. The customer service team member can understand the customer at a glance and get straight to resolving their query, instead of asking questions they should already know the answer to.

The dashboard gives an overview of the customer's details in the top left and any recent errors in the top right. In between is the filter/search box for selecting a customer based on who is calling. The next section gives an at-a-glance view of the most popular products the customer has purchased and their lifetime purchase statistics. The final section shows an activity timeline of the latest interactions with the service across email, in-store and online channels.

Further Potential

Building a customer 360 profile doesn't have to stop at dashboards. Now that data is flowing into a single analytics platform, the same data can improve the customer's front-end experience, provide cohesive messaging across web, mobile and marketing, and feed predictive modelling.

Rockset's built-in API means this data can be made accessible to the front end. The website can then use these profiles to personalise its content. For example, it can display a customer's favourite products front and centre. This requires less effort from the customer, because what they came to your website for is now likely to be right there on the first page.
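As a rough illustration of the front-end side, assume the website has already fetched the rows of the Most Popular Items query (Customer ID, Product Name, Purchases) through Rockset's API. That fetching step isn't shown here. The hypothetical `favourite_products` helper below picks the products to feature for one customer.

```python
def favourite_products(rows, customer_id, n=3):
    """Top-n product names for one customer, most purchases first.

    rows: (customer_id, product_name, purchases) tuples, shaped like the
    output of the Most Popular Items query (an assumption for this sketch).
    """
    mine = [(product, count) for user, product, count in rows if user == customer_id]
    mine.sort(key=lambda pc: (-pc[1], pc[0]))  # ties broken alphabetically
    return [product for product, _ in mine[:n]]


rows = [("u1", "JEANS", 2), ("u1", "BELT", 1), ("u1", "SHIRT", 1), ("u2", "JACKET", 4)]
print(favourite_products(rows, "u1", n=2))  # ['JEANS', 'BELT']
```

Breaking ties alphabetically keeps the featured list stable between page loads when several products have the same count.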

The marketing system can use this data to personalise emails in the same way. The customer visits the website and sees recommended products, then sees the same products in an email a few days later. This not only personalises their experience but also keeps it cohesive across all channels.

Finally, this data can be extremely powerful for predictive analytics. Understanding the behaviour of all users across every area of a business means you can find patterns and use them to anticipate likely future behaviour. You're then no longer just reacting to past actions, such as showing previously purchased items on the home page, and can instead show anticipated future purchases.

Lewis Gavin has been a data engineer for five years and has also been blogging about skills within the Data community for four years on a personal blog and Medium. During his computer science degree, he worked for the Airbus Helicopter team in Munich enhancing simulator software for military helicopters. He then went on to work for Capgemini, where he helped the UK government move into the world of Big Data. He is currently using this experience to help transform the data landscape at easyfundraising.org.uk, an online charity cashback site, where he is helping to shape their data warehousing and reporting capability from the ground up.