Large language models (LLMs) have set the corporate world ablaze, and everyone wants to take advantage. In fact, 47% of enterprises expect to increase their AI budgets this year by more than 25%, according to a recent survey of technology leaders from Databricks and MIT Technology Review.

Despite this momentum, many companies are still unsure exactly how LLMs, AI, and machine learning can be used within their own organization. Privacy and security concerns compound this uncertainty, as a breach or hack could result in significant financial or reputational fallout and put the organization in the watchful eye of regulators.

However, the rewards of embracing AI innovation far outweigh the risks. With the right tools and guidance, organizations can quickly build and scale AI models in a private and compliant manner. Given the influence of generative AI on the future of many enterprises, bringing model building and customization in-house becomes a critical capability.

GenAI can’t exist without data governance in the enterprise

Responsible AI requires good data governance. Data has to be securely stored, a task that grows harder as cyber villains get more sophisticated in their attacks. It must also be used in accordance with applicable regulations, which are increasingly unique to each region, country, or even locality. The situation gets complicated fast. Per the Databricks-MIT survey cited above, the vast majority of large businesses are running 10 or more data and AI systems, while 28% have more than 20.

Compounding the problem is what enterprises want to do with their data: model training, predictive analytics, automation, and business intelligence, among other applications. They want to make results accessible to every employee in the organization (with guardrails, of course). Naturally, speed is paramount, so that the most accurate insights can be accessed as quickly as possible.

Depending on the size of the organization, distributing all that information internally in a compliant manner may become a heavy burden. Which employees are allowed to access what data? Complicating matters further, data access policies are constantly shifting as employees leave, acquisitions happen, or new regulations take effect.
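To make the "who may access what" question concrete, here is a minimal sketch of a role-based access check. The `AccessPolicy` class, the role names, and the dataset names are all hypothetical and exist only for illustration; a real deployment would rely on the governance features of its data platform rather than an in-memory dictionary.

```python
from dataclasses import dataclass, field


@dataclass
class AccessPolicy:
    """Hypothetical in-memory policy: maps a role to the datasets it may read."""

    grants: dict[str, set[str]] = field(default_factory=dict)

    def grant(self, role: str, dataset: str) -> None:
        # Allow every holder of `role` to read `dataset`.
        self.grants.setdefault(role, set()).add(dataset)

    def revoke_role(self, role: str) -> None:
        # Policies shift over time, e.g. when a team is dissolved.
        self.grants.pop(role, None)

    def can_read(self, role: str, dataset: str) -> bool:
        return dataset in self.grants.get(role, set())


policy = AccessPolicy()
policy.grant("analyst", "sales_dashboard")
policy.grant("hr", "employee_records")

print(policy.can_read("analyst", "sales_dashboard"))   # True
print(policy.can_read("analyst", "employee_records"))  # False

# A policy change takes effect immediately for every later check.
policy.revoke_role("analyst")
print(policy.can_read("analyst", "sales_dashboard"))   # False
```

Keeping the rules in one place is the point of the sketch: when an employee leaves or a regulation changes, a single update changes the answer for every subsequent request.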

Data lineage is also important; businesses should be able to track who is using what information. Not knowing where files are located and what they are being used for could expose a company to heavy fines, and improper access could jeopardize sensitive information, exposing the business to cyberattacks.

Why custom LLMs matter

AI models are giving companies the ability to operationalize massive troves of proprietary data and use insights to run operations more smoothly, improve existing revenue streams, and pinpoint new areas of growth. We’re already seeing this in motion: in the next two years, 81% of technology leaders surveyed expect AI investments to result in at least a 25% efficiency gain, per the Databricks-MIT report.

For most businesses, making AI operational requires organizational, cultural, and technological overhauls. It may take many starts and stops to achieve a return on the time and money spent on AI, but the barriers to AI adoption will only get lower as hardware gets cheaper to provision and applications become easier to deploy. AI is already becoming more pervasive within the enterprise, and the first-mover advantage is real.

So, what’s wrong with using off-the-shelf models to get started? While these models can be useful to demonstrate the capabilities of LLMs, they’re also available to everyone. There’s little competitive differentiation. Employees might input sensitive data without fully understanding how it will be used. And because the way these models are trained often lacks transparency, their answers can be based on dated or inaccurate information or, worse, the IP of another organization. The safest way to understand the output of a model is to know what data went into it.

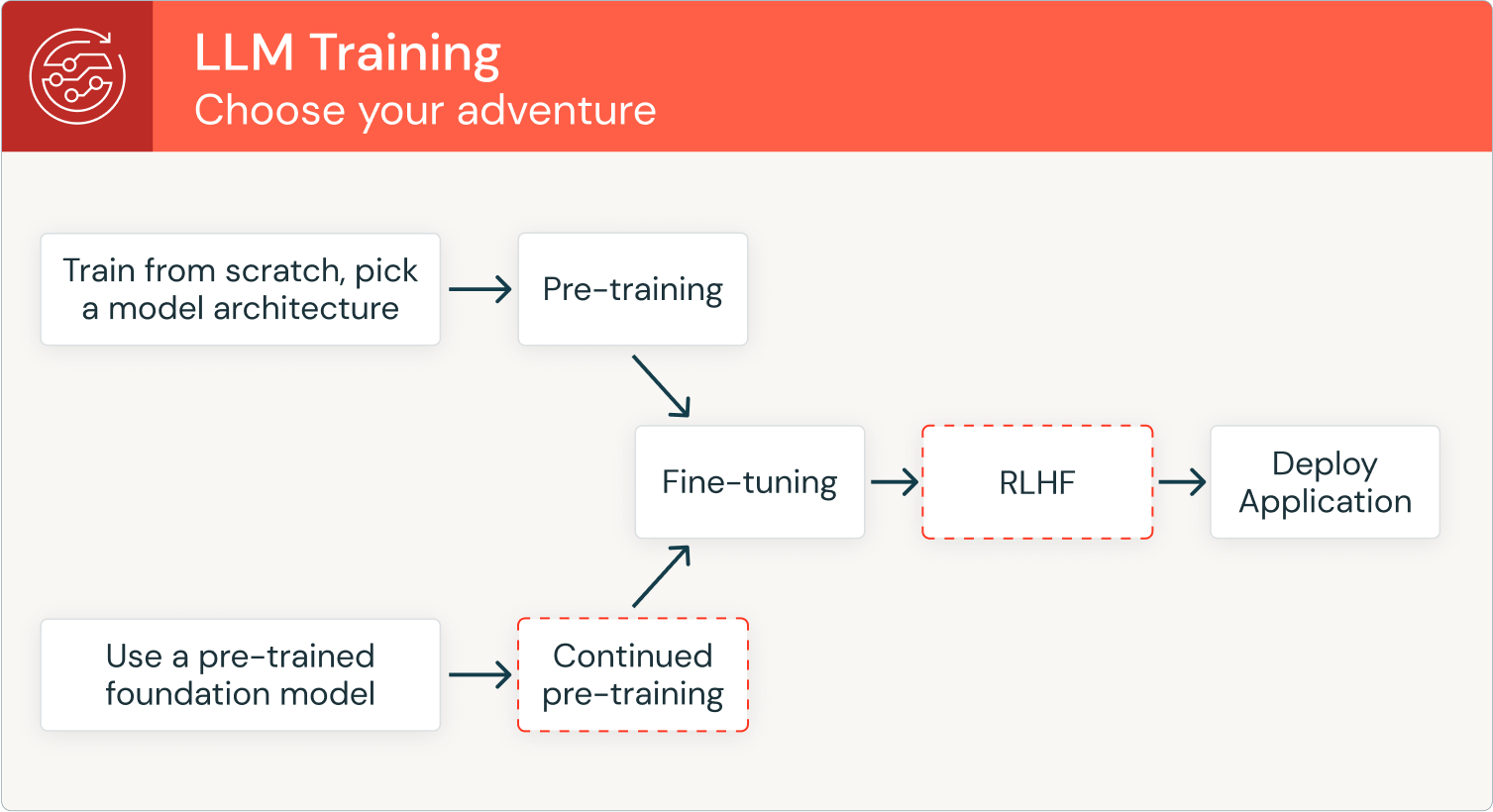

Most importantly, there’s no competitive advantage when using an off-the-shelf model; in fact, creating custom models on valuable data can be seen as a form of IP creation. AI is how a company brings its unique data to life. It’s too precious a resource to let someone else use it to train a model that’s available to all (including competitors). That’s why it’s imperative for enterprises to have the ability to customize or build their own models. It’s not necessary for every company to build its own GPT-4, however. Smaller, more domain-specific models can be just as transformative, and there are several paths to success.

LLMs and RAG: Generative AI’s jumping-off point

In an ideal world, organizations would build their own proprietary models from scratch. But with engineering talent in short supply, businesses should also think about supplementing their internal resources by customizing a commercially available AI model.

By fine-tuning best-of-breed LLMs instead of building from scratch, organizations can use their own data to enhance the model’s capabilities. Companies can further enhance a model’s capabilities by implementing retrieval-augmented generation, or RAG. As new data comes in, it is added to the store the model retrieves from, so the LLM draws on the most up-to-date and relevant information when prompted. RAG capabilities also enhance a model’s explainability. For regulated industries, like healthcare, law, or finance, it’s essential to know what data is going into the model, so that the output is understandable and trustworthy.

This approach is a great stepping stone for companies that are eager to experiment with generative AI. Using RAG to improve an open source or best-of-breed LLM can help an organization begin to understand the potential of its data and how AI can help transform the business.
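The retrieval step of RAG can be sketched in a few lines. The example below is a toy illustration under loud assumptions: it ranks documents by simple word overlap instead of the vector embeddings a production system would typically use, the documents and function names (`retrieve`, `build_prompt`) are invented for the example, and no LLM is actually called. It only shows how retrieved passages end up in the prompt.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return up to k documents sharing the most words with the query."""
    query_tokens = tokenize(query)
    scored = [(len(query_tokens & tokenize(doc)), doc) for doc in documents]
    matches = [pair for pair in scored if pair[0] > 0]
    matches.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in matches[:k]]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Place the retrieved passages ahead of the question (assumed prompt layout)."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Hypothetical internal knowledge base.
documents = [
    "Refund requests are accepted within 30 days of purchase.",
    "Support hours are 9am to 5pm on weekdays.",
    "Enterprise plans include a dedicated account manager.",
]

question = "What are the support hours?"
print(build_prompt(question, documents, k=1))

# New data is added to the store, not to the model weights.
documents.append("Support hours on holidays are 10am to 2pm.")
print(retrieve(question, documents, k=2))
```

Because the prompt lists exactly which passages were supplied, the same structure gives a simple audit trail for what data informed an answer.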

Custom AI models: level up for more customization

Building a custom AI model requires a large amount of information (as well as compute power and technical expertise). The good news: companies are flush with data from every part of their business. (In fact, many are probably unaware of just how much they actually have.)

Both structured data sets, like the ones that power corporate dashboards and other business intelligence, and internal libraries that house “unstructured” data, like video and audio files, can be instrumental in helping to train AI and ML models. If necessary, organizations can also supplement their own data with external sets.

However, businesses may overlook critical inputs that can be instrumental in helping to train AI and ML models. They also need guidance to wrangle the data sources and compute nodes needed to train a custom model. That’s where we can help. The Data Intelligence Platform is built on lakehouse architecture to eliminate silos and provide an open, unified foundation for all data and governance. The MosaicML platform was designed to abstract away the complexity of large model training and finetuning, stream in data from any location, and run in any cloud-based computing environment.

Plan for AI scale

One common mistake when building AI models is a failure to plan for mass consumption. Often, LLMs and other AI projects work well in test environments where everything is curated, but that’s not how businesses operate. The real world is far messier, and companies need to consider factors like data pipeline corruption or failure.

AI deployments require constant monitoring of data to make sure it’s protected, reliable, and accurate. Increasingly, enterprises require a detailed log of who is accessing the data (what we call data lineage).

Consolidating to a single platform means companies can more easily spot abnormalities, making life easier for overworked data security teams. This now-unified hub can serve as a “source of truth” on the movement of every file across the organization.
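As a rough illustration of what a single access log makes possible, the sketch below records who touched which dataset and then answers two questions: who has used a given dataset, and which users read unusually often. The `AccessEvent` structure, the user and dataset names, and the fixed read threshold are assumptions made for the example, not a description of any particular product.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessEvent:
    user: str
    dataset: str
    action: str  # "read" or "write"


def users_of(events: list[AccessEvent], dataset: str) -> list[str]:
    """List every user who has touched the given dataset."""
    return sorted({e.user for e in events if e.dataset == dataset})


def heavy_readers(events: list[AccessEvent], threshold: int) -> list[str]:
    """Flag users whose read count exceeds the threshold (a deliberately crude check)."""
    reads = Counter(e.user for e in events if e.action == "read")
    return sorted(user for user, count in reads.items() if count > threshold)


# Hypothetical unified log covering every system in the organization.
log = [
    AccessEvent("ana", "customer_orders", "read"),
    AccessEvent("ben", "customer_orders", "write"),
    AccessEvent("ana", "payroll", "read"),
    AccessEvent("ana", "payroll", "read"),
    AccessEvent("cy", "payroll", "read"),
]

print(users_of(log, "payroll"))         # ['ana', 'cy']
print(heavy_readers(log, threshold=2))  # ['ana']
```

With events scattered across ten or twenty separate systems, both queries would require stitching logs together first, which is the practical argument for consolidation.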

Don’t forget to evaluate AI progress

The only way to make sure AI systems are continuing to work correctly is to constantly monitor them. A “set-it-and-forget-it” mentality doesn’t work.

There are always new data sources to ingest. Problems with data pipelines can arise frequently. A model can “hallucinate” and produce bad results, which is why companies need a data platform that allows them to easily monitor model performance and accuracy.

When evaluating system success, companies also need to set realistic parameters. For example, if the goal is to streamline customer service to relieve employees, the business should track how many queries still get escalated to a human agent.
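The customer-service example reduces to one ratio: escalated queries divided by total queries. A minimal sketch, assuming hypothetical weekly counts and a helper named `escalation_rate` introduced only for this illustration:

```python
def escalation_rate(total_queries: int, escalated: int) -> float:
    """Fraction of customer queries that still reached a human agent."""
    if total_queries <= 0:
        raise ValueError("total_queries must be positive")
    if not 0 <= escalated <= total_queries:
        raise ValueError("escalated must be between 0 and total_queries")
    return escalated / total_queries


# Hypothetical weekly counts: (label, total queries, escalated queries).
weekly_counts = [
    ("week 1", 1000, 400),
    ("week 2", 1200, 300),
]

for label, total, escalated in weekly_counts:
    print(f"{label}: {escalation_rate(total, escalated):.1%} escalated")
# week 1: 40.0% escalated
# week 2: 25.0% escalated
```

Tracking the rate rather than the raw count keeps the metric comparable when query volume changes from week to week.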

To read more about how Databricks helps organizations track the progress of their AI projects, check out these pieces on MLflow and Lakehouse Monitoring.

Conclusion

By building or fine-tuning their own LLMs and GenAI models, organizations can gain the confidence that they’re relying on the most accurate and relevant information possible, for insights that deliver unique business value.

At Databricks, we believe in the power of AI on data intelligence platforms to democratize access to custom AI models with improved governance and monitoring. Now is the time for organizations to use generative AI to turn their valuable data into insights that lead to innovations. We’re here to help.

Join this webinar to learn more about how to get started with and build generative AI solutions on Databricks!