Amazon Web Services announced an AI chatbot for enterprise use, new generations of its AI training chips, expanded partnerships and more during AWS re:Invent, held from November 27 to December 1 in Las Vegas.

AWS CEO Adam Selipsky’s keynote, held on day two of the conference, focused on generative AI and on how organizations can train powerful models through cloud services.

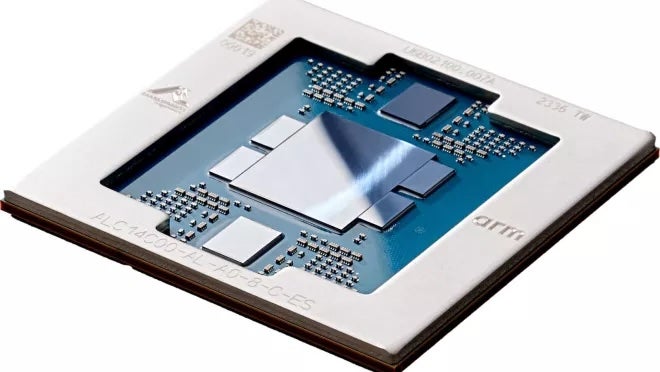

Graviton4 and Trainium2 chips announced

AWS announced new generations of its Graviton chips, which are server processors for cloud workloads, and of Trainium, which provides compute power for AI foundation model training.

Graviton4 (Figure A) has 30% better compute performance, 50% more cores and 75% more memory bandwidth than Graviton3, Selipsky said. The first instances based on Graviton4 will be the R8g Instances for EC2 for memory-intensive workloads, available through AWS.

Trainium2 is coming to Amazon EC2 Trn2 instances, and each instance will be able to scale up to 100,000 Trainium2 chips. That provides the ability to train a 300-billion parameter large language model in weeks, AWS stated in a press release.

Figure A

Anthropic will use Trainium and Amazon’s high-performance machine learning chip Inferentia for its AI models, Selipsky and Dario Amodei, chief executive officer and co-founder of Anthropic, announced. These chips may help Amazon muscle into Microsoft’s space in the AI chip market.

Amazon Bedrock: Content guardrails and other features added

Selipsky made several announcements about Amazon Bedrock, the foundation model building service, during re:Invent:

- Agents for Amazon Bedrock are generally available in preview today.

- Custom models built with bespoke fine-tuning and ongoing pretraining are open in preview for customers in the U.S. today.

- Guardrails for Amazon Bedrock are coming soon; Guardrails lets organizations conform Bedrock to their own AI content limitations using a natural language wizard.

- Knowledge Bases for Amazon Bedrock, which bridge foundation models in Amazon Bedrock to internal company data for retrieval-augmented generation, are now generally available in the U.S.

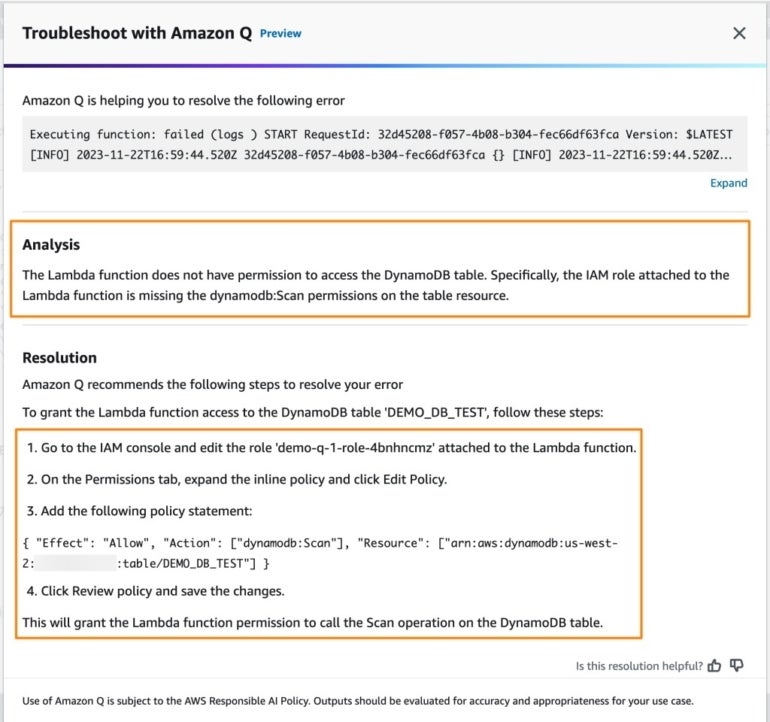

Amazon Q: Amazon enters the chatbot race

Amazon launched its own generative AI assistant, Amazon Q, designed for natural language interactions and content generation for work. It can fit into an enterprise’s existing identities, roles and security permissions.

Amazon Q can be used throughout an organization and can access a wide range of other business software. Amazon is pitching Amazon Q as business-focused and specialized for individual employees who may ask specific questions about their sales or tasks.

Amazon Q is especially suited for developers and IT pros working within AWS CodeCatalyst because it can help troubleshoot errors or network connections. Amazon Q will exist in the AWS management console and documentation within CodeWhisperer, in the serverless computing platform AWS Lambda, or in workplace communication apps like Slack (Figure B).

Figure B

Amazon Q has a feature that allows application developers to update their applications using natural language instructions. This feature is available in preview in AWS CodeCatalyst today and will soon be coming to supported integrated development environments.

SEE: Data governance is one of the many factors that need to be considered during generative AI deployment. (TechRepublic)

Many Amazon Q features within other Amazon services and products are available in preview today. For example, contact center administrators can access Amazon Q in Amazon Connect now.

Amazon S3 Express One Zone opens its doors

Amazon S3 Express One Zone, now in general availability, is a new S3 storage class purpose-built for high-performance, low-latency cloud object storage for frequently accessed data, Selipsky said. It’s designed for workloads that require single-digit millisecond latency, such as finance or machine learning. Today, customers move data from S3 to custom caching solutions; with Amazon S3 Express One Zone, they can choose their own geographical availability zone and bring their frequently accessed data next to their high-performance computing. Selipsky said Amazon S3 Express One Zone can be run with 50% lower access costs than the standard Amazon S3.

Salesforce CRM available on AWS Marketplace

On Nov. 27, AWS announced that Salesforce’s partnership with Amazon will expand to certain Salesforce CRM products accessed on AWS Marketplace. Specifically, Salesforce’s Data Cloud, Service Cloud, Sales Cloud, Industry Clouds, Tableau, MuleSoft, Platform and Heroku will be available for joint customers of Salesforce and AWS in the U.S. More products are expected to be available, and the geographical availability is expected to be expanded next year.

New options include:

- The Amazon Bedrock AI service will be available within Salesforce’s Einstein Trust Layer.

- Salesforce Data Cloud will support data sharing across AWS technologies including Amazon Simple Storage Service.

“Salesforce and AWS make it easy for developers to securely access and leverage data and generative AI technologies to drive rapid transformation for their organizations and industries,” Selipsky said in a press release.

Conversely, AWS will be using Salesforce products such as Salesforce Data Cloud more often internally.

Amazon removes ETL from extra Amazon Redshift integrations

Extract, transform and load (ETL) can be a cumbersome part of coding with transactional data. Last year, Amazon announced a zero-ETL integration between Amazon Aurora MySQL and Amazon Redshift.

Today, AWS launched more zero-ETL integrations with Amazon Redshift:

- Aurora PostgreSQL

- Amazon RDS for MySQL

- Amazon DynamoDB

All three are available globally in preview now.

The next thing Amazon wanted to do is make search in transactional data more straightforward; many people use Amazon OpenSearch Service for this. In response, Amazon announced that DynamoDB zero-ETL integration with OpenSearch Service is available today.

Plus, in an effort to make data more discoverable in Amazon DataZone, Amazon added a new capability that uses generative AI to add business descriptions to data sets.

Introducing Amazon One Enterprise authentication scanner

Amazon One Enterprise enables security management for access to physical locations in industries such as hospitality, education or technology. It’s a fully managed online service paired with the Amazon One palm scanner for biometric authentication, administered through the AWS Management Console. Amazon One Enterprise is currently available in preview in the U.S.

NVIDIA and AWS make cloud pact

NVIDIA announced a new set of GPUs available through AWS: the NVIDIA L4 GPUs, NVIDIA L40S GPUs and NVIDIA H200 GPUs. AWS will be the first cloud provider to bring the H200 chips with NVLink to the cloud. Through this link, the GPU and CPU can share memory to speed up processing, NVIDIA CEO Jensen Huang explained during Selipsky’s keynote. Amazon EC2 G6e instances featuring NVIDIA L40S GPUs and Amazon G6 instances powered by L4 GPUs will start to roll out in 2024.

In addition, the NVIDIA DGX Cloud, NVIDIA’s AI building platform, is coming to AWS. An exact date for its availability hasn’t yet been announced.

NVIDIA brought on AWS as a primary partner in Project Ceiba, NVIDIA’s 65 exaflop supercomputer including 16,384 NVIDIA GH200 Superchips.

NVIDIA NeMo Retriever

Another announcement made during re:Invent is the NVIDIA NeMo Retriever, which allows enterprise customers to provide more accurate responses from their multimodal generative AI applications using retrieval-augmented generation.

Specifically, NVIDIA NeMo Retriever is a semantic-retrieval microservice that connects custom LLMs to applications. NVIDIA NeMo Retriever’s embedding models determine the semantic relationships between words. Then, that data is fed into an LLM, which processes and analyzes the textual data. Enterprise customers can connect that LLM to their own data sources and knowledge bases.
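For readers unfamiliar with the pattern, the retrieve-then-generate flow described above can be sketched in a few lines of Python. This is only an illustration of retrieval-augmented generation in general, not NVIDIA NeMo Retriever’s API: the `DOCUMENTS` list, the `embed`, `cosine`, `retrieve` and `build_prompt` functions are all hypothetical names, and a simple word-count vector stands in for a learned embedding model.

```python
import math
from collections import Counter

# Hypothetical in-memory knowledge base; a real deployment would index company data.
DOCUMENTS = [
    "Refunds are processed within five business days.",
    "The VPN requires multi-factor authentication.",
    "Quarterly sales reports are published every January, April, July and October.",
]


def embed(text):
    """Toy embedding: a bag-of-words count vector (stand-in for a learned model)."""
    words = (w.strip(".,?!").lower() for w in text.split())
    return Counter(w for w in words if w)


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query, documents, k=1):
    """Assemble the text that would be sent to an LLM (no model is called here)."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    print(build_prompt("How long do refunds take?", DOCUMENTS))
```

In this sketch, the question about refunds shares the word “refunds” with only the first document, so that sentence is the one placed in the prompt. A production system would swap the word counts for a trained embedding model and pass the assembled prompt to an LLM.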

NVIDIA NeMo Retriever is available in early access now through the NVIDIA AI Enterprise software platform, which can be accessed through the AWS Marketplace.

Early partners working with NVIDIA on retrieval-augmented generation services include Cadence, Dropbox, SAP and ServiceNow.

Note: TechRepublic is covering AWS re:Invent virtually.