Generative AI (artificial intelligence) brings great benefits to society and business. We're seeing the rise of this technology in aviation and airlines, power and utilities, and even places like the dentist's office. Data is the new oil, and if companies can capture and capitalize on it, they have a leg up in today's competitive, labor-constrained market. However, challenges remain.

Perhaps the biggest challenge is the safety of it all. Cybersecurity is a major concern for many businesses as they leverage new, emerging technologies, but digging a bit deeper, there are other safety concerns to consider as well.

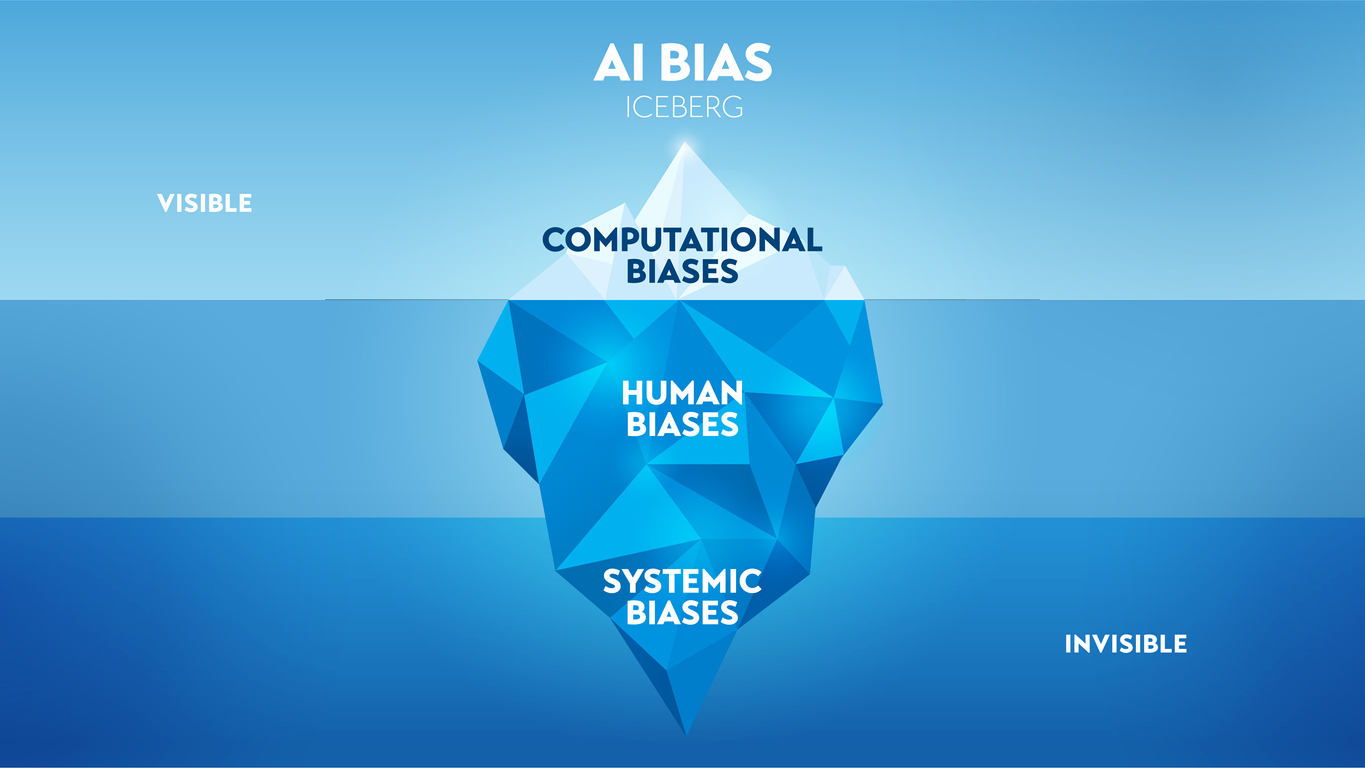

Misinformation and bias can be just as dangerous. Consider healthcare. Much of the data that exists in the healthcare industry today is based on those who could afford care in the past. This means lower-income families and developing nations are underrepresented in the data, which skews the sample.

And then there is the misinformation that generative AI can produce. As a journalist, I know how important fact-checking is on any project, because misinformation is everywhere, and I mean everywhere. Last year, USC (University of Southern California) researchers found that bias exists in up to 38.6% of the "facts" used by artificial intelligence. That's something we simply can't ignore.

Many organizations recognize these and other concerns about AI safety, and some are taking steps to address them. Consider the example of MLCommons, an AI benchmarking organization. At the end of October, it announced the creation of the AI Safety (AIS) Working Group, which will develop a platform and a pool of tests from many contributors to support AI safety benchmarks for diverse use cases.

The AIS working group's initial participants include a multidisciplinary group of AI experts from Anthropic, Coactive AI, Google, Inflection, Intel, Meta, Microsoft, NVIDIA, OpenAI, and Qualcomm Technologies, Inc., along with academics Joaquin Vanschoren from Eindhoven University of Technology, Percy Liang from Stanford University, and Bo Li from the University of Chicago. Participation in the working group is open to academic and industry researchers and engineers, as well as domain experts from civil society and the public sector.

For example, Intel plans to share AI safety findings, along with best practices and processes for responsible development such as red-teaming and safety tests. As a founding member, Intel will contribute its expertise and knowledge to help create a flexible platform for benchmarks that measure the safety and risk factors of AI tools and models.

All in all, the new platform will support defining benchmarks that select from the pool of tests and summarize the outputs into useful, understandable scores. This is similar to what is standard in other industries, such as automotive safety test ratings and Energy Star scores.

The group's most pressing initial priority will be supporting the rapid evolution of more rigorous and reliable AI safety testing technology. The AIS working group will draw on the technical and operational expertise of its members, and of the larger AI community, to help guide and create AI safety benchmarking technologies.

One of the initial focuses will be developing safety benchmarks for LLMs (large language models), building on the work done by researchers at Stanford University's Center for Research on Foundation Models and its HELM (Holistic Evaluation of Language Models).

While this is just one example, it's a step in the right direction toward making AI safer for all and addressing many of the concerns about misinformation and bias that exist across industries. As the testing matures, we will have more opportunities to use AI in a way that is safe for everyone. The future truly is bright.
