Generative artificial intelligence (AI) can seem like a magic genie. So maybe it’s no surprise that people use it like one, describing their “wishes” in natural language through text prompts. After all, what user interface could be more flexible and powerful than simply telling software what you want from it?

As it turns out, so-called “natural language” still causes serious usability problems. Renowned UX researcher Jakob Nielsen, co-founder of the Nielsen Norman Group, calls it the articulation barrier: For many users, describing their intent in writing, with enough clarity and specificity to produce useful outputs from generative AI, is simply too hard. “Most likely, half the population can’t do it,” Nielsen writes.

In this roundtable discussion, four Toptal designers explain why text prompts are so difficult, and share their solutions for solving generative AI’s “blank page” problem. These experts are at the forefront of leveraging the latest technologies to improve design. Together, they bring a range of design expertise to this discussion of the future of AI prompting. Damir Kotorić has led design projects for clients like Booking.com and the Australian government, and was the lead UX instructor at General Assembly. Darwin Álvarez currently leads UX projects for Mercado Libre, one of Latin America’s leading e-commerce platforms. Darrell Estabrook has more than 25 years of experience in digital product design for enterprise clients like IBM, CSX, and CarMax. Edward Moore has more than 20 years of UX design experience on award-winning projects for Google, Sony, and Electronic Arts.

This conversation has been edited for clarity and length.

To begin, what do you consider to be the biggest weakness of text prompting for generative AI?

Damir Kotorić: Currently, it’s a one-way street. As the prompt creator, you’re almost expected to create an immaculate conception of a prompt to achieve your desired result. This isn’t how creativity works, especially in the digital age. The big benefit of Microsoft Word over a typewriter is that you can easily edit your creation in Word. It’s ping-pong, back-and-forth. You try something, then you get some feedback from your client or colleague, then you pivot again. In this regard, the current AI tools are still primitive.

Darwin Álvarez: Text prompting isn’t flexible. Generally, I have to know exactly what I want, and it’s not a progressive process where I can iterate and develop an idea I like. I have to go in a linear direction. But when I use generative AI, I often only have a vague idea of what I want.

Edward Moore: The great thing about language prompting is that talking and typing are natural forms of expression for many of us. But one thing that makes it very challenging is that the biases you include in your writing can skew the results. For example, if you ask ChatGPT whether or not assistive robots are an effective treatment for adults with dementia, it will generate answers that assume that the answer is “yes” just because you used the word “effective” in your prompt. You may get wildly different or potentially untrue outputs based on subtle differences in how you’re using language. The requirements for being effective at using generative AI are quite steep.

Darrell Estabrook: Like Damir and Darwin said, the back-and-forth isn’t quite there with text prompts. It can also be hard to translate visual creativity into words. There’s a reason why they say a picture’s worth a thousand words. You almost need that many words to get something interesting from a generative AI tool!

Moore: Right now, the technology is heavily driven by data scientists and engineers. The rough edges need to be filed down, and the best way to do that is to democratize the tech and include UX designers in the conversation. There’s a quote from Mark Twain, “History doesn’t repeat itself, but it sure does rhyme.” And I think that’s appropriate here because suddenly, it’s like we’ve returned to the command line era.

Do you think the general public will still be using text prompts as the main way of interacting with generative AI in five years?

Moore: The interfaces for prompting AI will become more visual, in the same way that website-building tools put a GUI layer on top of raw HTML. But I think that the text prompts will always be there. You can always manually write HTML if you want to, but most people don’t have the time for it. Becoming more visual is one possible way interfaces might evolve.

Estabrook: There are different paths for this to go. Text input is limited. One possibility is to incorporate body language, which plays a huge part in communicating our intent. Wouldn’t it be an interesting use of a camera and AI recognition to consider our body language as part of a prompt? This type of tech would also be helpful in all kinds of AI-driven apps. For instance, it could be used in a medical app to assess a patient’s demeanor or mental state.

What are some additional usability limitations around text prompting, and what are specific strategies for addressing them?

Kotorić: The current generation of AI tools is a black box. The machine waits for user input, and once it has produced the output, little to no tweaking can be done. You’ve got to start all over again if you want something a little different. What needs to happen is that these magic algorithms need to be opened up. And we need levers to granularly control each stylistic aspect of the output so that we can iterate to perfection instead of being required to cast the perfect spell first.

Álvarez: As a native Spanish speaker, I’ve seen how these tools are optimized for English, and I think that has the potential to undermine trust among non-native English speakers. Ultimately, users will be more likely to trust and engage with AI tools when they can use a language they’re comfortable with. Making generative AI multilingual at scale will probably require putting AI models through extensive training and testing, and adapting their responses to cultural nuances.

Another barrier to trust is that it’s impossible to know how the AI created its output. What source material was it trained on? Why did it organize or compose the output the way it did? How did my prompt affect the result? Users need to know these things to determine whether an outcome is reliable.

AI tools should provide information about the sources used to generate a response, including links or citations to relevant documents or websites. This would help users verify the information independently. Even assigning some confidence scores to its responses would inform users about the level of certainty the tool has in its answer. If the confidence score is low, users may take the response as a starting point for further research.

Estabrook: I’ve had some lousy results with image generation. For instance, I copied the exact prompt for image examples I found online, and the results were drastically different. To overcome that, prompting needs to be a lot more reliant on a back-and-forth process. As a creative director working with other designers on a team, we always go back and forth. They produce something, then we review it: “That’s good. Strengthen that. Remove this.” You need that at an image level.

A UI strategy could be to have the tool explain some of its choices. Maybe enable it to say, “I put this blob here thinking that’s what you meant by this prompt.” And I could say, “Oh, that thing? No, I meant this other thing.” Now I’ve been able to be more descriptive because the AI and I have a common frame of reference. Whereas right now, you’re just randomly throwing out ideas and hoping to land on something.

How can design help improve the accuracy of generative AI responses to text prompts?

Álvarez: If one of the limitations of prompting is that users don’t always know what they want, we can use a heuristic called recognition rather than recall. We don’t have to force users to define or remember exactly what they want; we can give them ideas and clues that can help them get to a specific point.

We can also differentiate and customize the interaction design for someone who is clearer on what they want versus a novice user who is not very tech-savvy. This could be a more straightforward approach.

Estabrook: Another idea is to “reverse the authority.” Don’t make AI seem so authoritative in your app. It provides suggestions and possibilities, but that doesn’t mitigate the fact that one of those options could be wildly wrong.

Moore: I agree with Darrell. If companies are trying to present AI as this authoritative thing, we must remember, who are the real agents in this interaction? It’s the humans. We have the decision-making power. We decide how and when to move things forward.

My dream usability improvement is, “Hey, can I have a button next to the output to instantly flag hallucinations?” AI image generators resolved the hand problem, so I think the hallucination problem will be fixed. But we’re in this intermediate period where there’s no interface for you to say, “Hey, that’s inaccurate.”

We have to look at AI as an assistant that we can train over time, much like you would any real assistant.

What other UI solutions could complement or replace text prompting?

Álvarez: Instead of forcing users to write or give an instruction, they could answer a survey, form, or multistep questionnaire. This would help when you are in front of a blank text field and don’t know how to write AI prompts.

Moore: Yes, some solutions could provide potential options rather than making the user think about them. I mean, that’s what AI is supposed to do, right? It’s supposed to reduce cognitive load. So the tools should do that instead of demanding more cognitive load.

Kotorić: Creativity is a multiplayer game, but the current generative AI tools are single-player games. It’s just you writing a prompt. There’s no way for a team to collaborate on creating the solution directly in the AI tool. We need ways for AI and other teammates to fork ideas and explore alternative possibilities without losing work. We essentially need to Git-ify this creative process.

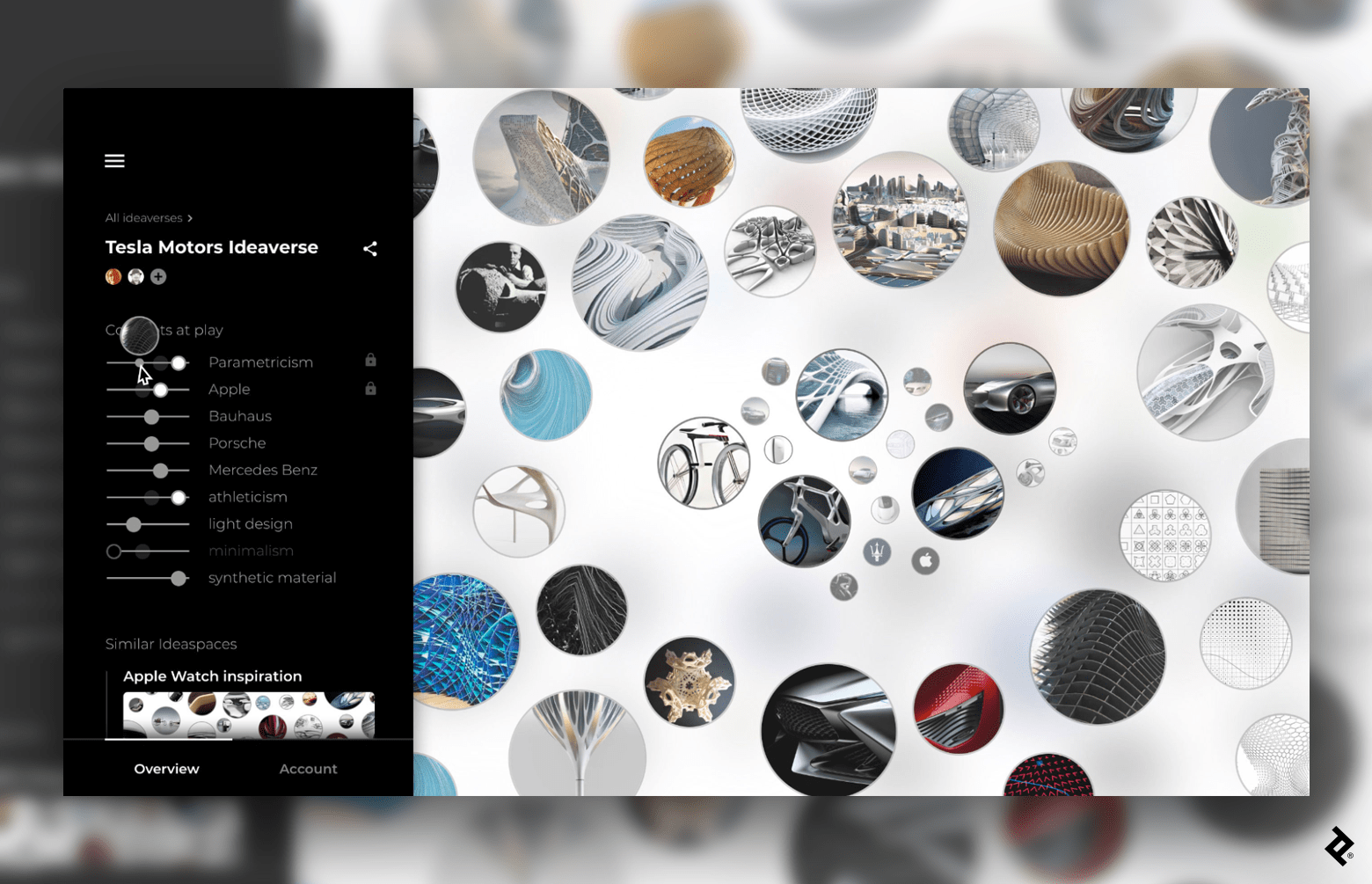

I explored such a solution with a client years ago. We came up with the concept of an “Ideaverse.” When you tweaked the creative parameters on the left sidebar, you’d see the output update to better match what you were after. You could also zoom in on a creative direction and zoom out to see a broader suite of creative options.

Midjourney allows for this kind of specificity using prompt weights, but it’s a slow process: You have to manually create a series of weights and generate the output, then tweak and generate again, tweak and generate again. It feels like restarting the creative process each time, instead of something you can quickly tweak on the fly as you’re narrowing in on your creative direction.

In my client’s Ideaverse that I mentioned, we also included a GitHub-like version control feature where you could see a “commit history” not at all dissimilar to Figma’s version history, which also allows you to see how a file has changed over time and exactly who made which changes.

Let’s talk about specific use cases. How would you improve the AI prompt-writing experience for a text-generation task such as creating a document?

Álvarez: If AI can be predictable (like in Gmail, where I see the prediction of the text I’m about to write), then that’s when I would use it because I can see the result that works for me. But I wouldn’t use a blank document template that AI fills in, because I don’t know what to expect. So if AI could be smart enough to understand what I’m writing in real time and offer me an option that I can see and use instantly, that would be helpful.

Estabrook: I’d almost like to see it displayed similarly to tracked changes and comments in a document. It’d be neat to see AI comments pop up as I write, maybe in the margin. It takes away that authority as if the AI-generated material will be the final text. It just implies, “Here are some suggestions”; this could be useful if you’re trying to craft something, not just generate something by rote.

Or there could be selectable text sections where you could say, “Give me some alternatives for additional content.” Maybe it gives me research if I want to know more about this or that subject I’m writing about.

Moore: It’d be great if you could say, “Hey, I’m going to highlight this paragraph, and now I want you to write it from the point of view of a different character.” Or “I need you to rephrase that in a way that will apply to people of different ages, education levels, backgrounds,” things like that. Just having that kind of nuance would go a long way to improving usability.

If we generate everything, the result loses its authenticity. People crave that human touch. Let’s accelerate that first 90% of the task, but we all know that the last 10% takes 90% of the effort. That’s where we can add our little touch that makes it unique. People like that: They like wordsmithing, they like writing.

Do we want to surrender that completely to AI? Again, it depends on intent and context. You probably want more creative control if you’re writing for pleasure or to tell a story. But if you’re just like, “I want to create a backlog of social media posts for the next three months, and I don’t have the time to do it,” then AI is a good option.

How could text prompting be improved for generating images, graphics, and illustrations?

Estabrook: I want to feed it visual material, not just text. Show it a bunch of examples of the brand style and other inspiration images. We do that already with color: Upload a photo and get a palette. Again, you’ve got to be able to go back and forth to get what you want. It’s like saying, “Go make me a sandwich.” “OK, what kind?” “Roast beef, and you know what extras I like.” That kind of thing.

Álvarez: I was recently involved in a project for a game agency using an AI generator for 3D objects. The challenge was creating textures for a game where it’s not economical to start from scratch each time. So the agency created a backlog, a bank of information related to all the game’s assets. And it will use this backlog (existing textures, existing models) instead of text prompts to generate consistent results for a new model or character.

Kotorić: We made an experiment called AI Design Generator, which allowed for live tweaking of a visual direction using sliders in a GUI.

This allows you to mix different creative directions and have the AI create multiple intermediate states between those two directions. Again, this is possible with the current AI text-prompting tools, but it’s a slow and mundane manual process. You need to be able to read through Midjourney docs and follow tutorials online, which is difficult for the majority of the general population. If the AI itself starts suggesting ideas, it would open new creative possibilities and democratize the process.

Moore: I think the future of this, if it doesn’t exist already, is being able to choose what’s going to get fed into the machine. So you can specify, “These are the things that I like. This is the thing that I’m trying to do.” Much like you would if you were working with an assistant, junior artist, or graphic designer. Maybe some sliders are involved; then it generates the output, and you can flag parts, saying, “OK, I like these things. Regenerate it.”

What would a better generative AI interface look like for video, where you have to control moving images over time?

Moore: Again, I think a lot of it comes down to being able to flag things (“I like this, I don’t like this”) and having the ability to preserve those preferences in the video timeline. For instance, you could click a lock icon on top of the shots you like so they don’t get regenerated in subsequent iterations. I think that would help a lot.

Estabrook: Right now, it’s like a hose: You turn it on full blast, and the end of it starts going everywhere. I used Runway to make a scene of an asteroid belt with the sun rising from behind one of the asteroids as it passes in front of the camera. I tried to describe that in a text prompt and got these very trippy blobs moving in space. So there needs to be a level of sophistication in the locking mechanism that’s as advanced as the AI to get across what you want. Like, “No, keep the asteroid here. Now move the sun a little bit to the right.”

Álvarez: Just because the tool can generate the final result doesn’t mean we need to jump straight from the idea to the final result. There are steps in the middle that AI should consider, like storyboards, that help me make decisions and progressively refine my thoughts so that I’m not surprised by an output I didn’t want. I think with video, considering those middle steps is key.

Looking toward the future, what emerging technologies could improve the AI prompting user experience?

Moore: I do a lot of work in virtual and augmented reality, and those realms deal much more with using human bodies as input mechanisms; for instance, they have eye sensors so you can use your eyeballs as an input mechanism. I also think using photogrammetry or depth-sensing to capture data about people in environments will be used to steer AI interfaces in an exciting way. An example is the “AI pin” device from a startup called Humane. It’s like the little communicators they’d tap on Star Trek: The Next Generation, except it’s an AI-powered assistant with cameras, sensors, and microphones that can project images onto nearby surfaces like your hand.

I also do a lot of work with accessibility, and we often talk about how AI will expand agency for people. Imagine if you have motor issues and don’t have the use of your hands. You’re cut off from a whole realm of digital experience because you can’t use a keyboard or mouse. Advances in speech recognition have enabled people to speak their prompts into AI art generators like Midjourney to create imagery. Putting aside the ethical considerations of how AI art generators function and how they’re trained, they still enable a new digital interaction previously unavailable to users with accessibility needs.

More forms of AI interaction will be possible for users with accessibility limitations once eye tracking (found in higher-end VR headsets like PlayStation VR2, Meta Quest Pro, and Apple Vision Pro) becomes more commonplace. This will essentially let users trigger interactions by detecting where their eyes are looking.

So these kinds of input mechanisms, enabled by cameras and sensors, will all emerge. And it’s going to be exciting.