In this blog we'll demonstrate, with examples, how you can seamlessly upgrade your Hive metastore (HMS)* tables to Unity Catalog (UC) using different methodologies, depending on the variations of HMS tables being upgraded.

*Note: Hive metastore could be your default or external metastore, or even AWS Glue Data Catalog. For simplicity, we've used the term "Hive metastore" throughout this document.

Before we dive into the details, let us look at the steps we'd take for the upgrade:

- Assess: In this step, you evaluate the existing HMS tables identified for upgrade so that you can determine the right approach for the upgrade. This step is discussed in this blog.

- Create: In this step, you create the required UC assets such as Metastore, Catalog, Schema, Storage Credentials, and External Locations. For details, refer to the documentation for AWS, Azure, and GCP.

- Upgrade: In this step, you follow the guidance to upgrade the tables from HMS to UC. This step is discussed in this blog.

- Grant: In this step, you provide grants on the newly upgraded UC tables to principals so that they can access the UC tables. For details, refer to the documentation for AWS, Azure, and GCP.
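
As a small illustration of the Grant step, the sketch below builds a `GRANT` statement for an upgraded table. It is a minimal sketch rather than a complete permissions setup: the function name, the `main` catalog, and the `data-analysts` group are hypothetical, and principals usually also need `USE CATALOG` and `USE SCHEMA` on the parent objects.

```python
def grant_statement(privileges, table, principal):
    """Build a GRANT statement for a three-level Unity Catalog table name."""
    if table.count(".") != 2:
        raise ValueError(f"expected catalog.schema.table, got: {table}")
    # Backticks allow principals containing characters such as '@' or '-'.
    return f"GRANT {', '.join(privileges)} ON TABLE {table} TO `{principal}`"


# Hypothetical catalog, table, and group names, for illustration only.
print(grant_statement(["SELECT"], "main.hmsdb_upgrade_db.people_delta", "data-analysts"))
```

In a Databricks notebook, the resulting string could be passed to `spark.sql(...)`.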

Unity Catalog, now generally available on all three cloud platforms (AWS, Azure, and GCP), simplifies security and governance of your data with the following key features:

- Define once, secure everywhere: Unity Catalog offers a single place to administer data access policies that apply across all workspaces.

- Standards-compliant security model: Unity Catalog's security model is based on standard ANSI SQL and allows administrators to grant permissions in their existing data lake using familiar syntax, at the level of catalogs, databases (also called schemas), tables, and views.

- Built-in auditing and lineage: Unity Catalog automatically captures user-level audit logs that record access to your data. Unity Catalog also captures lineage data that tracks how data assets are created and used across all languages.

- Data discovery: Unity Catalog lets you tag and document data assets, and provides a search interface to help data consumers find data.

- System tables (Public Preview): Unity Catalog lets you easily access and query your account's operational data, including audit logs, billable usage, and lineage.

- Data Sharing: Delta Sharing is an open protocol developed by Databricks for secure data sharing with other organizations, regardless of the computing platforms they use. Databricks has built Delta Sharing into its Unity Catalog data governance platform, enabling a Databricks user, called a data provider, to share data with a person or group outside of their organization, called a data recipient.

All of these rich features, which are available with Unity Catalog (UC) out of the box, are not readily available in your Hive metastore today and would take a huge amount of your resources to build from the ground up. Additionally, most (if not all) of the newer Databricks features, such as Lakehouse Monitoring, Lakehouse Federation, and LakehouseIQ, are built on, governed by, and need Unity Catalog as a prerequisite to function. Delaying the upgrade of your data assets from HMS to UC would therefore limit your ability to take advantage of these newer product features.

Hence, one question that comes to mind is how you can easily upgrade tables registered in your existing Hive metastore to the Unity Catalog metastore so that you can take advantage of all the rich features Unity Catalog offers. In this blog, we'll walk you through considerations and methodologies, with examples, for upgrading your HMS tables to UC.

Upgrade Considerations and Prerequisites

In this section we review considerations for the upgrade before we dive deeper into the Upgrade Methodologies in the next section.

Upgrade Considerations

Variations of Hive Metastore tables is one such consideration. Hive Metastore tables identified for upgrade to Unity Catalog could have been created by mixing and matching types for each parameter shown in the table below. For example, one could have created a CSV Managed table using a DBFS root location, or a Parquet External table on an Amazon S3 location. This section describes the parameters based on which different variations of the tables could have been created.

| Parameter | Variation | Table Identification Guide |
|---|---|---|
| Table Type | Managed | Run |
| | External | Run |
| Data Storage Location | DBFS Root | Run |
| | DBFS Mounted Cloud Object Storage | Run |
| | Directly specifying cloud storage location (such as s3://, abfss:// or gs://) | Run |
| Table file format and interface | File formats such as Delta, Parquet, Avro | Run |
| | Interface such as Hive SerDe interface | Run |

Depending on the variations of the parameters mentioned above, the upgrade methodology you adopt could vary. Details are discussed in the Upgrade Methodologies section below.

Another point should be considered before you start the upgrade of HMS tables to UC in Azure Databricks:

For Azure Cloud: Tables currently stored on Blob storage (wasb) or ADLS Gen 1 (adl) need to be upgraded to ADLS Gen 2 (abfs). Otherwise, an error is raised if you try to use unsupported Azure cloud storage with Unity Catalog.

Error example: Table is not eligible for an upgrade from Hive Metastore to Unity Catalog. Reason: Unsupported file system scheme wasbs.
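
One way to catch this before attempting an upgrade is to inspect the URI scheme of each table's storage location. The sketch below is only an illustration: the function name and the example paths are hypothetical, and the set of schemes is our reading of the text above (`wasb`, `adl`) plus `wasbs` from the error example.

```python
from urllib.parse import urlparse

# Schemes named above as unsupported; "wasbs" is taken from the error example.
UNSUPPORTED_SCHEMES = {"wasb", "wasbs", "adl"}


def unsupported_scheme(location):
    """Return the location's scheme if it is in the unsupported set, else None."""
    scheme = urlparse(location).scheme.lower()
    return scheme if scheme in UNSUPPORTED_SCHEMES else None


# Hypothetical storage paths, for illustration only.
for path in [
    "wasbs://container@account.blob.core.windows.net/people",
    "abfss://container@account.dfs.core.windows.net/people",
]:
    print(path, "->", unsupported_scheme(path) or "ok")
```

The location itself would have to come from the table metadata in your Hive metastore; how you collect it is outside the scope of this sketch.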

Upgrade Prerequisites

Before starting the upgrade process, the storage credentials and external locations should be created as shown in the steps below.

- Create Storage Credential(s) with access to the target cloud storage.

- Create External Location(s) pointing to the target cloud storage using the storage credential(s).

- The External Locations are used for creating UC External Tables, Managed Catalogs, or Managed Schemas.
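
To make the second prerequisite concrete, here is a minimal sketch that builds a `CREATE EXTERNAL LOCATION` statement. It assumes the storage credential has already been created; the function name, the location and credential names, and the bucket path are all hypothetical.

```python
def create_external_location_sql(name, url, credential):
    """Build a CREATE EXTERNAL LOCATION statement for an existing storage credential."""
    return (
        f"CREATE EXTERNAL LOCATION IF NOT EXISTS `{name}` "
        f"URL '{url}' "
        f"WITH (STORAGE CREDENTIAL `{credential}`)"
    )


# Hypothetical names and path, for illustration only.
print(create_external_location_sql(
    "uc_upgrade_location",
    "s3://example-bucket/uc_upgrade/",
    "uc_upgrade_credential",
))
```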

Upgrade Methodologies

In this section we show you all the different upgrade options in the form of a matrix. We also use diagrams to show the steps involved in upgrading.

There are two primary methods for upgrading: using SYNC (for supported scenarios) or using data replication (where SYNC is not supported).

- Using SYNC: For all the supported scenarios (as shown in the Upgrade Matrix section below), use SYNC to upgrade HMS tables to UC. Using SYNC allows you to upgrade tables without data replication.

- Using Data Replication: For all unsupported scenarios (as shown in the Upgrade Matrix section below), use either Create Table As Select (CTAS) or DEEP CLONE*. This method requires data replication.

*Note: Consider using deep clone for HMS Parquet and Delta tables to copy the data and upgrade tables in UC from HMS. Use Create Table As Select (CTAS) for other file formats.
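
The choice between the two methods can be summarized as a small decision function. This is a sketch of the rule of thumb stated above, not an official algorithm: the function name is hypothetical, and whether SYNC is supported for a given table still has to be decided from the Upgrade Matrix.

```python
def choose_upgrade_method(sync_supported, file_format):
    """Pick an upgrade method following the rule of thumb in the text above."""
    if sync_supported:
        # Metadata-only upgrade, no data replication.
        return "SYNC"
    if file_format.lower() in {"delta", "parquet"}:
        # Data replication; deep clone is suggested for Delta and Parquet.
        return "DEEP CLONE"
    # Data replication for any other file format.
    return "CTAS"


print(choose_upgrade_method(sync_supported=False, file_format="CSV"))
```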

The diagrams below describe the upgrade steps for each method. To understand which method to use for your upgrade use case, refer to the Upgrade Matrix section below.

Pictorial Representation of the Upgrade

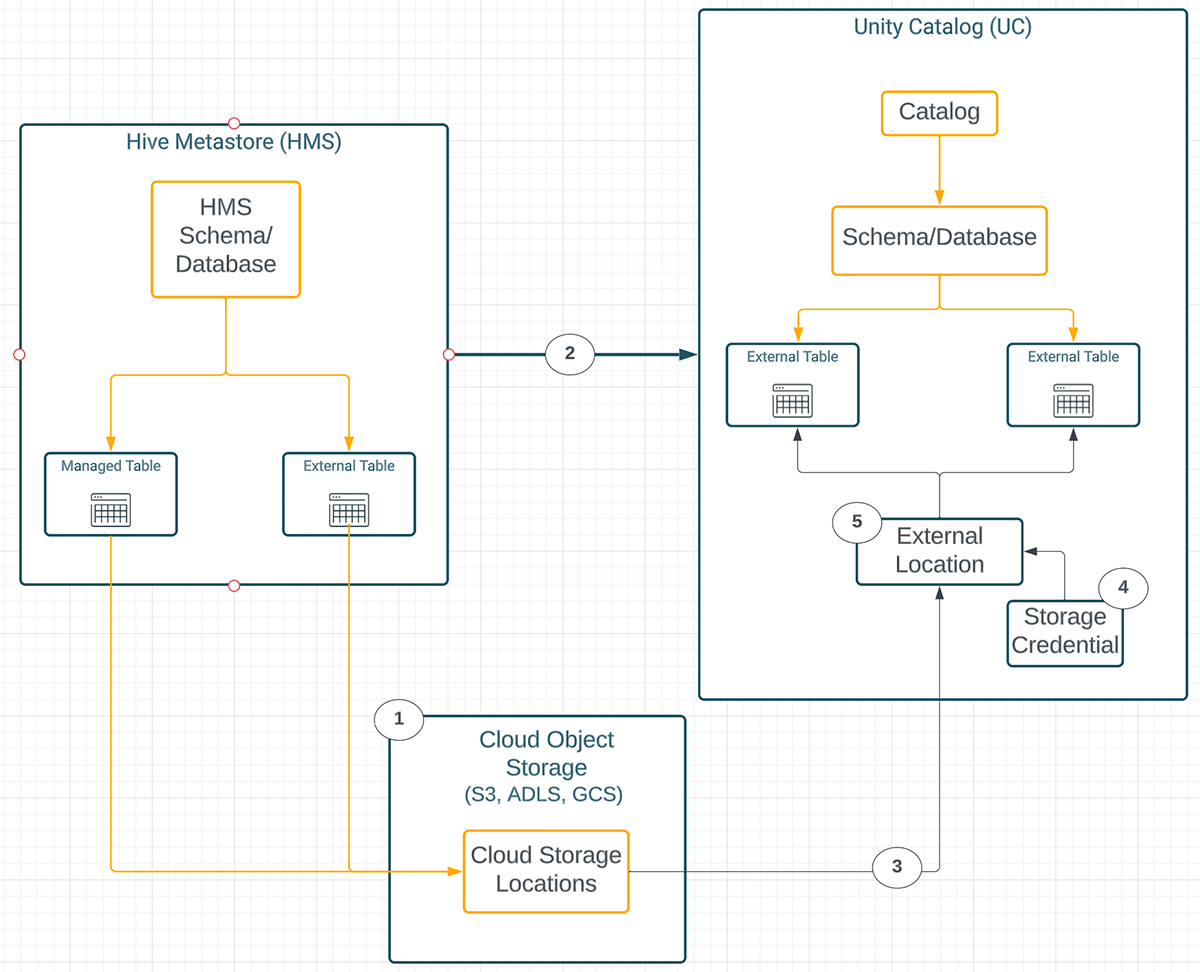

Diagram 1: Upgrading HMS tables to UC using SYNC (without data replication)

Diagram Keys:

- HMS Managed and External tables store data as a directory of files on cloud object storage.

- The SYNC command is used to upgrade table metadata from HMS to UC. Target UC tables are External irrespective of the source HMS table types.

- No data is copied when the SYNC command is used for upgrading tables from HMS to UC. The same underlying cloud storage location (used by the source HMS table) is referred to by the target UC External table.

- A storage credential represents an authentication and authorization mechanism for accessing data stored on your cloud tenant.

- An external location is an object that combines a cloud storage path with a storage credential that authorizes access to the cloud storage path.
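
The SYNC flow in Diagram 1 can be sketched as follows. This is a minimal illustration that only builds the SQL text; the function names and the target catalog `main` are hypothetical. We default to `DRY RUN` here, on the assumption that you would want to check eligibility before changing any metadata.

```python
def sync_table_sql(uc_table, hms_table, dry_run=True):
    """Build a SYNC TABLE statement; DRY RUN validates without upgrading."""
    stmt = f"SYNC TABLE {uc_table} FROM {hms_table}"
    return (stmt + " DRY RUN") if dry_run else stmt


def sync_schema_sql(uc_schema, hms_schema, dry_run=True):
    """Build a SYNC SCHEMA statement covering the tables of one HMS schema."""
    stmt = f"SYNC SCHEMA {uc_schema} FROM {hms_schema}"
    return (stmt + " DRY RUN") if dry_run else stmt


# Hypothetical target catalog "main"; the source names follow the examples in this blog.
print(sync_table_sql(
    "main.hmsdb_upgrade_db.people_delta",
    "hive_metastore.hmsdb_upgrade_db.people_delta",
))
```

In a Databricks notebook, the statement could be passed to `spark.sql(...)`, first with `dry_run=True` and then with `dry_run=False` once the result looks right.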

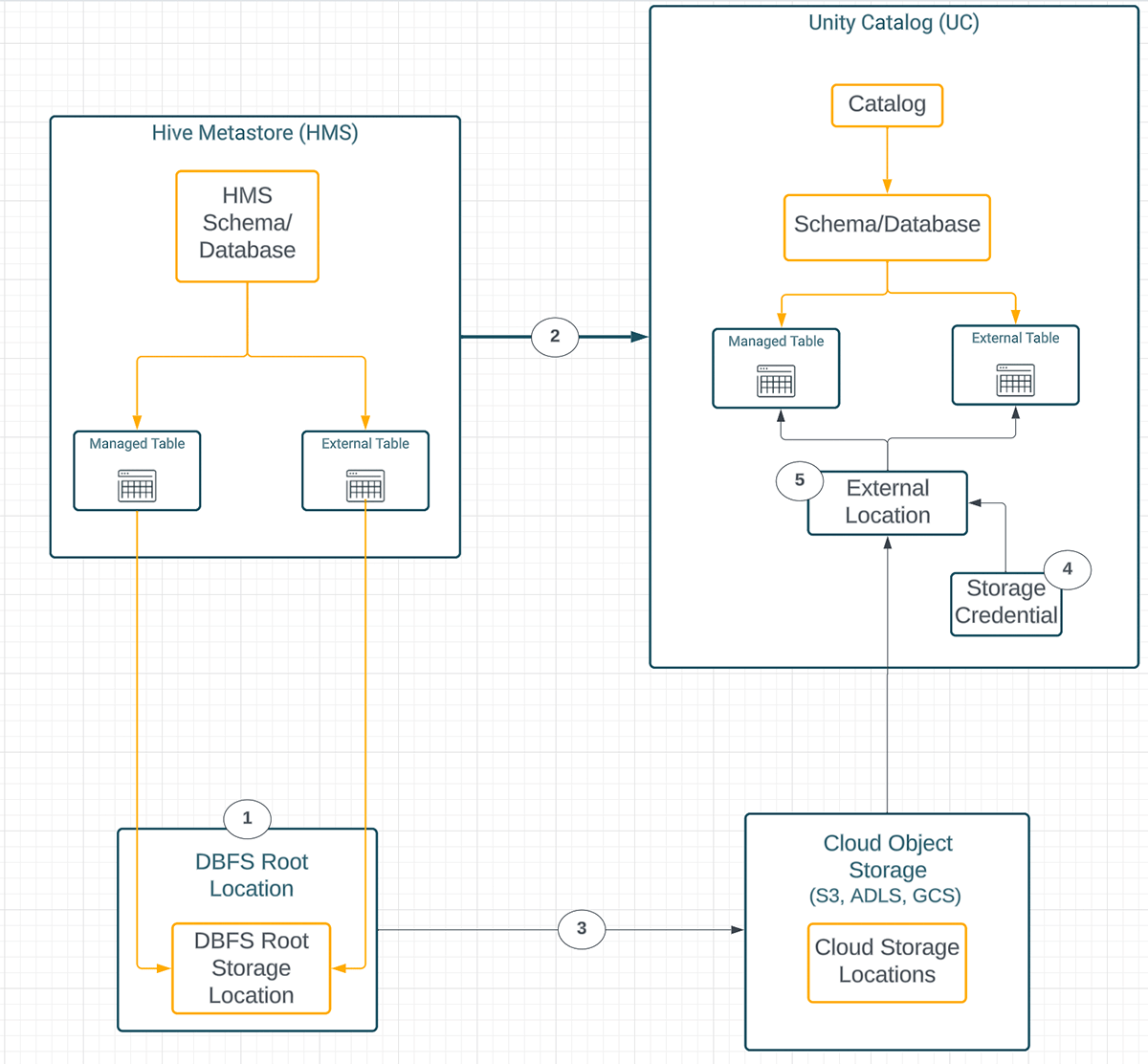

Diagram 2: Upgrading HMS tables to UC with data replication

Diagram Keys:

- HMS Managed and External tables store data as a directory of files on the DBFS Root Storage Location.

- CTAS or Deep Clone creates the UC target table metadata from the HMS table. One can choose to upgrade to an External or Managed table irrespective of the HMS table type.

- CTAS or Deep Clone copies data from DBFS root storage to the target cloud storage.

- A storage credential represents an authentication and authorization mechanism for accessing data stored on your cloud tenant.

- An external location is an object that combines a cloud storage path with a storage credential that authorizes access to the cloud storage path.
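
The data replication flow in Diagram 2 can be sketched in the same way. The helpers below only build SQL text and are not a definitive implementation: the function names, the `main` catalog, and the storage path are hypothetical. Passing a `location` yields an External target table, while omitting it yields a Managed one.

```python
def deep_clone_sql(uc_table, hms_table, location=None):
    """Build a DEEP CLONE statement (suggested above for Delta and Parquet sources)."""
    stmt = f"CREATE TABLE IF NOT EXISTS {uc_table} DEEP CLONE {hms_table}"
    if location:
        stmt += f" LOCATION '{location}'"
    return stmt


def ctas_sql(uc_table, hms_table, location=None):
    """Build a CTAS statement (suggested above for other file formats)."""
    stmt = f"CREATE TABLE IF NOT EXISTS {uc_table}"
    if location:
        stmt += f" LOCATION '{location}'"
    return stmt + f" AS SELECT * FROM {hms_table}"


# Hypothetical target names and path, for illustration only.
source = "hive_metastore.hmsdb_upgrade_db.people_parquet"
print(deep_clone_sql("main.hmsdb_upgrade_db.people_parquet", source))
print(ctas_sql("main.hmsdb_upgrade_db.people_ext", source,
               location="s3://example-bucket/uc_upgrade/people_ext/"))
```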

Upgrade Matrix

The tables below showcase the different possibilities for upgrading HMS tables to UC tables. For each scenario, we provide steps that you can follow for the upgrade.

HMS Storage Format using DBFS Root Storage

| Ex. | HMS Table Type | Description of HMS Table Type | Example of HMS Table | Target UC Table Type | Target UC Data File Format | Upgrade Method |
|---|---|---|---|---|---|---|
| 1 | Managed | The data files for the managed tables reside within DBFS Root (the default location for the Databricks managed HMS database). | `%sql create table if not exists hive_metastore.hmsdb_upgrade_db.people_parquet` | External or Managed | Delta is the preferred file format for both Managed and External tables. External tables do support non-Delta file formats (see Note 1). | CTAS or Deep Clone |
| 2 | External | The data files for the External tables reside within DBFS Root. The table definition has the "Location" clause, which makes the table external. | `%sql create table if not exists hive_metastore.hmsdb_upgrade_db.people_parquet` | External or Managed | Delta is the preferred file format for both Managed and External tables. External tables do support non-Delta file formats (see Note 1). | CTAS or Deep Clone |

1. Note: Preferably change it to Delta while upgrading with CTAS.

HMS Hive SerDe table

| Ex. | HMS Table Type | Description of HMS Table Type | Example of HMS Table | Target UC Table Type | Target UC Data File Format | Upgrade Method |
|---|---|---|---|---|---|---|
| 3 | Hive SerDe External or Managed (see Note 2) | These are the tables created using the Hive SerDe interface. Refer to this link to learn more about Hive tables on Databricks. | `%sql CREATE TABLE if not exists hive_metastore.hmsdb_upgrade_db.parquetExample (id int, name string)` | External or Managed | Delta is the preferred file format for both Managed and External tables. External tables do support non-Delta file formats (see Note 3). | CTAS or Deep Clone |

2. Note: Irrespective of the underlying storage format, Hive SerDe follows the same upgrade path.

3. Note: Preferably change it to Delta while you are doing the upgrade using CTAS.

HMS Storage Format using DBFS Mounted Storage

| Ex. | HMS Table Type | Description of HMS Table Type | Example of HMS Table | Target UC Table Type | Target UC Data File Format | Upgrade Method |
|---|---|---|---|---|---|---|
| 4 | Managed | The parent database has its location set to external paths, e.g., a mounted path from the object store. The table is created without a location clause and table data is saved underneath that default database path. | `%sql create table if not exists hive_metastore.hmsdb_upgrade_db.people_delta` | External | Same as the HMS source data file format | SYNC (see Note 4) |
| 5 | Managed | Same as Ex. 4 | Same as Ex. 4 | Managed | Delta | CTAS or Deep Clone |
| 6 | External | The table is created with a location clause and a path specifying a mounted path from a cloud object store. | `%sql create table if not exists hive_metastore.hmsdb_upgrade_db.people_delta` | External | Same as the HMS source data file format | SYNC |
| 7 | External | Same as Ex. 6 | Same as Ex. 6 | Managed | Delta | CTAS or Deep Clone |

4. Note: Ensure that the HMS table is dropped individually after conversion to an external table. If the HMS database/schema was defined with a location and the database is dropped with the cascade option, then the underlying data will be lost and the upgraded UC tables will lose the data.

HMS Storage Format using Cloud Object Storage

| Ex. | HMS Table Type | Description of HMS Table Type | Example of HMS Table | Target UC Table Type | Target UC Data File Format | Upgrade Method |
|---|---|---|---|---|---|---|
| 8 | Managed | The parent database has its location set to external paths, e.g., a cloud object store. The table is created without a location clause and table data is saved underneath that default database path. | `%sql create database if not exists hive_metastore.hmsdb_upgrade_db location "s3://databricks-dkushari/hmsdb_upgrade_db/"; create table if not exists hive_metastore.hmsdb_upgrade_db.people_delta` | External | Same as the source data file format | SYNC (see Note 4) |
| 9 | Managed | Same as Ex. 8 | Same as Ex. 8 | Managed | Delta | CTAS or Deep Clone |
| 10 | External | The table is created with a location clause and a path specifying a cloud object store. | `%sql create table if not exists hive_metastore.hmsdb_upgrade_db.people_delta` | External | Same as the source data file format | SYNC |
| 11 | External | Same as Ex. 10 | Same as Ex. 10 | Managed | Delta | CTAS or Deep Clone |

Examples of upgrade

In this section, we provide a Databricks Notebook with examples for each scenario discussed above.

Conclusion

In this blog, we've shown how you can upgrade your Hive metastore tables to the Unity Catalog metastore. Please refer to the Notebook to try different upgrade options. You can also refer to the Demo Center to get started with automating the upgrade process. To automate upgrading Hive Metastore tables to Unity Catalog, we recommend you use this Databricks Lab repository.

Upgrade your tables to Unity Catalog today and benefit from unified governance features. After upgrading to UC, you can drop Hive metastore schemas and tables if you no longer need them. Dropping an external table does not modify the data files on your cloud tenant. Take precautions (as described in this blog) while dropping managed tables or schemas with managed tables.

Appendix

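```scala
import org.apache.spark.sql.catalyst.catalog.{CatalogTable, CatalogTableType}
import org.apache.spark.sql.catalyst.TableIdentifier

// Placeholder names: the table whose type will be changed, and its database.
val tableName = "table"
val dbName = "dbname"

// Fetch the current table metadata from the session catalog.
val oldTable: CatalogTable =
  spark.sessionState.catalog.getTableMetadata(TableIdentifier(tableName, Some(dbName)))

// Copy the metadata with the table type set to EXTERNAL, then persist the change.
val alteredTable: CatalogTable = oldTable.copy(tableType = CatalogTableType.EXTERNAL)
spark.sessionState.catalog.alterTable(alteredTable)
```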