Tim Berners-Lee’s legacy because the creator of the World Large Net is firmly cemented in historical past. However because the Web strayed away from its early egalitarian roots in the direction of one thing far more huge and company, Berners-Lee determined a radical new method to knowledge possession was wanted, which is why he co-founded an organization referred to as Inrupt.

As a substitute of getting your knowledge (i.e. knowledge about you) saved throughout an unlimited array of various company databases, Berners-Lee theorized, what if every particular person might be in command of his or her personal knowledge?

That’s the core thought behind Inrupt, the corporate that Berners-Lee co-founded in 2017 with tech exec John Bruce. Inrupt is the car via which the duo hope to unfold a brand new Web protocol, dubbed the Stable protocol, which facilitates distributed knowledge possession by and for the folks.

The concept is radical in its simplicity, however has far-reaching implications, not only for guaranteeing the privateness of information, but additionally to enhance the final high quality of information for constructing AI, says David Ottenhimer, Inrupt’s vp of belief and digital ethics.

“Tim Berners-Lee had a imaginative and prescient of the Net, which he applied,” Ottenheimer tells Datanami. “After which he thought it wanted a course correction after time frame. Primarily the forces that be centralizing knowledge in the direction of themselves had been the alternative of what we began with.”

When Berners-Lee wrote the primary proposal for the World Large Net in 1989, the Web was a a lot smaller and altruistic place than it’s now. Small teams of individuals, largely scientists, used the Internet to share their work.

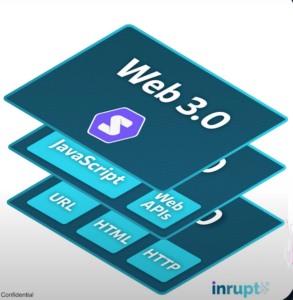

Because the Net grew extra commercialized, the static web sites of the Net 1.0 world had been now not ample for the challenges at hand, similar to the necessity to keep state for a procuring cart on an e-commerce web site. That kicked off the Net 2.0 period, characterised by extra JavaScript and APIs.

As Net corporations morphed into giants, they constructed large knowledge facilities to retailer huge quantities of person knowledge to work with their utility. Berners-Lee’s perception is that it’s terribly inefficient to have a number of, duplicate monoliths of person knowledge that aren’t even that correct. The Net 3.0 period as an alternative will usher within the age of federated knowledge storage and federated entry.

Within the Net 3.0 world that Inrupt is attempting to construct, knowledge about every particular person is saved of their very personal private knowledge retailer, or a pod. These pods might be hosted by an organization or perhaps a authorities on behalf of their residents, similar to the federal government of Flanders is at the moment doing for its 6 million residents.

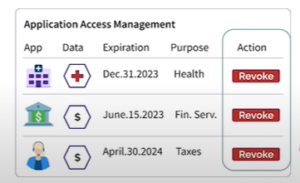

As a substitute requiring Net large to not lose or abuse billions of individuals’s knowledge, within the Inrupt scheme of issues, the person controls his or her personal knowledge by way of the pod. If a person desires to do enterprise with an organization on-line, they will grant entry to his her pod for a selected time frame, or simply for a selected sort of information. The corporate’s utility then interacts with that knowledge, in a federated method, to ship no matter service it’s.

“So as an alternative of chasing 500 variations of you across the Net and attempting to say ‘Replace my deal with, replace my title, replace my no matter,’ they arrive to you and it’s your knowledge they usually can see it one place,” Ottenheimer says.

Customers can delete firm entry to their knowledge utilizing the Stable protocol (Picture supply: Inrupt)

At a technical stage, the pods are materialized as RDF shops. In keeping with Ottenheimer, customers can retailer any sort of information they need, not simply HTML pages. “Apps can write to the info retailer with any sort of knowledge they will think about. It doesn’t must be a selected format,” he says. Whether or not it’s your poetry, the variety of chairs you’ve got in your house, your checking account information, or your healthcare file, it could possibly all be saved, secured, and accessed by way of pods and the Stable protocol.

This method brings apparent advantages to the person, who’s now empowered to handle his or her personal knowledge and grant corporations’ entry to it, if the deal is agreeable to them. It’s additionally a pure resolution for managing consent, which is a necessity on this planet of GDPR. Consent might be as granular because the person likes, they usually can cancel the consent at any time, very similar to they will merely flip off a bank card getting used to buy a service.

“The interoperability permits you to rotate to a different card. Get a brand new card, get a brand new quantity, so that you rotate your key and then you definately’re again to golden,” Ottenheimer says. “You [give consent] in a manner that is smart. You’re not giving it away perpetually, then discovering you possibly can now not get again the consent you gave years later and don’t know the place the consents are. We referred to as it the graveyard of previous consents.”

However this method additionally brings advantages to corporations, as a result of utilizing the W3C-sanctioned Stable protocol present a method to decouple knowledge, purposes, and identities. Corporations are also alleviated of the burden of getting to retailer and keep non-public and delicate knowledge in accordance with GDPR, HIPAA and different guidelines.

Plus, it yields greater high quality knowledge, Ottenheimer says.

“It’s very thrilling as a result of queries are supposed to be agile, extra real-time, as they are saying,” he says. “I keep in mind this from very huge retailers I labored with years in the past. You seize all this knowledge, you pull it in, after which there’s all types of safety necessities to forestall breaches, so that you pull issues into different databases. Now they’re out of sync, usually stale. Seven days previous is just too previous. And it’s unimaginable to get a recent sufficient set of information that’s protected sufficient. There’s all this stuff conspiring towards you to get an excellent question versus the pod mannequin, it’s inherently excessive efficiency, excessive scale, and prime quality.”

Corporations could also be detest to surrender management of information. In spite of everything, to turn into a “data-driven” firm, you kind of must be within the enterprise of storing, managing, and analyzing knowledge, proper? Nicely, Inrupt is attempting to show that assumption on its head.

In keeping with Ottenheimer, new tutorial analysis suggests that AI fashions constructed and operated in a federated mannequin atop remotely saved knowledge might outperform AI fashions constructed with a basic centrally managed datastore.

“The most recent analysis out of Oxford the truth is exhibits that it’s greater pace, greater efficiency whenever you distribute it,” he says. “In different phrases, when you run AI fashions and federated the info, you get greater efficiency and better scale, than when you attempt to pull the whole lot central and run it.”

At first, the centrally managed knowledge set will outperform the federated one. In spite of everything, the legal guidelines of physics do apply to knowledge, and geographic distance does add latency and complexity. However as updates to the info are required over time, the distributed mannequin will begin to outperform the centrally managed one, Ottenheimer says.

“At first, you’re going to get very quick outcomes for a really centralized, very giant knowledge set. You’ll be like, wow,” he says. “However then whenever you attempt to get larger and larger and larger, it falls over. And actually, it will get inaccurate and that’s the place it actually will get scary, as a result of as soon as the integrity involves bear, then how do you clear it up?

“And you’ll’t clear up large knowledge units which can be actually gradual,” he continues. “They turn into high heavy, and everyone appears to be like at them and says, I don’t perceive what’s happening inside. I don’t know what’s incorrect with them. Whereas if in case you have extremely distributed pod-based localized fashions and federated fashions utilizing federated studying, you possibly can clear it up.”

For Ottenheiner, who has labored at plenty of huge knowledge companies through the years and breaks AI fashions for enjoyable, the concept of a smaller however greater high quality knowledge set brings sure benefits.

“That huge knowledge factor that I labored on in 2012–that ship sailed,” he says. “We don’t need all the info on this planet as a result of it’s a bunch of rubbish. We wish actually good knowledge and we would like excessive efficiency via effectivity. We received’t have knowledge facilities which can be the dimensions of Sunnyvale anymore that simply fritter away all of the power. We wish tremendous high-efficiency compute.”

The pod method might additionally pay dividends within the burgeoning world of generative AI. At the moment, folks predominantly are utilizing generalized fashions, similar to OpenAI‘s ChatGPT or Amazon’s Alexa, which was just lately upgraded with GenAI capabilities. There’s a single foundational mannequin that’s been educated on a whole lot of tens of millions of previous interactions, together with the brand new ones that customers are having with it as we speak.

There many privateness and ethics challenges with GenAI. However with the pod mannequin of customized knowledge and customized fashions, customers could also be extra inclined to make use of the fashions, Ottenheimer says.

“So in a pod, you possibly can tune it, you possibly can practice the mannequin to issues which can be related to you, and you’ll handle the protection of that knowledge, so that you get the confidentiality and also you get the integrity,” he says. “Let’s say for instance, you need it to unlearn one thing. Good luck [asking] Amazon…It’s such as you spilled ink into their water. Good luck getting that again out of their studying system in the event that they don’t plan forward for that sort of downside.

“Deleting the phrase I simply mentioned, in order that it doesn’t exist within the system, is unimaginable until you begin over they usually’re not going to begin over on a large scale,” he continues. “But when they design it across the idea of a pod, in fact you can begin over. Straightforward. Finished.”

Associated Objects:

AI Ethics Points Will Not Go Away

Privateness and Moral Hurdles to LLM Adoption Develop

Zoom Knowledge Debacle Shines Gentle on SaaS Knowledge Snooping

consent, Davi Ottenheimer, federated knowledge, federated studying, federated mannequin, pod, pod mannequin, Stable protocol, Tim Berners-Lee, W3C, Net 3.0