In his 2016 book, The Fourth Industrial Revolution, Klaus Schwab, the founder of the World Economic Forum, predicted the arrival of the next technological revolution, underpinned by artificial intelligence (AI). He argued that, like its predecessors, the AI revolution would have global socio-economic repercussions. Schwab’s writing was prescient. In November 2022, OpenAI released ChatGPT, a large language model (LLM) embedded with a conversational agent. The reception was phenomenal, with more than 100 million people using it within the first two months. Not only did ChatGPT garner widespread personal use, but hundreds of businesses promptly incorporated it and other LLMs to optimize their processes and enable new products. In this blog post, adapted from our recent whitepaper, we examine the capabilities and limitations of LLMs. In a future post, we will present four case studies that explore potential applications of LLMs.

Despite the consensus that this technology revolution will have global consequences, experts differ on whether its impact will be positive or negative. On one hand, OpenAI’s stated mission is to create systems that benefit all of humanity. On the other hand, following the release of ChatGPT, more than a thousand researchers and technology leaders signed an open letter calling for a six-month pause on the development of such systems out of concern for societal welfare.

As we navigate the fourth industrial revolution, we find ourselves at a juncture where AI, including LLMs, is reshaping entire sectors. But new technologies bring new challenges and risks. In the case of LLMs, these include disuse (the untapped potential of opportune LLM applications), misuse (reliance on LLMs where their use may be unwarranted), and abuse (exploitation of LLMs for malicious intent). To harness the advantages of LLMs while mitigating potential harms, it is imperative to address these issues.

This post describes the fundamental concepts underlying LLMs. Our follow-up post delves into a range of practical applications, encompassing data science, training and education, research, and strategic planning. Our goal is to demonstrate high-leverage use cases and to identify ways to curtail misuse, abuse, and disuse, thus paving the way for more informed and effective use of this transformative technology.

The Rise of GPT

At the heart of ChatGPT is a type of LLM called a generative pretrained transformer (GPT). GPT-4 is the fourth in a series of GPT foundation models dating back to 2018. Like its predecessor, GPT-4 can accept text inputs, such as questions or requests, and produce written responses. Its competencies reflect the massive corpora of data on which it was trained. GPT-4 exhibits human-level performance on academic and professional benchmarks, including the Uniform Bar Exam, LSAT, SAT, GRE, and AP subject tests. Moreover, GPT-4 performs well on computer coding problems, commonsense reasoning, and practical tasks that require synthesizing information from many different sources. GPT-4 outperforms its predecessor in all these areas. Even more significantly, GPT-4 is multimodal, meaning that it can accept both text and image inputs. This capability allows GPT-4 to be applied to entirely new problems.

OpenAI facilitated public access to GPT-4 through a chatbot named ChatGPT Plus, offering no-code options for using GPT-4. It further extended GPT-4’s reach by releasing a GPT-4 plugin, providing low-code options for integrating it into business applications. These moves have significantly lowered the barriers to adopting this transformative technology. Concurrently, the emergence of open-source GPT-4 alternatives such as LLaMA and Alpaca has catalyzed widespread experimentation and prototyping.

What are LLMs?

Although GPT-4 is new, language models are not. The complexity and richness of language is one of the distinguishing characteristics of human cognition. As a result, AI researchers have long attempted to emulate human language using computers. Indeed, natural language processing (NLP) can be traced to the origins of AI. In his 1950 article “Computing Machinery and Intelligence,” Alan Turing included the automated generation and understanding of human language as a criterion for AI.

In the years since, NLP has undergone several paradigm shifts. Early work on symbolic NLP relied on hand-crafted rules. This reliance gave way to statistical NLP in the 1990s, which used machine learning to infer the rules of language from data, much as humans do. Advances in computing hardware and algorithms led to neural NLP in the 2010s. The family of techniques used for neural NLP, collectively known as deep learning, is more flexible and expressive than those used for statistical NLP. Further advances in deep learning, including the application of these techniques to datasets several orders of magnitude larger than was previously possible, have now allowed pre-trained LLMs to meet the criterion set out by Turing in his seminal work on AI.

Fundamentally, today’s language models share an objective with earlier NLP approaches: to predict the next word(s) in a sequence. This allows the model to ‘score’ the probability of different combinations of words making up sentences. In this way, language models can respond to human prompts in syntactically and semantically correct ways.
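Scoring a whole sentence rests on the chain rule of probability, a standard identity rather than anything specific to GPT: the probability of a word sequence factors into a product of next-word probabilities. The right-hand approximation keeps only the previous word, which is the simple model discussed next; treating $x_0$ as a start-of-sentence marker is an assumption of this sketch.

$$P(x_1, x_2, \ldots, x_N) = \prod_{n=1}^{N} P(x_n \mid x_1, \ldots, x_{n-1}) \approx \prod_{n=1}^{N} P(x_n \mid x_{n-1})$$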

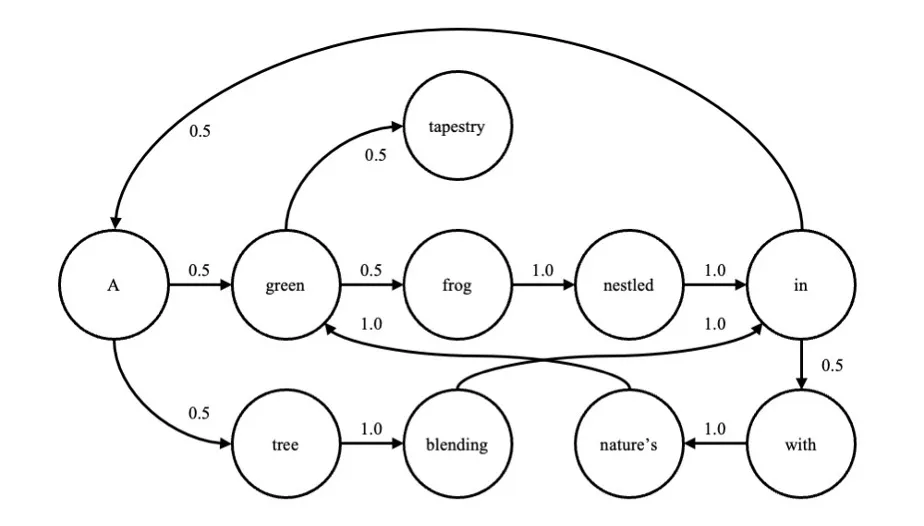

Consider the sentence, “A green frog nestled in a tree, blending in with nature’s green tapestry.” By merging repeated words, it’s possible to create a graph of transition probabilities; that is, the probability of each word given the previous one (Figure 1).

Figure 1: Simple Language Model Representing Word Transition Probabilities

The graph may be expressed as

$$P(x_n \mid x_{n-1}).$$
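A minimal sketch of how the transition probabilities in Figure 1 can be counted from the example sentence. The tokenizer (lowercase, keep runs of letters and apostrophes) and the name `bigram_probabilities` are illustrative assumptions; a practical model would use a far larger corpus and smoothing for unseen word pairs.

```python
import re
from collections import Counter, defaultdict


def bigram_probabilities(text: str) -> dict[str, dict[str, float]]:
    """Estimate P(x_n | x_{n-1}) from raw counts of adjacent word pairs."""
    # Lowercase and keep runs of letters/apostrophes, so "A" and "a" merge
    tokens = re.findall(r"[a-z']+", text.lower())
    counts: defaultdict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    # Normalize each row of counts into a probability distribution
    probs = {}
    for prev, row in counts.items():
        total = sum(row.values())
        probs[prev] = {nxt: c / total for nxt, c in row.items()}
    return probs


sentence = "A green frog nestled in a tree, blending in with nature's green tapestry."
probs = bigram_probabilities(sentence)
print(probs["green"])  # {'frog': 0.5, 'tapestry': 0.5}
print(probs["in"])     # {'a': 0.5, 'with': 0.5}
print(probs["frog"])   # {'nestled': 1.0}
```

Merging the repeated words “a,” “green,” and “in” is what creates the branching nodes in the graph: each of them is followed by two different words, so each outgoing edge gets probability 0.5.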

These transition probabilities can be estimated directly from a large corpus of text. Of course, the probability of each word depends on more than just the previous one. A more complete model would calculate the probability given the previous k words. For example, for k = 10, this can be expressed as

$$P(x_n \mid x_{n-1}, x_{n-2}, \ldots, x_{n-10}).$$

Herein lies the problem. As the length of the sequence increases, the transition matrix becomes intractably large. Moreover, only a small fraction of the possible combinations of words has occurred or will ever occur.
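A back-of-the-envelope sketch of why the table explodes: with a vocabulary of V words and a context of k words, a full lookup table needs V^k rows (one per possible context) of V entries each. The 50,000-word vocabulary below is an assumed figure chosen only for illustration.

```python
def transition_table_size(vocab_size: int, context_len: int) -> int:
    """Number of entries in a full lookup table for P(next word | context).

    Every one of the vocab_size ** context_len possible contexts needs its
    own row, and each row holds one probability per vocabulary word.
    """
    return vocab_size ** context_len * vocab_size


VOCAB_SIZE = 50_000  # assumed vocabulary size, for illustration only
for k in (1, 2, 10):
    cells = transition_table_size(VOCAB_SIZE, k)
    print(f"{k:>2} previous word(s): {cells:.2e} entries")
# prints 2.50e+09, 1.25e+14, and 4.88e+51 entries, respectively
```

Even a one-word context already needs billions of entries, almost all of which would be zero because those word pairs never appear in any corpus.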

Neural networks are universal function approximators. Given enough hidden layers and units, a neural network can learn to approximate an arbitrarily complex function, including functions like the one shown above. This property of neural networks has led to their use in LLMs.
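A minimal sketch, not GPT’s architecture: a hypothetical fixed-window neural language model in the spirit of early feed-forward neural language models (word embeddings, one tanh hidden layer, softmax output, biases omitted). Its weights are random rather than trained, so its probabilities are meaningless; the point is that it replaces the lookup table with a function whose weight count grows linearly with the context length. The class name and the sizes (100-dimensional embeddings, 200 hidden units) are assumptions.

```python
import math
import random


def softmax(scores: list[float]) -> list[float]:
    """Convert raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def param_count(vocab_size: int, context_len: int, dim: int, hidden: int) -> int:
    """Weights in the sketch below: embeddings + hidden layer + output layer."""
    return vocab_size * dim + context_len * dim * hidden + hidden * vocab_size


class TinyWindowLM:
    """Hypothetical fixed-window neural language model (untrained, no biases).

    Maps the ids of the previous `context_len` words to a probability for
    every word in the vocabulary, replacing the giant lookup table.
    """

    def __init__(self, vocab_size: int, context_len: int, dim: int, hidden: int, seed: int = 0):
        rng = random.Random(seed)

        def matrix(rows: int, cols: int) -> list[list[float]]:
            return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

        self.context_len = context_len
        self.embed = matrix(vocab_size, dim)               # one vector per word
        self.w_hidden = matrix(hidden, context_len * dim)  # hidden layer
        self.w_out = matrix(vocab_size, hidden)            # one score per word

    def next_word_probs(self, context: list[int]) -> list[float]:
        if len(context) != self.context_len:
            raise ValueError(f"expected {self.context_len} word ids, got {len(context)}")
        # Concatenate the context words' embeddings into one input vector
        x = [v for word_id in context for v in self.embed[word_id]]
        h = [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in self.w_hidden]
        scores = [sum(w * hi for w, hi in zip(row, h)) for row in self.w_out]
        return softmax(scores)


model = TinyWindowLM(vocab_size=13, context_len=3, dim=4, hidden=8)
probs = model.next_word_probs([0, 1, 2])
print(len(probs), round(sum(probs), 6))  # 13 1.0
# Weights for a 50,000-word vocabulary and a 10-word context:
print(f"{param_count(50_000, 10, 100, 200):,}")  # 15,200,000
```

Training would adjust these weights so that the predicted distribution matches the words actually observed in a corpus.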

Components of an LLM

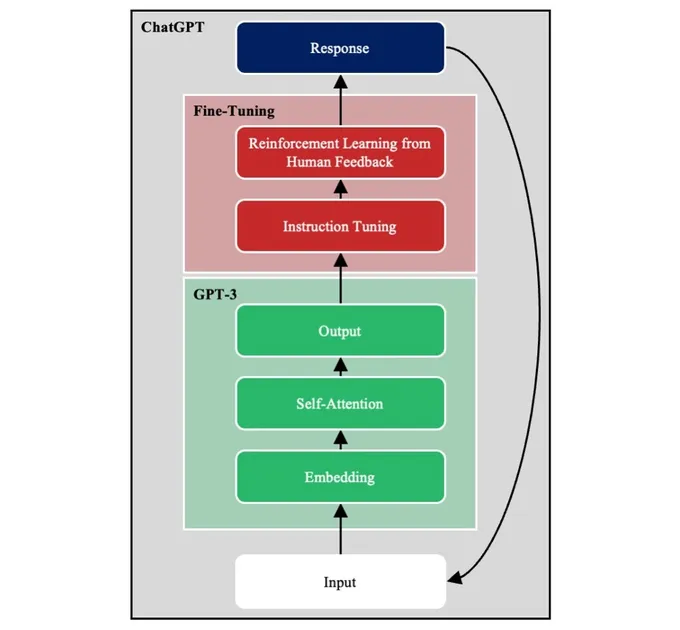

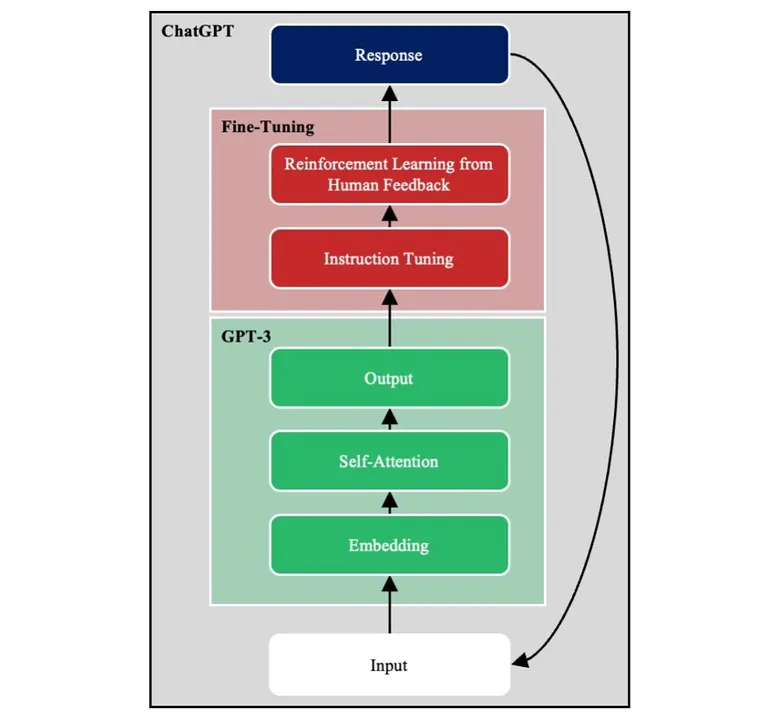

Figure 2 shows the major components of GPT-3, an exemplar LLM embedded in the conversational agent ChatGPT. We discuss each of these components in turn.

Figure 2: Main Components of an LLM

Deep Neural Network

GPT-3 is a deep neural network (DNN). Its input layer takes a passage of text broken down into tokens (words or pieces of words), and its output layer returns the probabilities of all possible tokens appearing next. The input is transformed through a series of hidden layers to produce the output.
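A minimal sketch of the last step of that pipeline: the output layer produces one raw score (logit) per token, and a softmax turns those scores into next-token probabilities. The four-token vocabulary and its scores are invented for illustration; a real model scores every token in its vocabulary.

```python
import math


def next_token_distribution(logits: dict[str, float]) -> dict[str, float]:
    """Softmax: convert the output layer's raw scores into probabilities."""
    m = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - m) for tok, s in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


# Hypothetical logits after the input "The investor went to the"
logits = {"bank": 3.0, "river": 1.0, "store": 2.0, "moon": -1.0}
probs = next_token_distribution(logits)
for tok, p in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{tok:>6}: {p:.3f}")
```

Softmax preserves the ranking of the scores while guaranteeing that the outputs are positive and sum to 1, which is what lets them be read as probabilities.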

GPT-3 is trained using self-supervised learning. Passages from the training corpus are given as input with the final word masked, and the model must predict the masked word. Because self-supervised learning does not require labeled outputs, it allows models to be trained on vastly more data than would be possible with supervised learning.
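A minimal sketch of why no labeling is needed: every position in a raw passage yields a training example whose “label” is simply the word that actually came next. Splitting on whitespace and the short `max_context` window are simplifying assumptions; GPT-3 works on subword tokens with a much longer context.

```python
def next_word_examples(passage: str, max_context: int = 4) -> list[tuple[list[str], str]]:
    """Turn unlabeled text into (context, target) training pairs.

    Each target is simply the word that actually came next in the passage,
    so no human labeling is needed: the text supervises itself.
    """
    words = passage.split()  # simplification: whitespace-separated words
    examples = []
    for i in range(1, len(words)):
        context = words[max(0, i - max_context):i]  # only the preceding words
        examples.append((context, words[i]))
    return examples


for context, target in next_word_examples("The bear went to the bank", max_context=3):
    print(" ".join(context), "->", target)
# The -> bear
# The bear -> went
# The bear went -> to
# bear went to -> the
# went to the -> bank
```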

Text Embeddings

The English language alone contains hundreds of thousands of words. GPT-3, like most other NLP models, projects word tokens onto embeddings. An embedding is a high-dimensional numeric vector that represents a word. At first glance, it may seem costly to represent each word as a vector of hundreds of numeric elements. In fact, compared with a one-hot encoding, which needs one element per vocabulary word, this process compresses the input from hundreds of thousands of dimensions to the hundreds of dimensions that make up the embedding space. Embeddings reduce the number of downstream model weights that must be learned. Moreover, they preserve similarities between words. Thus, the words Internet and web are closer to one another in the embedding space than to the word airplane.
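A minimal sketch of what “closer in the embedding space” means, using cosine similarity, one common measure for comparing embeddings. The four-dimensional vectors are hand-picked, hypothetical values; real models learn their vectors during training and use far more dimensions.

```python
import math

# Hypothetical 4-dimensional embeddings, hand-picked for illustration.
EMBEDDINGS = {
    "internet": [0.9, 0.8, 0.1, 0.0],
    "web":      [0.8, 0.9, 0.2, 0.1],
    "airplane": [0.1, 0.0, 0.9, 0.8],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """1.0 means same direction; values near 0 mean unrelated directions."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


E = EMBEDDINGS
print(f"internet ~ web:      {cosine_similarity(E['internet'], E['web']):.2f}")       # 0.99
print(f"internet ~ airplane: {cosine_similarity(E['internet'], E['airplane']):.2f}")  # 0.12
```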

Self-Attention

GPT-3 is a transformer architecture, meaning that it uses self-attention. Consider the two sentences, “The investor went to the bank” and “The bear went to the bank.” The meaning of bank depends on whether the second word is investor or bear. To interpret the final word, the model must relate it to the other words in the sentence.

Following the introduction of the transformer architecture in 2017, self-attention has become the preferred way to capture long-range dependencies in language.[1] Self-attention derives three vectors from the numeric vector representing each word (a query, a key, and a value), each produced by its own learned projection. It then compares each word’s query with the keys of the other words to compute attention weights, and it updates each word’s representation as a weighted sum of the values of the surrounding words (i.e., context). For example, self-attention shifts the numeric representation of bank toward a financial institution in the first sentence, and it shifts it toward a river in the second sentence.
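A minimal sketch of single-head scaled dot-product self-attention, with the causal mask that GPT-style models use so that each word attends only to itself and earlier words. It omits multiple heads, the output projection, and the other layers of a real transformer, and the embeddings and projection weights are random stand-ins, so the numbers show the mechanics rather than the bank example itself.

```python
import math
import random


def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Multiply an (n x m) matrix by an (m x p) matrix stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def softmax(row: list[float]) -> list[float]:
    m = max(row)  # finite here: each token can always attend to itself
    exps = [math.exp(x - m) for x in row]  # exp(-inf) == 0.0 for masked slots
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, w_q, w_k, w_v, causal=True):
    """Single-head scaled dot-product self-attention.

    x holds one embedding row per token. Returns the updated rows and the
    attention weights (row i: how much token i draws on each token).
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    scale = math.sqrt(len(k[0]))
    weights = []
    for i, q_i in enumerate(q):
        scores = [
            float("-inf") if causal and j > i  # GPT-style: ignore later tokens
            else sum(a * b for a, b in zip(q_i, k_j)) / scale
            for j, k_j in enumerate(k)
        ]
        weights.append(softmax(scores))
    # Each output row is a weighted sum of the value vectors
    return matmul(weights, v), weights


rng = random.Random(0)
tokens = ["The", "investor", "went", "to", "the", "bank"]
dim = 4
x = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in tokens]  # stand-in embeddings
w_q, w_k, w_v = ([[rng.gauss(0.0, 0.5) for _ in range(dim)] for _ in range(dim)]
                 for _ in range(3))
out, weights = self_attention(x, w_q, w_k, w_v)
print([round(w, 2) for w in weights[-1]])  # how "bank" mixes in every token
```

In a trained model, the learned projections would make the weight that bank places on investor (or bear) large enough to pull its representation toward the intended sense.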

Autoregression

GPT is an autoregressive model (i.e., it is trained to predict the next word in a passage). Other LLMs are trained to predict words that have been randomly masked within the body of the passage. The choice between autoregression and masking depends partly on the purpose of the LLM.

With one slight adjustment, an autoregressive model can be used for natural language generation. During inference, GPT-3 is run repeatedly. At each timestep, the output from the previous timestep is appended to the input, and GPT-3 is run again. In this way, GPT-3 completes its own utterances.
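A minimal sketch of that generation loop. A toy bigram counter stands in for GPT-3 (a hypothetical simplification: it looks only at the last word, whereas GPT-3 conditions on the whole sequence), and the next word is chosen greedily rather than sampled. The loop structure, run the model, append its output, run again, is the point.

```python
from collections import Counter, defaultdict


def train_bigram(corpus: str) -> dict[str, Counter]:
    """Toy stand-in for a trained model: counts of which word follows which."""
    words = corpus.lower().split()
    counts: dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(model, sequence):
    """One 'forward pass': the most likely next word given the sequence so far."""
    followers = model.get(sequence[-1])
    if not followers:
        return None  # the toy model has never seen this word
    return followers.most_common(1)[0][0]


def generate(model, prompt: str, max_new_words: int = 10) -> str:
    sequence = prompt.lower().split()
    for _ in range(max_new_words):
        nxt = predict_next(model, sequence)  # run the model on everything so far
        if nxt is None:
            break
        sequence.append(nxt)  # feed the model's own output back in as input
    return " ".join(sequence)


model = train_bigram("the investor went to the bank . the bank was open .")
print(generate(model, "the investor", max_new_words=4))  # the investor went to the bank
```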

Instruction Tuning

GPT-3 is trained to complete passages. Consequently, when GPT-3 receives the prompt “Write an essay on emerging technologies that will transform manufacturing,” it may respond, “The essay must contain 500 words.” This response would be expected if the training set included descriptions of school assignments. The problem is that sentence completion is not clearly aligned with the human intent of question answering.

To overcome this limitation, GPT-3 is augmented with instruction tuning. Specifically, a group of human experts creates pairs of prompts and idealized responses. This new training set is used for supervised fine-tuning (SFT), which better aligns GPT-3 with human intentions.
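A minimal sketch of how one expert-written prompt/response pair might be turned into fine-tuning targets. Training still uses next-word prediction; what changes is that the targets come from the idealized response. The example pair is hypothetical, and using only response words as targets is an assumption of this sketch rather than a documented detail of GPT-3’s training.

```python
def sft_examples(prompt: str, response: str) -> list[tuple[str, str]]:
    """Turn one expert demonstration into (context, next-word) training targets.

    Training still uses next-word prediction, but the targets now come from
    the expert's response, so the model learns to answer the prompt rather
    than merely continue it. In this sketch, only response words are targets.
    """
    prompt_words, response_words = prompt.split(), response.split()
    examples = []
    for i, target in enumerate(response_words):
        context = " ".join(prompt_words + response_words[:i])
        examples.append((context, target))
    return examples


demo = {  # hypothetical prompt/response pair written by a human expert
    "prompt": "Name three technologies transforming manufacturing.",
    "response": "Robotics, 3D printing, and AI.",
}
examples = sft_examples(demo["prompt"], demo["response"])
print(len(examples))  # 5
print(examples[-1])   # ('Name three technologies transforming manufacturing. Robotics, 3D printing, and', 'AI.')
```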

Reinforcement Learning from Human Feedback

Following SFT, additional fine-tuning is performed using reinforcement learning from human feedback (RLHF). In short, GPT-3 generates several possible responses to a prompt, which human raters rank from best to worst. These data are used to create a reward model that predicts the quality of model responses. The reward model is then used to train GPT-3 at scale to produce responses better aligned with human intent.
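A minimal sketch of how a ranking can train a reward model, using one common formulation (the pairwise loss described in OpenAI’s InstructGPT work): every ranking of K responses is split into all (better, worse) pairs, and the loss rewards scoring the preferred response higher. The scores are hypothetical, and the later reinforcement-learning step that uses the reward model to update GPT-3 is not shown.

```python
import math
from itertools import combinations


def pairwise_ranking_loss(ranked_rewards: list[float]) -> float:
    """Average reward-model loss over every pair drawn from one human ranking.

    ranked_rewards holds the reward model's scores for K responses, listed in
    the order raters ranked them (best first). For each (better, worse) pair
    the loss is -log(sigmoid(r_better - r_worse)), which shrinks as the model
    scores the preferred response further above the other one.
    """
    pairs = list(combinations(ranked_rewards, 2))  # preserves best-first order
    total = 0.0
    for r_better, r_worse in pairs:
        total += math.log1p(math.exp(-(r_better - r_worse)))  # = -log(sigmoid(margin))
    return total / len(pairs)


# Hypothetical scores for four responses, listed in the raters' order.
agrees = [2.0, 1.0, 0.0, -1.0]     # reward model agrees with the raters
disagrees = [-1.0, 0.0, 1.0, 2.0]  # reward model ranks them backwards
print(pairwise_ranking_loss(agrees) < pairwise_ranking_loss(disagrees))  # True
```

Minimizing this loss over many rankings pushes the reward model to reproduce the raters’ preferences, which is what lets it stand in for them at scale.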

The most direct application of SFT and RLHF is to shift GPT-3 from sentence completion to question answering. However, system designers have other objectives, such as minimizing the use of biased language. By modeling appropriate responses with SFT, and by downvoting inappropriate ones with RLHF, designers can align GPT-3 with these objectives as well, reducing biased or bigoted responses.

Engineered and Emergent Abilities of LLMs

A comprehensive evaluation of GPT shows that it frequently produces syntactically and semantically correct responses across a wide range of text and computer programming prompts. Thus, it can encode, retrieve, and apply pre-existing knowledge, and it can communicate that knowledge in syntactically correct ways.

More surprisingly, LLMs like GPT-3 exhibit unanticipated emergent abilities:

- in-context learning: GPT-3 considers the preceding conversation to generate responses that are aligned with the given context. Thus, depending on the preceding conversation, GPT-3 can respond to the same prompt in very different ways. This is known as in-context learning because GPT-3 modifies its behavior without requiring changes to its pre-trained weights.

- instruction following: Instruction following is an example of in-context learning. Consider the two prompts, “Write a poem; include flour, eggs, and sugar” and “Write a recipe; include flour, eggs, and sugar.” The instruction establishes context, which shapes GPT-3’s response. In this way, GPT-3 can perform new tasks without re-training.

- few-shot learning: Few-shot learning is another example of in-context learning. The user provides examples of the type and format of the desired outputs. The examples establish context, which again allows GPT-3 to generalize to new tasks without re-training.
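A minimal sketch of how instruction following and few-shot learning differ only in the prompt text: worked examples are placed in the context, and nothing about the model’s weights changes. The sentiment task, the `Input:`/`Output:` layout, and the helper name `build_prompt` are illustrative assumptions; the resulting string would be sent to an LLM, which is not shown.

```python
def build_prompt(instruction: str, examples: list[tuple[str, str]], query: str) -> str:
    """Assemble an instruction, worked examples, and a new query into one prompt."""
    lines = [instruction, ""]
    for text, label in examples:  # each worked example shows the desired form
        lines += [f"Input: {text}", f"Output: {label}", ""]
    lines += [f"Input: {query}", "Output:"]  # left open for the model to complete
    return "\n".join(lines)


prompt = build_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [("The battery lasts all day.", "positive"),
     ("It broke after a week.", "negative")],
    "Setup took five minutes and it works perfectly.",
)
print(prompt)
```

Passing an empty list of examples yields a pure instruction-following prompt; adding examples turns the same call into a few-shot prompt.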

Prompt engineering refers to phrasing prompts in particular ways to elicit different types of responses. It is another example of in-context learning. However, the use of SFT and RLHF in GPT-3 reduces the need for prompt engineering for common tasks, such as question answering.

Looking Ahead

The AI revolution represents an expansive evolution of machine automation, encompassing both routine and non-routine tasks. Parasuraman’s cautionary notes on the misuse and abuse of automation extend to AI, including LLMs.

In this post, we hinted at the potential for misuse of LLMs. For example, human users may inadvertently accept factually incorrect responses from ChatGPT. LLMs can also be subject to abuse. For example, adversaries may use them to generate misinformation or to unleash new forms of malware. To address these risks, a comprehensive risk mitigation plan must encompass strategic system design, end-user training, testing and evaluation, and robust defense mechanisms. Although these risks can be reduced, however, they cannot be eliminated.

Parasuraman also cautioned against disuse, or the failure to adopt automation when doing so would be beneficial. Once again, this warning extends to AI. As the AI revolution unfolds, therefore, we must remain mindful of potential harms while equally recognizing and embracing the remarkable potential for societal benefits.

In the next post in this series, we will explore four case studies that present powerful opportunities for LLMs to augment human intelligence.

Brown, T., Mann, B., Ryder, N., Subbiah, M., Kaplan, J. D., Dhariwal, P., & Amodei, D. (2020). Language models are few-shot learners. Advances in Neural Information Processing Systems, 33, 1877–1901.

OpenAI. (2023). GPT-4 technical report.

Parasuraman, R., & Riley, V. (1997). Humans and automation: Use, misuse, disuse, abuse. Human Factors, 39(2), 230–253.

Schwab, K. (2017). The fourth industrial revolution. Crown Publishing, New York, NY.

Turing, A. (1950). Computing machinery and intelligence. Mind, LIX(236), 433–460.

Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., & Polosukhin, I. (2017). Attention is all you need. Advances in Neural Information Processing Systems, 30.
