Thousands of Databricks customers use Databricks Workflows every day to orchestrate business-critical workloads on the Databricks Lakehouse Platform. As is often the case, many of our customers’ use cases require non-trivial workflows that include DAGs (Directed Acyclic Graphs) with a very large number of tasks and complex dependencies between them. As you can imagine, defining, testing, managing, and troubleshooting complex workflows is incredibly challenging and time-consuming.

Breaking down complex workflows

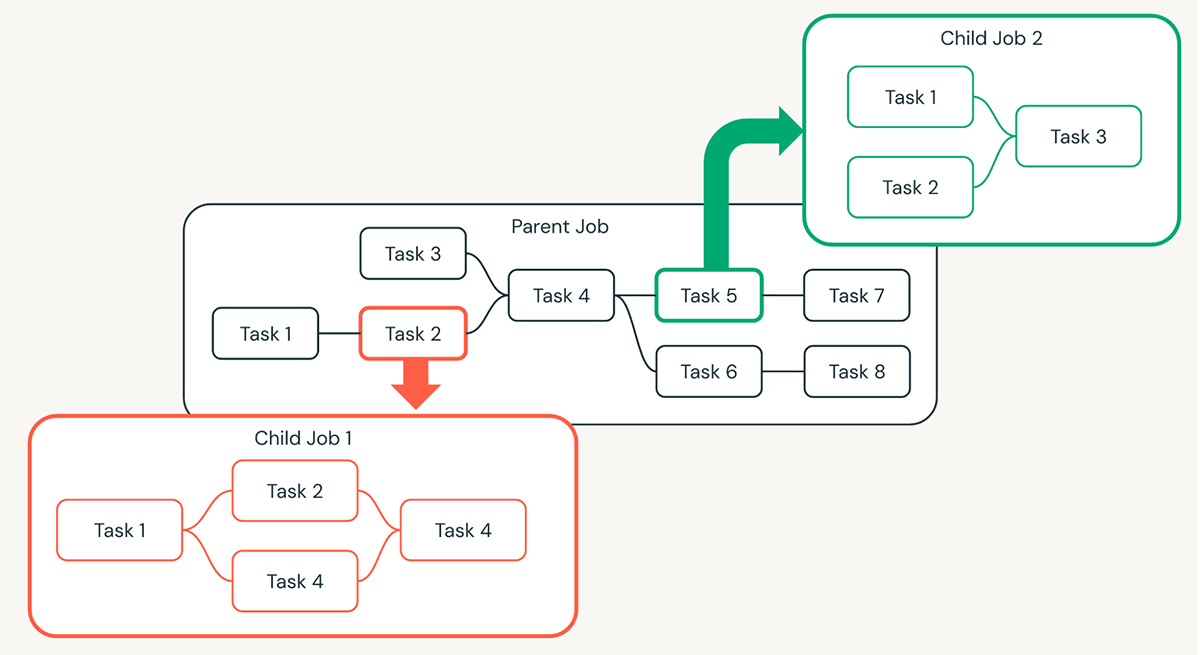

One way to simplify complex workflows is to take a modular approach. This entails breaking down large DAGs into logical chunks, or smaller “child” jobs, that are defined and managed separately. These child jobs can then be called from the “parent” job, making the overall workflow much simpler to understand and maintain.

Why modularize your workflows?

There are several reasons to divide a parent job into smaller chunks. By far the most common reason we hear from customers is the need to split a DAG along organizational boundaries, so that different teams in an organization can work together on different parts of a workflow. This way, ownership of parts of the workflow can be better managed, with different teams potentially using different code repositories for the jobs they own. Child job ownership across teams extends to testing and updates, making the parent workflows more reliable.

An additional reason to consider modularization is reusability. When several workflows have common steps, it makes sense to define those steps in a job once and then reuse that job as a child job in different parent workflows. By using parameters, reused tasks can be made flexible enough to fit the needs of different parent workflows. Reusing jobs reduces the maintenance burden of workflows, ensures updates and bug fixes occur in one place, and simplifies complex workflows. As we add more control flow capabilities to Workflows in the near future, another scenario we see being useful to customers is looping a child job, passing it different parameters with each iteration. (Note that looping is an advanced control flow feature you’ll hear more about soon, so stay tuned!)

Implementing modular workflows

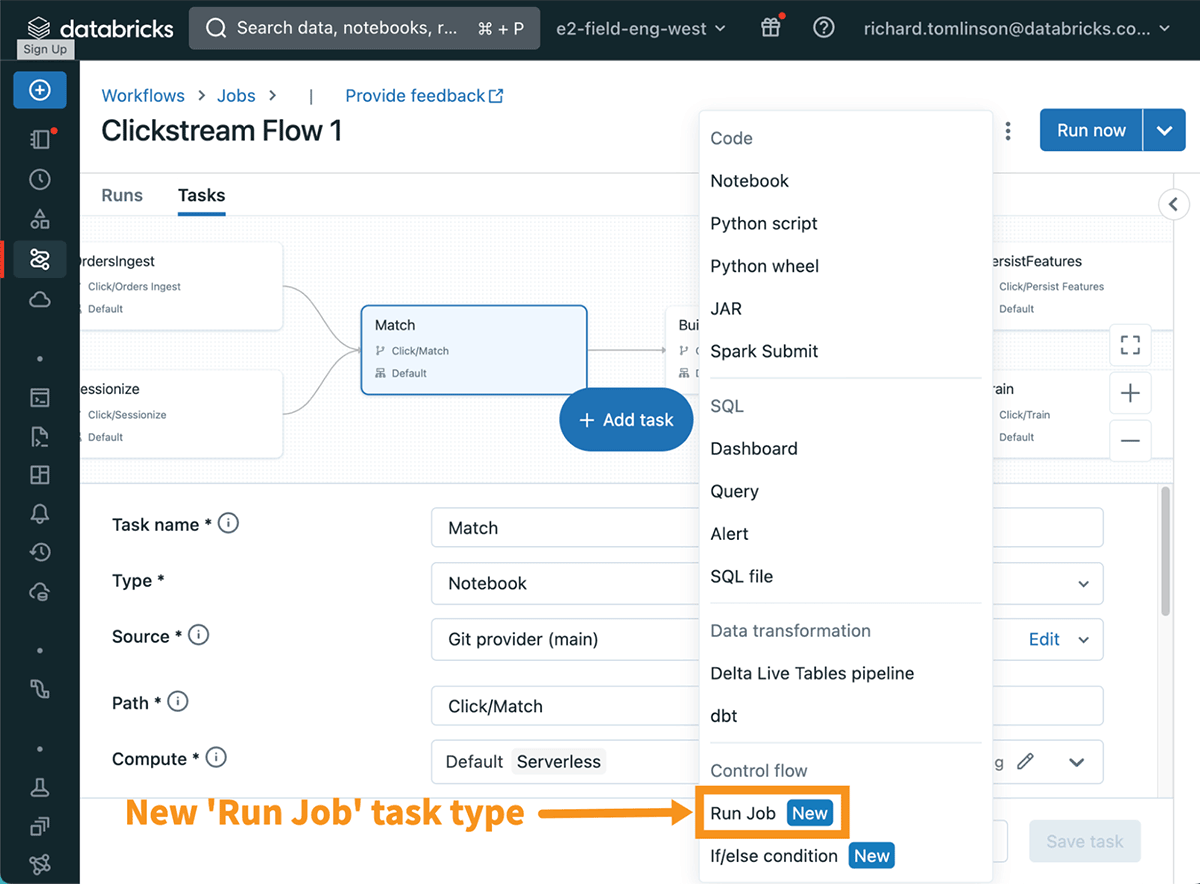

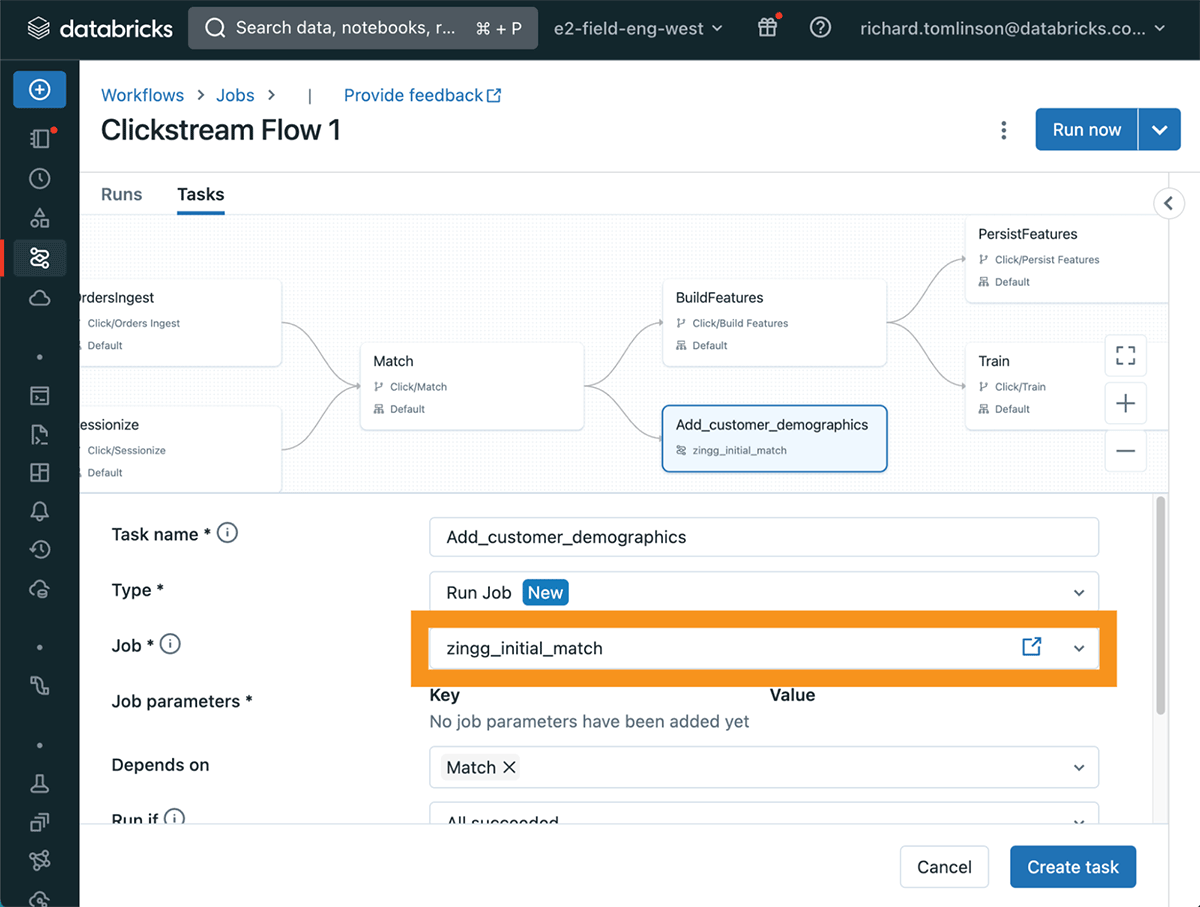

Among the new capabilities announced at the most recent Data + AI Summit is a new task type called “Run Job”. It lets Workflows users call a previously defined job as a task, which allows teams to build modular workflows.
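To make the idea concrete, here is a minimal sketch of what a parent job definition with “Run Job” tasks might look like when expressed as a Jobs API payload. It assumes the `run_job_task` field with `job_id` and `job_parameters`, as we understand the Jobs API 2.1 schema; please check the current API reference before relying on it. The job IDs, task keys, job name, and parameter names below are hypothetical and exist only for illustration.

```python
import json
from typing import Any, Dict, List, Optional


def run_job_task(
    task_key: str,
    job_id: int,
    depends_on: Optional[List[str]] = None,
    job_parameters: Optional[Dict[str, str]] = None,
) -> Dict[str, Any]:
    """Build one parent-job task that triggers an existing child job."""
    task: Dict[str, Any] = {
        "task_key": task_key,
        # Assumed Jobs API field for the "Run Job" task type.
        "run_job_task": {"job_id": job_id},
    }
    if job_parameters:
        # Parameters let the same child job serve different parent workflows.
        task["run_job_task"]["job_parameters"] = job_parameters
    if depends_on:
        # Each dependency refers to another task in the same parent job.
        task["depends_on"] = [{"task_key": key} for key in depends_on]
    return task


def build_parent_job(name: str, tasks: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Assemble the parent job definition from its tasks."""
    return {"name": name, "tasks": tasks}


# Hypothetical child job IDs; in practice these come from jobs that
# other teams have already defined and own.
INGEST_JOB_ID = 101
TRANSFORM_JOB_ID = 202

parent = build_parent_job(
    "nightly_parent_workflow",
    [
        run_job_task("ingest", INGEST_JOB_ID),
        run_job_task(
            "transform",
            TRANSFORM_JOB_ID,
            depends_on=["ingest"],
            job_parameters={"region": "emea"},
        ),
    ],
)

print(json.dumps(parent, indent=2))
```

The parent job here contains only two tasks, each delegating to a child job that can be tested and updated on its own. A payload like this could then be submitted through the Jobs API or rebuilt with different `job_parameters` for another parent workflow that reuses the same child jobs.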

To learn more about the different task types and how to configure them in the Databricks Workflows UI, please refer to the product docs.

Getting started

The new task type “Run Job” is now generally available in Databricks Workflows. To get started with Workflows: