Since 2016, OSS-Fuzz has been at the forefront of automated vulnerability discovery for open source projects. Vulnerability discovery is a vital part of keeping software supply chains secure, so our team is constantly working to improve OSS-Fuzz. For the past few months, we’ve tested whether we could improve OSS-Fuzz’s performance using Google’s Large Language Models (LLMs).

This blog post shares our experience of successfully applying the generative power of LLMs to improve the automated vulnerability detection technique known as fuzz testing (“fuzzing”). By using LLMs, we’re able to increase code coverage for critical projects using our OSS-Fuzz service without manually writing additional code. LLMs are a promising new way to scale security improvements across the more than 1,000 projects currently fuzzed by OSS-Fuzz and to remove barriers for future projects adopting fuzzing.

LLM-aided fuzzing

We created the OSS-Fuzz service to help open source developers find bugs in their code at scale, especially bugs that indicate security vulnerabilities. After more than six years of running OSS-Fuzz, we now support over 1,000 open source projects with continuous fuzzing, free of charge. As the Heartbleed vulnerability showed us, bugs that could easily have been found with automated fuzzing can have devastating effects. For most open source developers, setting up their own fuzzing solution would cost time and resources. With OSS-Fuzz, developers can integrate their project for free and get automated bug discovery at scale.

Since 2016, we’ve found over 10,000 security vulnerabilities and verified fixes for them. We also believe that OSS-Fuzz could find even more bugs with increased code coverage. On average, the fuzzing service covers only around 30% of an open source project’s code, which means that a large portion of our users’ code remains untouched by fuzzing. Recent research suggests that the most effective way to increase coverage is to add more fuzz targets for every project, one of the few parts of the fuzzing workflow that isn’t yet automated.

When an open source project onboards to OSS-Fuzz, maintainers make an initial time investment to integrate their project into the infrastructure and then add fuzz targets. Fuzz targets are functions that feed randomized input to the code under test. Writing them is a project-specific, manual process similar to writing unit tests. The ongoing security benefits of fuzzing make this initial investment worthwhile for maintainers, but writing a comprehensive set of fuzz targets is a lot to expect from project maintainers, who are often volunteers.
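To make “fuzz target” concrete: in OSS-Fuzz, a C/C++ fuzz target is typically a libFuzzer-style function, `LLVMFuzzerTestOneInput(const uint8_t *data, size_t size)`, which a coverage-guided engine calls repeatedly with mutated inputs. The minimal Python sketch below only illustrates the shape of the idea: `parse_key_value` is a hypothetical function under test, and `run_random_fuzzing` is a naive random-input driver standing in for a real engine such as libFuzzer (unlike a real engine, it uses no coverage feedback).

```python
import random


def parse_key_value(data: bytes) -> dict:
    """Hypothetical code under test: parses b"key=value;key2=value2"."""
    result = {}
    for pair in data.split(b";"):
        if not pair:
            continue
        key, sep, value = pair.partition(b"=")
        if not sep:
            raise ValueError("missing '='")
        result[key] = value
    return result


def fuzz_target(data: bytes) -> None:
    """Fuzz target: feed one input to the code under test.

    ValueError is the parser's documented rejection of malformed input, so it
    is swallowed; any other exception escapes and would count as a finding.
    """
    try:
        parse_key_value(data)
    except ValueError:
        pass


def run_random_fuzzing(target, iterations: int, seed: int = 0) -> int:
    """Naive driver: call the target with random byte strings (no coverage guidance)."""
    rng = random.Random(seed)
    for _ in range(iterations):
        size = rng.randint(0, 32)
        data = bytes(rng.randrange(256) for _ in range(size))
        target(data)
    return iterations


if __name__ == "__main__":
    run_random_fuzzing(fuzz_target, iterations=10_000)
    print("no unexpected exceptions")
```

Like a unit test, the target encodes project-specific knowledge: here, that `ValueError` is an expected rejection of bad input, so only other exceptions are treated as bugs.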

But what if LLMs could write additional fuzz targets for maintainers?

“Hey LLM, fuzz this project for me”

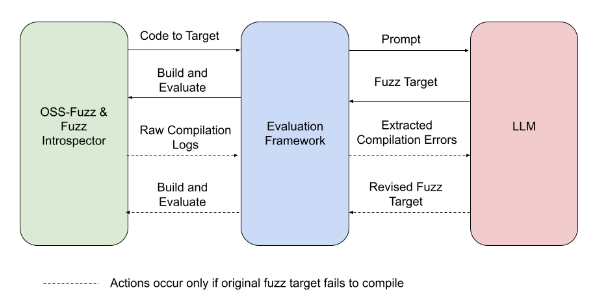

To find out whether an LLM could successfully write new fuzz targets, we built an evaluation framework that connects OSS-Fuzz to the LLM, conducts the experiment, and evaluates the results. The steps look like this:

- OSS-Fuzz’s Fuzz Introspector tool identifies an under-fuzzed, high-potential portion of the sample project’s code and passes that code to the evaluation framework.
- The evaluation framework creates a prompt that the LLM uses to write the new fuzz target. The prompt includes project-specific information.
- The evaluation framework takes the fuzz target generated by the LLM and runs the new target.
- The evaluation framework observes the run for any change in code coverage.
- If the fuzz target fails to compile, the evaluation framework prompts the LLM to write a revised fuzz target that addresses the compilation errors.

Experiment overview: The experiment pictured above is a fully automated process, from identifying target code to evaluating the change in code coverage.
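The loop that the steps above describe can be sketched in a few lines of Python. This is a hypothetical illustration, not the framework’s code: `llm`, `build`, and `run_and_measure` are placeholder callables that a real setup would back with an LLM API, the project’s OSS-Fuzz build, and a coverage report; `build_prompt` is an invented template; target selection (the first step) is assumed to have already produced `function_signature`; and the limit of three fix attempts is an arbitrary choice.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class BuildResult:
    ok: bool
    errors: str = ""


@dataclass
class TrialResult:
    fuzz_target: Optional[str]  # source of the last target the LLM produced
    compiled: bool
    fix_attempts: int
    coverage_gain: float        # percentage points; 0.0 if it never compiled


def build_prompt(project: str, function_signature: str) -> str:
    """Hypothetical prompt template carrying project-specific context."""
    return (
        f"Write a libFuzzer fuzz target for the {project} project that "
        f"exercises `{function_signature}`. Return only C++ source code."
    )


def evaluate_target(
    project: str,
    function_signature: str,
    baseline_coverage: float,
    llm: Callable[[str], str],
    build: Callable[[str], BuildResult],
    run_and_measure: Callable[[str], float],
    max_fix_attempts: int = 3,
) -> TrialResult:
    # Ask the LLM for a first fuzz target.
    prompt = build_prompt(project, function_signature)
    target = llm(prompt)
    result = build(target)
    attempts = 0
    # On a failed build, feed the compiler errors back and ask for a revision.
    while not result.ok and attempts < max_fix_attempts:
        attempts += 1
        fix_prompt = (
            f"{prompt}\n\nThis fuzz target:\n{target}\n"
            f"failed to compile with:\n{result.errors}\nPlease fix it."
        )
        target = llm(fix_prompt)
        result = build(target)
    if not result.ok:
        return TrialResult(target, False, attempts, 0.0)
    # Run the new target and compare its coverage with the baseline.
    new_coverage = run_and_measure(target)
    return TrialResult(target, True, attempts, new_coverage - baseline_coverage)
```

Passing the LLM, build, and coverage steps in as functions keeps the retry logic testable with fakes and makes it easy to swap models or build systems.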

At first, the code generated from our prompts wouldn’t compile; however, after several rounds of prompt engineering and trying out the new fuzz targets, we saw projects gain between 1.5% and 31% code coverage. One of our sample projects, tinyxml2, went from 38% to 69% line coverage without any intervention from our team. The case of tinyxml2 showed us that once LLM-generated fuzz targets are added, the majority of the project’s code is covered.

Example fuzz targets for tinyxml2: Each of the five fuzz targets shown exercises a different part of the code and adds to the overall coverage improvement.
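Coverage gains like these read most naturally as percentage points of line coverage: tinyxml2 moving from 38% to 69% is a gain of 31 points. Because each new target exercises a different part of the code, overall coverage is measured over the union of lines reached by all targets, not summed per target. The sketch below uses made-up line sets purely to illustrate that arithmetic; real OSS-Fuzz coverage reports come from compiler coverage instrumentation, not code like this.

```python
from __future__ import annotations


def line_coverage(covered_lines: set[int], total_lines: int) -> float:
    """Percentage of instrumented lines executed at least once."""
    return 100.0 * len(covered_lines) / total_lines


def combined_coverage(per_target: list[set[int]], total_lines: int) -> float:
    """Overall coverage across several fuzz targets.

    A line reached by two targets is counted once, so a new target only
    raises the total if it reaches lines no existing target covers.
    """
    covered: set[int] = set().union(*per_target)
    return line_coverage(covered, total_lines)


# Toy project with 10 instrumented lines (hypothetical numbers).
existing = [{1, 2, 3, 4}]
new_targets = [{3, 4, 5, 6}, {7, 8}]

before = combined_coverage(existing, 10)               # 40.0
after = combined_coverage(existing + new_targets, 10)  # 80.0
gain_points = after - before                           # 40.0
```

Note that the first new target adds only two new lines (5 and 6), because lines 3 and 4 were already covered.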

Replicating tinyxml2’s results manually would have required at least a day’s worth of work, which would add up to several years of work to manually cover all OSS-Fuzz projects. Given tinyxml2’s promising results, we want to bring this approach into production and extend similar automated coverage to other OSS-Fuzz projects.
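The “several years” estimate follows from simple arithmetic. Taking at least one day per project for the more than 1,000 projects OSS-Fuzz fuzzes, and assuming roughly 250 working days per year (our assumption, not a figure from the experiment):

```latex
T \;\geq\; 1{,}000\ \text{projects} \times 1\ \tfrac{\text{day}}{\text{project}}
  \;=\; 1{,}000\ \text{person-days}
  \;=\; \tfrac{1{,}000}{250}\ \text{person-years}
  \;=\; 4\ \text{person-years}
```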

Additionally, in the OpenSSL project, our LLM was able to automatically generate a working target that rediscovered CVE-2022-3602, which was in an area of code that previously had no fuzzing coverage. Although this is not a new vulnerability, it suggests that as code coverage increases, we will find more vulnerabilities that fuzzing currently misses.

Learn more about our results through our example prompts and outputs or through our experiment report.

The goal: fully automated fuzzing

In the next few months, we’ll open source our evaluation framework so that researchers can test their own automatic fuzz target generation. We’ll continue to optimize our use of LLMs for fuzz target generation through more model fine-tuning, prompt engineering, and improvements to our infrastructure. We’re also collaborating closely with the Assured OSS team on this research in order to secure even more of the open source software used by Google Cloud customers.

Our longer-term goals include:

- Adding LLM fuzz target generation as a fully integrated feature in OSS-Fuzz, with continuous generation of new targets for OSS-Fuzz projects and zero manual involvement.
- Extending support from C/C++ projects to additional language ecosystems, such as Python and Java.
- Automating the process of onboarding a project to OSS-Fuzz, eliminating any need to write even the initial fuzz targets.

We’re working toward a future of personalized vulnerability detection with little manual effort from developers. With the addition of LLM-generated fuzz targets, OSS-Fuzz can help improve open source security for everyone.