Cloudflare announced on May 15, 2023, a new suite of zero-trust security tools that let companies take advantage of AI technologies while mitigating the risks. The company integrated the new technologies to expand its existing Cloudflare One product, a secure access service edge (SASE) zero-trust network-as-a-service platform.

The Cloudflare One platform’s new tools and features are Cloudflare Gateway, service tokens, Cloudflare Tunnel, Cloudflare Data Loss Prevention and Cloudflare’s cloud access security broker.

“Enterprises and small teams alike share a common concern: They want to use these AI tools without also creating a data loss incident,” Sam Rhea, the vice president of product at Cloudflare, told TechRepublic.

He explained that AI innovations are more valuable to companies when they help users solve unique problems. “But that often involves the potentially sensitive context or data of that problem,” Rhea added.

What’s new in Cloudflare One: AI security tools and features

With the new suite of AI security tools, Cloudflare One now allows teams of any size to use AI tools safely without management headaches or performance challenges. The tools are designed to help companies gain visibility into AI, measure AI tools’ usage, prevent data loss and manage integrations.

Cloudflare Gateway

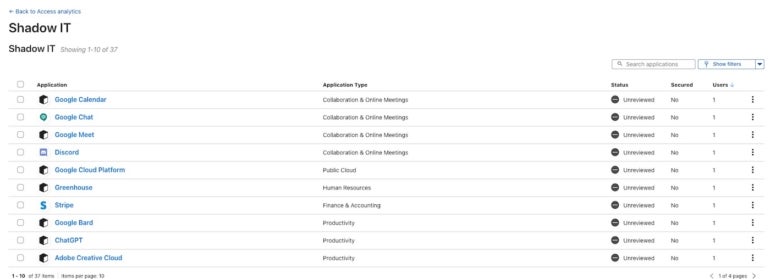

With Cloudflare Gateway, companies can visualize all the AI apps and services employees are experimenting with. Software budget decision-makers can use that visibility to make more effective software license purchases.

In addition, the tools give administrators critical privacy and security information, such as internet traffic and threat intelligence visibility, network policies, open internet privacy exposure risks and individual devices’ traffic (Figure A).

Figure A

Service tokens

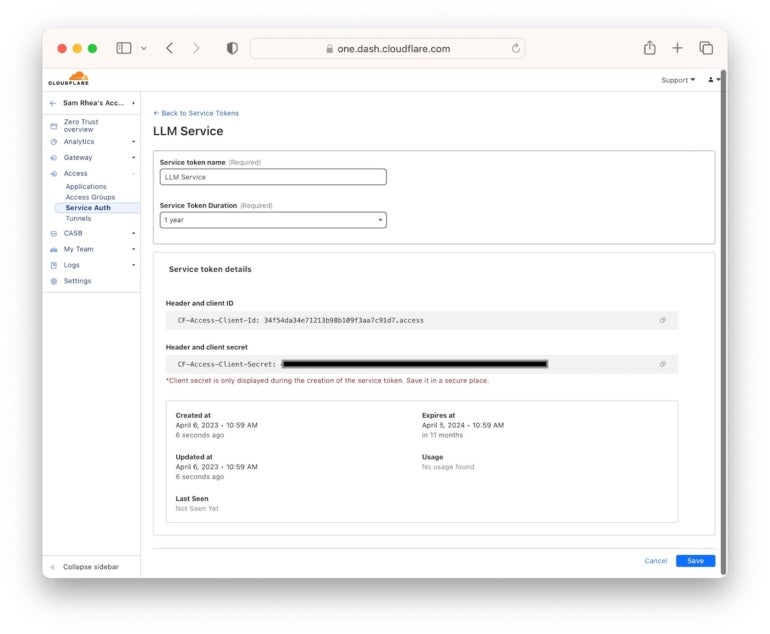

Some companies have realized that, to make generative AI more efficient and accurate, they must share training data with the AI and grant plugin access to the AI service. To let companies connect these AI models with their data, Cloudflare developed service tokens.

Service tokens give administrators a clear log of all API requests and full control over the specific services that can access AI training data (Figure B). They also allow administrators to revoke tokens with a single click when building ChatGPT plugins for internal and external use.

Figure B

Once service tokens are created, administrators can add policies that can, for example, verify the service token, country, IP address or an mTLS certificate. Policies can also require users to authenticate, for example by completing an MFA prompt before accessing sensitive training data or services.
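To make the idea of layered policy checks concrete, here is a minimal Python sketch of how a proxy might evaluate a request against such a policy. It is purely illustrative and not Cloudflare’s implementation or API: the `RequestContext` and `AccessPolicy` types, their field names and the sample token ID are all hypothetical, and the mTLS check is left out for brevity.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class RequestContext:
    """Hypothetical attributes a proxy might know about an incoming request."""
    token_id: str
    country: str
    source_ip: str
    mfa_completed: bool = False


@dataclass(frozen=True)
class AccessPolicy:
    """Hypothetical policy: every configured condition must hold."""
    allowed_token_ids: frozenset
    allowed_countries: frozenset
    allowed_networks: tuple
    require_mfa: bool = False


def is_allowed(policy: AccessPolicy, request: RequestContext) -> bool:
    """Return True only if the request satisfies every rule in the policy."""
    if request.token_id not in policy.allowed_token_ids:
        return False  # unknown or revoked token
    if request.country not in policy.allowed_countries:
        return False
    address = ip_address(request.source_ip)
    if not any(address in ip_network(net) for net in policy.allowed_networks):
        return False
    if policy.require_mfa and not request.mfa_completed:
        return False
    return True


# Example policy: one token, two countries, one documentation-range network.
policy = AccessPolicy(
    allowed_token_ids=frozenset({"plugin-token-1"}),
    allowed_countries=frozenset({"US", "PT"}),
    allowed_networks=("203.0.113.0/24",),
)

print(is_allowed(policy, RequestContext("plugin-token-1", "US", "203.0.113.7")))
print(is_allowed(policy, RequestContext("revoked-token", "US", "203.0.113.7")))
```

In this sketch, revoking a token amounts to removing its ID from `allowed_token_ids`, after which every request that presents it is rejected.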

Cloudflare Tunnel

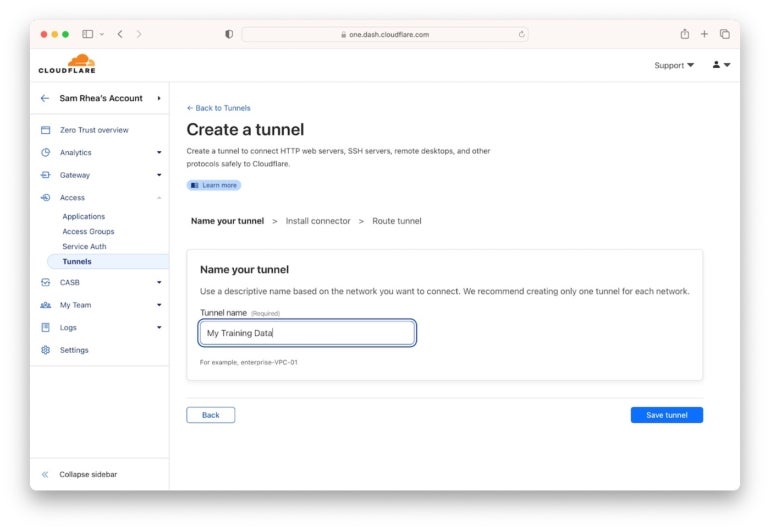

Cloudflare Tunnel allows teams to connect AI tools with their infrastructure without opening holes in their firewalls. The tool creates an encrypted, outbound-only connection to Cloudflare’s network and checks every request against the configured access rules (Figure C).

Figure C

Cloudflare Data Loss Prevention

While administrators can visualize, configure access to, secure, block or allow AI services using security and privacy tools, human error can still play a role in data loss, data leaks or privacy breaches. For example, employees may accidentally overshare sensitive data with AI models.

Cloudflare Data Loss Prevention closes the human gap with pre-configured options that can check for sensitive data (e.g., Social Security numbers and credit card numbers), run custom scans, identify patterns based on data configurations for a specific team and set limitations for specific projects.
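As a rough illustration of what pattern-based checks like these involve, the Python sketch below scans a piece of text for strings shaped like U.S. Social Security numbers and for credit card numbers that pass the Luhn checksum. It is a simplified assumption of how such detection can work, not Cloudflare’s DLP engine; the function names and regular expressions are hypothetical.

```python
import re

# Simplified, hypothetical detection patterns for illustration only.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def passes_luhn(number: str) -> bool:
    """Validate the digits in `number` with the Luhn checksum."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    total = 0
    for index, digit in enumerate(reversed(digits)):
        if index % 2 == 1:  # double every second digit from the right
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return total % 10 == 0


def scan_prompt(text: str) -> dict:
    """Return SSN-shaped strings and Luhn-valid card numbers found in `text`."""
    ssns = SSN_PATTERN.findall(text)
    cards = [
        match.group(0)
        for match in CARD_CANDIDATE.finditer(text)
        if passes_luhn(match.group(0))
    ]
    return {"ssn": ssns, "credit_card": cards}


findings = scan_prompt("Card 4111 1111 1111 1111, SSN 123-45-6789")
print(findings)
```

The Luhn check is what keeps an arbitrary 16-digit order number from being flagged as a card number; a production system would add further context checks to reduce false positives.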

Cloudflare’s cloud access security broker

In a recent blog post, Cloudflare explained that new generative AI plugins such as those offered by ChatGPT provide many benefits but can also lead to unwanted access to data. Misconfiguration of these applications can cause security violations.

Cloudflare’s cloud access security broker (CASB) is a new feature that gives enterprises comprehensive visibility and control over SaaS apps. It scans SaaS applications for potential issues such as misconfigurations and alerts companies if data is accidentally made public online. Cloudflare is working on new CASB integrations, which will be able to check for misconfigurations on new popular AI services such as Microsoft’s Bing, Google’s Bard or AWS Bedrock.

The global SASE and SSE market and its leaders

Secure access service edge (SASE) and security service edge (SSE) solutions have become increasingly vital as companies have migrated to the cloud and to hybrid work models. When Cloudflare was recognized by Gartner for its SASE technology, the company explained the difference between the two acronyms in a press release: SASE services extend the definition of SSE to include managing the connectivity of secured traffic.

The global SASE market is poised to continue growing as new AI technologies develop and emerge. Gartner estimated that by 2025, 70% of organizations that implement agent-based zero-trust network access will choose either a SASE or a security service edge provider.

Gartner added that by 2026, 85% of organizations seeking to procure cloud access security broker, secure web gateway or zero-trust network access offerings will obtain them from a converged solution.

Cloudflare One, which was launched in 2020, was recently recognized as the only new vendor to be added to the 2023 Gartner Magic Quadrant for Security Service Edge. Cloudflare was identified as a niche player in the Magic Quadrant with a strong focus on network and zero trust. The company faces strong competition from leading companies, including Netskope, Skyhigh Security, Forcepoint, Lookout, Palo Alto Networks, Zscaler, Cisco, Broadcom and Iboss.

The benefits and the risks for companies using AI

Cloudflare One’s new features respond to the growing demand for AI security and privacy. Businesses want to be productive and innovative and to leverage generative AI applications, but they also want to keep data, cybersecurity and compliance in check with built-in controls over their data flow.

A recent KPMG survey found that most companies believe generative AI will significantly impact business; deployment, privacy and security challenges are top-of-mind concerns for executives.

About half (45%) of those surveyed believe AI can harm trust in their organizations if the appropriate risk management tools are not implemented. Additionally, 81% cite cybersecurity as a top risk, and 78% highlight data privacy threats emerging from the use of AI.

From Samsung to Verizon and JPMorgan Chase, the list of companies that have banned employees from using generative AI apps continues to grow as cases reveal that AI solutions can leak sensitive business data.

AI governance and compliance are also becoming increasingly complex as new laws like the European Artificial Intelligence Act gain momentum and countries strengthen their AI postures.

“We hear from customers concerned that their users will ‘overshare’ and inadvertently send too much information,” Rhea explained. “Or they’ll share sensitive information with the wrong AI tools and wind up causing a compliance incident.”

Despite the risks, the KPMG survey shows that executives still view new AI technologies as an opportunity to increase productivity (72%), change the way people work (65%) and encourage innovation (66%).

“AI holds incredible promise, but without proper guardrails, it can create significant risks for businesses,” Matthew Prince, the co-founder and chief executive officer of Cloudflare, said in the press release. “Cloudflare’s Zero Trust products are the first to provide the guardrails for AI tools, so businesses can take advantage of the opportunity AI unlocks while ensuring only the data they want to expose gets shared.”

Cloudflare’s swift response to AI

The company released its new suite of AI security tools at remarkable speed, even as the technology is still taking shape. Rhea talked about how the suite was developed, what the challenges were and whether the company is planning upgrades.

“Cloudflare’s Zero Trust tools build on the same network and technologies that power over 20% of the internet already through our first wave of products like our Content Delivery Network and Web Application Firewall,” Rhea said. “We can deploy services like data loss prevention (DLP) and secure web gateway (SWG) to our data centers around the world without needing to buy or provision new hardware.”

Rhea explained that the company can also reuse the expertise it has in existing, similar functions. For example, “proxying and filtering internet-bound traffic leaving a laptop has a lot of similarities to proxying and filtering traffic bound for a destination behind our reverse proxy.”

“As a result, we can ship entirely new products very quickly,” Rhea added. “Some products are newer; we launched the GA of our DLP solution roughly a year after we first started building. Others iterate and get better over time, like our Access control product that first launched in 2018. However, because it’s built on Cloudflare’s serverless computer architecture, it can evolve to add new features in days or weeks, not months or quarters.”

What’s next for Cloudflare in AI security

Cloudflare says it will continue to learn from the AI space as it develops. “We anticipate that some customers will want to monitor these tools and their usage with an additional layer of security where we can automatically remediate issues that we discover,” Rhea said.

The company also expects its customers to become more aware of where the data that AI tools use to operate is stored. Rhea added, “We plan to continue to deliver new features that make our network and its global presence ready to help customers keep data where it should reside.”

The challenges remain twofold for the company as it breaks into the AI security market: cybercriminals are becoming more sophisticated, and customers’ needs are shifting. “It’s a moving target, but we feel confident that we can continue to respond,” Rhea concluded.