(Michael Vi/Shutterstock)

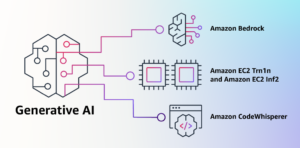

AWS has entered the red-hot realm of generative AI with the introduction of a suite of generative AI development tools. The cornerstone of these is Amazon Bedrock, a tool for building generative AI applications using pre-trained foundation models (FMs) accessible via an API. The models come from AI startups like AI21 Labs, Anthropic, and Stability AI, as well as from Amazon’s own Titan family of FMs.

Bedrock offers serverless integration with AWS tools and capabilities, enabling customers to find the right model for their needs, customize it with their data, and deploy it without managing costly infrastructure. Amazon states that the infrastructure supporting the Bedrock service will employ a mix of Amazon’s proprietary AI chips (AWS Trainium and AWS Inferentia) and GPUs from Nvidia.

AWS is positioning Bedrock as a way to democratize FMs, as training these large models can be prohibitively expensive for many companies. Working with pre-trained models allows businesses to build custom applications using their own data. AWS says customization is easy with Bedrock and requires only a few labeled data examples to fine-tune a model for a specific task.

Swami Sivasubramanian, VP of database, analytics, and machine learning at AWS, wrote about this “democratizing approach to generative AI” in a blog post announcing the new tools: “We work to take these technologies out of the realm of research and experiments and extend their availability far beyond a handful of startups and large, well-funded tech companies. That’s why today I’m excited to announce several new innovations that will make it easy and practical for our customers to use generative AI in their businesses.”

Amazon’s Titan FMs include a large language model for text generation tasks, as well as an embeddings model for building applications for personalized recommendations and search. The models have built-in safeguards to mitigate harmful content, including filters for violent or hateful speech.

Models provided by startups include the Jurassic-2 family of LLMs from AI21 Labs, which can generate text in French, Spanish, Italian, German, Portuguese, and Dutch. The Claude model from OpenAI competitor Anthropic is also part of Bedrock and can be used for conversational tasks. Stability AI’s text-to-image model, Stable Diffusion, is available for customers to generate images, art, logos, and designs.

AWS also announced the general availability of CodeWhisperer, an AI coding companion similar to GitHub’s Copilot, and has made it free for developers with no usage restrictions. A new CodeWhisperer professional tier for business users adds features such as single sign-on with AWS Identity and Access Management integration. CodeWhisperer generates code in real time, supports multiple languages, and can be accessed through various IDEs. Supported languages include SQL, Go, Rust, C, C++, and others.

Additionally, two new Elastic Compute Cloud instances have reached general availability (GA). The first is the Trn1n instance, powered by Trainium, which has 1600 Gbps of network bandwidth and 20% higher performance than Trn1 instances. The second is the Inf2 instance, powered by Inferentia2. AWS says Inf2 instances are optimized specifically for large-scale generative AI apps with models containing hundreds of billions of parameters, as they deliver up to 4x higher throughput and up to 10x lower latency compared to the prior-generation Inferentia-based instances. AWS claims this speed-up amounts to 40% better inference price performance.

Bedrock is available now in limited preview. One customer is Coda, maker of a collaborative document management platform: “As a longtime happy AWS customer, we’re excited about how Amazon Bedrock can bring quality, scalability, and performance to Coda AI. Since all our data is already on AWS, we are able to quickly incorporate generative AI using Bedrock, with all the security and privacy we need to protect our data built-in. With over tens of thousands of teams running on Coda, including large teams like Uber, the New York Times, and Square, reliability and scalability are really important,” said Shishir Mehrotra, co-founder and CEO of Coda, in Sivasubramanian’s blog announcement.
