Apr 14, 2023

(Nanowerk Spotlight) Phase change memory (PCM) is a type of non-volatile memory technology that stores data at the nanoscale by switching a specialized material between crystalline and amorphous phases. In the crystalline state, the material exhibits low electrical resistance, while in the amorphous state, it has high resistance. Applying different heating and rapid-cooling pulses switches the phase, allowing data to be written and read either as binary values (0s and 1s) or as continuous analog values based on the material's resistance.
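
To make the binary-versus-analog distinction concrete, here is a minimal Python sketch of how a single cell's measured resistance might be interpreted. The resistance levels, the 100 kΩ threshold, and the convention that the low-resistance crystalline state reads as 1 are illustrative assumptions, not values from the study; the analog reading simply normalizes the cell's conductance (1/R) into the range between the fully amorphous and fully crystalline states.

```python
# Hypothetical resistance levels (ohms), chosen for illustration only;
# real PCM cells vary with material, geometry and programming history.
R_CRYSTALLINE = 10e3   # low-resistance state (assumed to read as 1)
R_AMORPHOUS = 1e6      # high-resistance state (assumed to read as 0)


def read_binary(resistance_ohm, threshold_ohm=100e3):
    """Interpret a cell as one bit: below the (assumed) threshold -> 1, else 0."""
    return 1 if resistance_ohm < threshold_ohm else 0


def read_analog(resistance_ohm):
    """Map a resistance onto a continuous value in [0, 1] via conductance.

    0 corresponds to fully amorphous, 1 to fully crystalline; partially
    crystallized cells give intermediate values.
    """
    g = 1.0 / resistance_ohm
    g_min, g_max = 1.0 / R_AMORPHOUS, 1.0 / R_CRYSTALLINE
    value = (g - g_min) / (g_max - g_min)
    return min(max(value, 0.0), 1.0)  # clamp readings outside the window
```

A partially crystallized cell, for example one reading 50 kΩ under these assumed levels, lands between 0 and 1 on the analog scale while still reading as a single bit in binary mode.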

Phase change memory is an emerging technology with great potential for advancing analog in-memory computing, particularly in deep neural networks and neuromorphic computing. Various factors, such as resistance values, memory window, and resistance drift, affect the performance of PCM in these applications. Until now, it has been difficult for researchers to compare PCM devices for in-memory computing based solely on their individual device characteristics, which often involve trade-offs and correlations.

Another challenge is that analog in-memory computing can greatly increase the speed and reduce the power consumption of AI computing, but it can suffer from reduced accuracy due to imperfections in the analog memory devices.
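
The speed and power advantage comes from computing where the weights are stored. In a crossbar array, each cell's conductance G multiplies the input voltage V on its row (Ohm's law, I = G·V), and the currents along each column add up (Kirchhoff's current law), so a whole matrix-vector product happens in one analog step. The Python sketch below illustrates that idea and how device imperfections become computational error. The function name, the relative Gaussian noise model, and the noise level are assumptions for illustration; negative weights, which real arrays typically handle with pairs of devices, are left out for brevity.

```python
import random


def analog_mvm(conductances, voltages, noise_std=0.0, rng=None):
    """Crossbar-style multiply: column current j is sum_i G[i][j] * V[i].

    Ohm's law gives each cell's current G * V; Kirchhoff's current law sums
    those currents along a column. As an illustrative assumption, device
    imperfection is modeled as Gaussian noise on every conductance read,
    relative to the stored value (standard deviation noise_std).
    """
    rng = rng or random.Random()
    n_out = len(conductances[0])
    currents = []
    for j in range(n_out):
        total = 0.0
        for i, v in enumerate(voltages):
            g = conductances[i][j] * (1.0 + rng.gauss(0.0, noise_std))
            total += g * v
        currents.append(total)
    return currents


weights = [[1.0, 2.0], [3.0, 4.0]]
exact = analog_mvm(weights, [1.0, 1.0])                      # ideal devices
noisy = analog_mvm(weights, [1.0, 1.0], noise_std=0.05,
                   rng=random.Random(0))                      # imperfect devices
```

With `noise_std=0` the result matches an exact digital multiply; any nonzero noise shifts the column currents, which is the kind of accuracy loss described above.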

New research, published in Advanced Electronic Materials ("Optimization of Projected Phase Change Memory for Analog In-Memory Computing Inference"), addresses these issues by 1) extensively benchmarking PCM devices in large neural networks, providing valuable guidelines for optimizing these devices in the future, and 2) improving and optimizing analog memory devices made with phase change materials, ultimately enhancing accuracy for AI computing.

Ning Li, the first author of the study, who was working at IBM Research in Yorktown Heights and Albany at the time (he is now an Associate Professor at Lehigh University), and his IBM colleagues explain: "First, we found that many device characteristics can be tuned systematically using a liner layer introduced in our prior work. Second, we found a way to optimize these device characteristics from a system perspective using extensive system-level simulations." Together, these two advances enabled the team to identify the best devices.

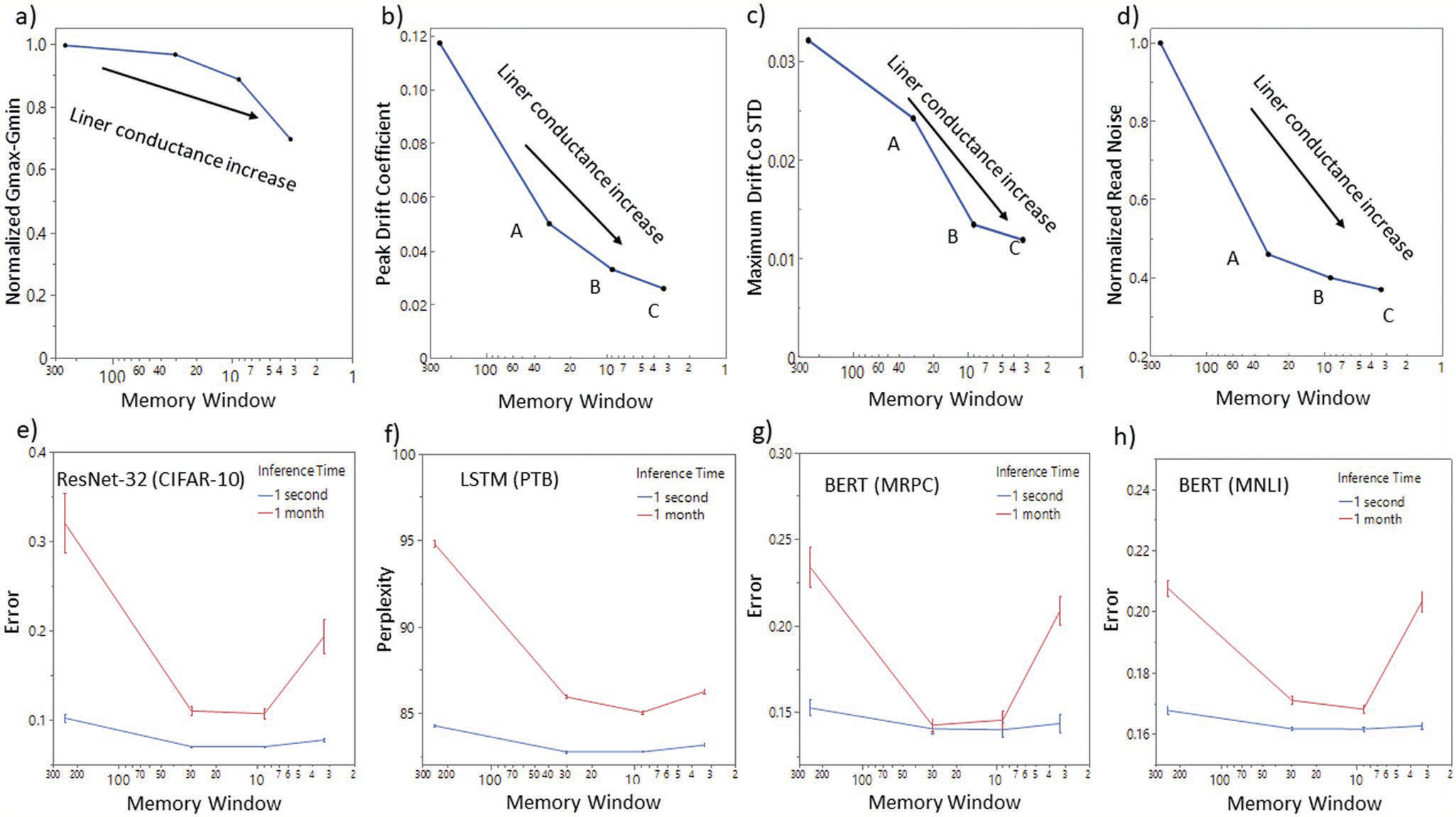

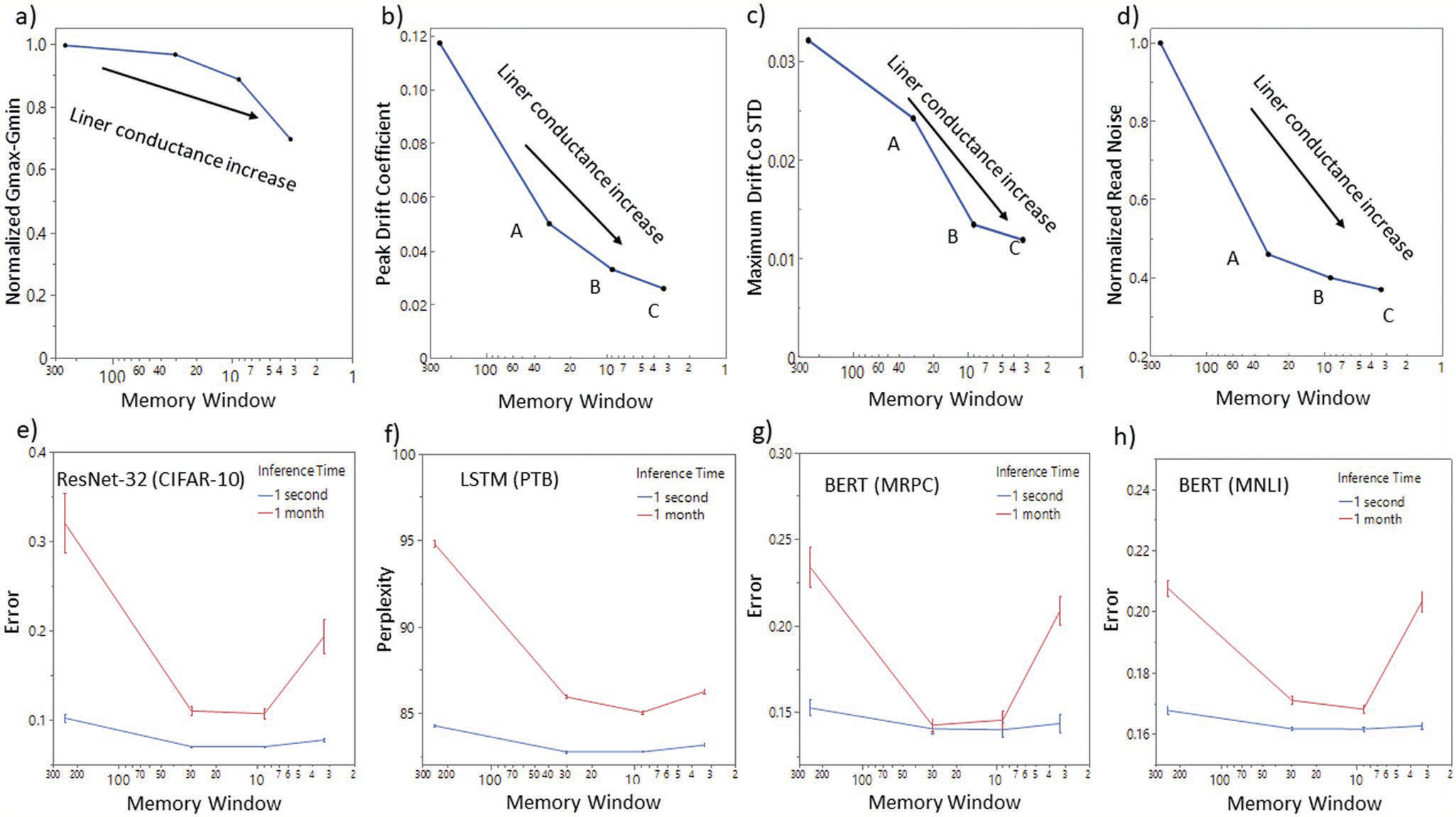

In this work, the team created models to represent the drift and noise behavior of PCM devices and used these models to assess how the devices perform in neural network inference. They evaluated large neural networks with tens of millions of weights, using PCM devices both with and without projection liners, and tested a variety of deep neural networks (DNNs) and datasets at multiple time-steps. (Weights are the parameters within a neural network that determine the strength of the connections between neurons; in PCM-based analog in-memory computing, the weights are stored as resistance values in the PCM devices. Projection liners are additional layers of a non-phase change material introduced into the PCM device structure.)

Measured characteristics of PCM devices and their impact on network accuracy as a function of PCM memory window: a) programming range Gmax–Gmin, b) peak drift coefficient, c) standard deviation of drift coefficient, d) normalized read noise, e) ResNet-32 (CIFAR-10) inference error in the short term (1 second) and long term (1 month) after programming, f) LSTM (PTB) inference error at 1 second and 1 month after programming, g) BERT (MRPC) inference error at 1 second and 1 month after programming, h) BERT (MNLI) inference error at 1 second and 1 month after programming. (Reprinted with permission by Wiley-VCH Verlag)
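
Drift of the kind characterized in panels b and c is often described with an empirical power law, G(t) = G0 × (t / t0)^(−ν), where ν is the drift coefficient. The Python sketch below assumes that widely used model, adds device-to-device variation in ν and relative Gaussian read noise, and compares reads at 1 second and 1 month. It illustrates the kind of drift and noise model described above, not the exact model from the paper; the reference time `T0` and all parameter values are hypothetical.

```python
import random

T0 = 1.0                     # assumed reference time after programming (s)
ONE_MONTH = 30 * 24 * 3600   # 2,592,000 s


def drifted_conductance(g0, t, nu):
    """Empirical power-law drift: G(t) = G0 * (t / T0) ** (-nu)."""
    return g0 * (t / T0) ** (-nu)


def read_weights(g_programmed, t, nu_mean, nu_std, read_noise_std, rng):
    """Return noisy conductance reads at time t (hypothetical model).

    Each device draws its own drift coefficient (device-to-device spread),
    then Gaussian read noise relative to the drifted value is applied.
    """
    reads = []
    for g0 in g_programmed:
        nu = max(0.0, rng.gauss(nu_mean, nu_std))  # in this model drift only lowers G
        g_t = drifted_conductance(g0, t, nu)
        reads.append(g_t * (1.0 + rng.gauss(0.0, read_noise_std)))
    return reads


programmed = [10.0, 20.0, 5.0]   # hypothetical conductances (arbitrary units)
at_one_month = read_weights(programmed, ONE_MONTH, 0.05, 0.01, 0.0,
                            random.Random(1))
```

With a hypothetical ν of 0.05, a device read one month after programming keeps a little under half of its initial conductance (about 0.48 × G0), while at t = t0 it is unchanged. Because ν varies from device to device, different weights drift by different amounts.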

The study finds that devices with projection liners perform well across various DNN types, including recurrent neural networks (RNNs), convolutional neural networks (CNNs), and transformer-based networks. The researchers also examined the impact of different device characteristics on network accuracy and identified a range of target device specifications for PCM with liners that could lead to further improvements.

Unlike previous reports on PCM devices for AI computing, this work ties device results to the end results of computing chips running large, useful deep neural networks. Dr. Li explains that PCM devices for in-memory computing are difficult to compare for AI applications using device characteristics alone. The study addresses this problem by offering extensive benchmarking of PCM devices in various networks under various weight-mapping conditions, along with guidelines for PCM device optimization.
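
One common way to map signed network weights onto PCM conductances is a differential pair: each weight uses two devices, and its value is proportional to the difference G+ − G−, scaled into the memory window Gmax − Gmin. The Python sketch below assumes that scheme with linear scaling and clipping; the function names are hypothetical, and the study's own weight-mapping conditions may differ.

```python
def map_weight(w, w_max, g_min, g_max):
    """Map a signed weight to a differential conductance pair (G_plus, G_minus).

    |w| / w_max is scaled linearly into the memory window [g_min, g_max];
    the sign decides which device of the pair carries the value, while the
    other device stays at g_min.
    """
    if w_max <= 0 or g_max <= g_min:
        raise ValueError("need w_max > 0 and g_max > g_min")
    frac = min(abs(w) / w_max, 1.0)   # clip weights outside [-w_max, w_max]
    g = g_min + frac * (g_max - g_min)
    return (g, g_min) if w >= 0 else (g_min, g)


def unmap_weight(g_plus, g_minus, w_max, g_min, g_max):
    """Recover the weight: w = (G_plus - G_minus) / (g_max - g_min) * w_max."""
    return (g_plus - g_minus) / (g_max - g_min) * w_max
```

Under this scheme, a wider memory window spreads the same weights across a larger conductance range, so if drift or read noise stays fixed in absolute terms, it corrupts the recovered weights proportionally less.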

By showing that device characteristics can be tuned continuously and that these characteristics are correlated with one another, the team made systematic optimization of the devices possible.

Using their optimization strategy, the researchers demonstrated much better accuracy both shortly after programming and over the long term. They significantly reduced the effects of PCM drift and noise on deep neural networks, improving both initial and long-term accuracy.

"Potential applications of our work include improved speed, reduced power, and reduced cost in language processing, image recognition, and even broader AI applications, such as ChatGPT," Li points out.

As a result of this work, the researchers envision that large neural network computation will become faster, greener, and cheaper. The next stages of their investigations include further optimizing PCM devices and implementing them in computer chips.

"The future direction for this research field is to enable real products that customers find useful," Li concludes. "Although analog systems use imperfect analog devices, they offer significant advantages in speed, power, and cost. The challenge lies in identifying suitable applications and enabling them."

By Michael Berger. Michael is the author of three books by the Royal Society of Chemistry: Nano-Society: Pushing the Boundaries of Technology, Nanotechnology: The Future is Tiny, and Nanoengineering: The Skills and Tools Making Technology Invisible.

Copyright © Nanowerk
