Last Updated on August 6, 2022

Data preparation is required when working with neural networks and deep learning models. Increasingly, data augmentation is also required on more complex object recognition tasks.

In this post, you will discover how to use data preparation and data augmentation with your image datasets when developing and evaluating deep learning models in Python with Keras.

After reading this post, you will know:

- About the image augmentation API provided by Keras and how to use it with your models

- How to perform feature standardization

- How to perform ZCA whitening of your images

- How to augment data with random rotations, shifts, and flips

- How to save augmented image data to disk


Let's get started.

- Jun/2016: First published

- Update Aug/2016: The examples in this post were updated for the latest Keras API. The datagen.next() function was removed

- Update Oct/2016: Updated for Keras 1.1.0, TensorFlow 0.10.0 and scikit-learn v0.18

- Update Jan/2017: Updated for Keras 1.2.0 and TensorFlow 0.12.1

- Update Mar/2017: Updated for Keras 2.0.2, TensorFlow 1.0.1 and Theano 0.9.0

- Update Sep/2019: Updated for Keras 2.2.5 API

- Update Jul/2022: Updated for TensorFlow 2.x API with a workaround for the feature standardization issue

For an extended tutorial on the ImageDataGenerator for image data augmentation, see:

Keras Image Augmentation API

Like the rest of Keras, the image augmentation API is simple and powerful.

Keras provides the ImageDataGenerator class that defines the configuration for image data preparation and augmentation. This includes capabilities such as:

- Sample-wise standardization

- Feature-wise standardization

- ZCA whitening

- Random rotation, shifts, shear, and flips

- Dimension reordering

- Save augmented images to disk

An augmented image generator can be created as follows:

```python
from tensorflow.keras.preprocessing.image import ImageDataGenerator
datagen = ImageDataGenerator()
```

Rather than performing the operations on your entire image dataset in memory, the API is designed to be iterated by the deep learning model fitting process, creating augmented image data for you just in time. This reduces your memory overhead but adds some additional time cost during model training.
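The just-in-time idea can be illustrated with a plain Python generator. This is a minimal sketch under stated assumptions, not how Keras implements it: `augment_batches` and `flip` are hypothetical names, `X` is assumed to be a NumPy array of 2D images, and `transform` is any function that takes an image and a random generator. Only the images in the requested batch are copied and transformed, and the generator loops over the data indefinitely, reshuffling each epoch, much as the Keras iterator keeps producing batches until the caller stops asking (which is why the examples in this post break out of their loops).

```python
import numpy as np

def augment_batches(X, y, batch_size, transform, seed=0):
    """Yield (X_batch, y_batch) pairs forever, transforming images only on request."""
    rng = np.random.default_rng(seed)
    n = len(X)
    while True:  # one pass of this outer loop is one epoch
        order = rng.permutation(n)  # reshuffle at the start of every epoch
        for start in range(0, n, batch_size):
            idx = order[start:start + batch_size]
            # Only this batch is transformed; the rest of X is never copied
            X_batch = np.stack([transform(X[i], rng) for i in idx])
            yield X_batch, y[idx]


def flip(img, rng):
    """Hypothetical example transform: flip left-right with probability 0.5."""
    return img[:, ::-1] if rng.random() < 0.5 else img


# Usage: ten tiny 2x3 "images" served in batches of four
X = np.arange(10 * 2 * 3).reshape(10, 2, 3)
y = np.arange(10)
batches = augment_batches(X, y, batch_size=4, transform=flip)
X_batch, y_batch = next(batches)
print(X_batch.shape, y_batch)
```

Memory use stays proportional to one batch rather than the whole augmented dataset, at the cost of recomputing the transforms every epoch.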

After you have created and configured your ImageDataGenerator, you must fit it on your data. This will calculate any statistics required to actually perform the transforms to your image data, which is only necessary when featurewise_center, featurewise_std_normalization, or zca_whitening is enabled. You can do this by calling the fit() function on the data generator and passing it your training dataset.

The data generator works through an iterator that returns batches of image samples when requested. Calling the flow() function with your data and a batch size returns this iterator, and Python's built-in next() retrieves a single batch from it.

```python
X_batch, y_batch = next(datagen.flow(X_train, y_train, batch_size=32))
```

Finally, you can make use of the data generator. In the TensorFlow 2.x API, instead of calling the older fit_generator() function (now deprecated), you pass the iterator returned by flow() directly to your model's fit() function, along with the desired length of an epoch (steps_per_epoch, counted in batches) and the total number of epochs on which to train.

```python
model.fit(datagen.flow(X_train, y_train, batch_size=32), steps_per_epoch=len(X_train) // 32, epochs=100)
```

You can learn more about the Keras image data generator API in the Keras documentation.

Point of Comparison for Image Augmentation

Now that you know how the image augmentation API in Keras works, let's look at some examples.

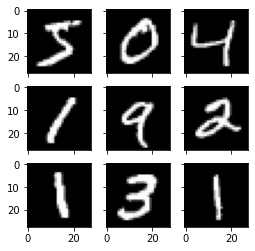

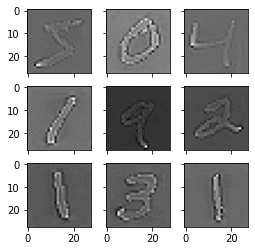

We will use the MNIST handwritten digit recognition task in these examples. To begin with, let's take a look at the first nine images in the training dataset.

```python
# Plot images
from tensorflow.keras.datasets import mnist
import matplotlib.pyplot as plt
# load data
(X_train, y_train), (X_test, y_test) = mnist.load_data()
# create a grid of 3x3 images
fig, ax = plt.subplots(3, 3, sharex=True, sharey=True, figsize=(4,4))
for i in range(3):
    for j in range(3):
        ax[i][j].imshow(X_train[i*3+j], cmap="gray")
# show the plot
plt.show()
```

Running this example provides the following image that you can use as a point of comparison with the image preparation and augmentation in the examples below.

Example MNIST images

Feature Standardization

It is also possible to standardize pixel values across the entire dataset. This is called feature standardization and mirrors the type of standardization often performed for each column in a tabular dataset.
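To make the tabular analogy concrete, here is a minimal NumPy sketch (not Keras code) that treats every pixel position as one column: it computes a separate mean and standard deviation per pixel across all samples. For contrast, it also shows the alternative that pools every pixel of every image into a single mean and standard deviation. The names `per_pixel_standardize` and `global_standardize` are hypothetical, and the small `eps` is an assumption added to avoid dividing by zero for pixels that never vary, such as the blank corners of MNIST digits.

```python
import numpy as np

def per_pixel_standardize(X, eps=1e-6):
    """Standardize each pixel position separately across all samples.

    X has shape (samples, height, width); every (row, col) position is treated
    like one column of a tabular dataset.
    """
    X = X.astype("float64")
    mean = X.mean(axis=0)  # shape (height, width): one mean per pixel
    std = X.std(axis=0)    # shape (height, width): one std per pixel
    return (X - mean) / (std + eps), mean, std

def global_standardize(X, eps=1e-6):
    """For contrast: one mean and one std pooled over every pixel of every image."""
    X = X.astype("float64")
    return (X - X.mean()) / (X.std() + eps)


rng = np.random.default_rng(0)
X = rng.integers(0, 256, size=(100, 4, 4))  # stand-in for 100 tiny grayscale images
Z, mean, std = per_pixel_standardize(X)
print(mean.shape, Z.mean(axis=0).round(6).max(), Z.std(axis=0).round(3).min())
```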

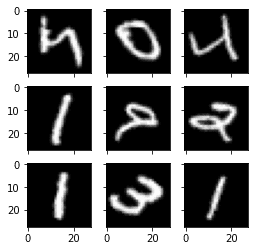

You can perform feature standardization by setting the featurewise_center and featurewise_std_normalization arguments to True on the ImageDataGenerator class. These are set to False by default. However, the recent version of Keras has a bug in feature standardization: the mean and standard deviation are calculated across all pixels, giving a single value per channel rather than one per pixel. If you use the fit() function from the ImageDataGenerator class, you will see an image similar to the one above:

```python
# Standardize images across the dataset, mean=0, stdev=1
from tensorflow.keras.datasets import mnist
from tensorflow.keras.preprocessing.image import ImageDataGenerator
import matplotlib.pyplot as plt
# load data
(X_train, y_train), (X_test, y_test) = mnist.load_data()
# reshape to be [samples][height][width][channels]
X_train = X_train.reshape((X_train.shape[0], 28, 28, 1))
X_test = X_test.reshape((X_test.shape[0], 28, 28, 1))
# convert from int to float
X_train = X_train.astype('float32')
X_test = X_test.astype('float32')
# define data preparation
datagen = ImageDataGenerator(featurewise_center=True, featurewise_std_normalization=True)
# fit parameters from data
datagen.fit(X_train)
# configure batch size and retrieve one batch of images
for X_batch, y_batch in datagen.flow(X_train, y_train, batch_size=9, shuffle=False):
    print(X_batch.min(), X_batch.mean(), X_batch.max())
    # create a grid of 3x3 images
    fig, ax = plt.subplots(3, 3, sharex=True, sharey=True, figsize=(4,4))
    for i in range(3):
        for j in range(3):
            ax[i][j].imshow(X_batch[i*3+j].reshape(28,28), cmap="gray")
    # show the plot
    plt.show()
    break
```

For example, the minimum, mean, and maximum values from the batch printed above are:

```
-0.42407447 -0.04093817 2.8215446
```

And the image displayed is as follows:

Image from feature-wise standardization

The workaround is to compute the feature standardization statistics manually. Each pixel should have its own mean and standard deviation, computed across all samples but independently of the other pixels in the same sample. You just need to replace the call to fit() with your own computation, assigning the results to the generator's mean and std attributes:

```python
# Standardize images across the dataset, every pixel has mean=0, stdev=1
from tensorflow.keras.datasets import mnist
from tensorflow.keras.preprocessing.image import ImageDataGenerator
import matplotlib.pyplot as plt
# load data
(X_train, y_train), (X_test, y_test) = mnist.load_data()
# reshape to be [samples][height][width][channels]
X_train = X_train.reshape((X_train.shape[0], 28, 28, 1))
X_test = X_test.reshape((X_test.shape[0], 28, 28, 1))
# convert from int to float
X_train = X_train.astype('float32')
X_test = X_test.astype('float32')
# define data preparation
datagen = ImageDataGenerator(featurewise_center=True, featurewise_std_normalization=True)
# fit parameters from data: one mean and one std per pixel, computed across samples
datagen.mean = X_train.mean(axis=0)
datagen.std = X_train.std(axis=0)
# configure batch size and retrieve one batch of images
for X_batch, y_batch in datagen.flow(X_train, y_train, batch_size=9, shuffle=False):
    print(X_batch.min(), X_batch.mean(), X_batch.max())
    # create a grid of 3x3 images
    fig, ax = plt.subplots(3, 3, sharex=True, sharey=True, figsize=(4,4))
    for i in range(3):
        for j in range(3):
            ax[i][j].imshow(X_batch[i*3+j].reshape(28,28), cmap="gray")
    # show the plot
    plt.show()
    break
```

The minimum, mean, and maximum as printed now have a wider range:

```
-1.2742625 -0.028436039 17.46127
```

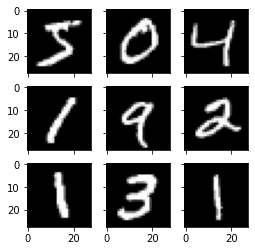

Running this example, you can see that the effect is different, seemingly darkening and lightening different digits.

Standardized feature MNIST images

ZCA Whitening

A whitening transform of an image is a linear algebraic operation that reduces the redundancy in the matrix of pixel images.

Less redundancy in the image is intended to better highlight the structures and features in the image to the learning algorithm.

Typically, image whitening is performed using the Principal Component Analysis (PCA) technique. More recently, an alternative called ZCA (learn more in Appendix A of this tech report) shows better results in transformed images that keep all of the original dimensions. And unlike PCA, the resulting transformed images still look like their originals. Precisely, whitening transforms the images so that, across the dataset, each element of the flattened image vector has zero mean and unit standard deviation and is uncorrelated with every other element, like a white noise vector.
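The linear algebra behind ZCA can be sketched in a few lines of NumPy. This is an illustrative sketch, not the Keras implementation: it assumes the images are already flattened into a `(samples, features)` array, and `zca_whitening_matrix` and its `eps` regularizer are hypothetical names. The covariance matrix is decomposed as U diag(s) U^T, and the whitening matrix is W = U diag(1/sqrt(s + eps)) U^T. Because W rotates back into the original pixel basis after rescaling, the output keeps the original dimensions and stays close to the input, which is what distinguishes ZCA from PCA whitening.

```python
import numpy as np

def zca_whitening_matrix(X, eps=1e-5):
    """Return (mean, W) such that (X - mean) @ W has approximately identity covariance.

    X is a (samples, features) array, for example flattened images.
    """
    mean = X.mean(axis=0)
    Xc = X - mean                               # zero-center every feature
    cov = Xc.T @ Xc / Xc.shape[0]               # (features, features) covariance
    eigvals, eigvecs = np.linalg.eigh(cov)      # cov = U diag(s) U^T
    # Rescale each principal direction to unit variance, then rotate back (ZCA)
    W = eigvecs @ np.diag(1.0 / np.sqrt(eigvals + eps)) @ eigvecs.T
    return mean, W


# Usage: whiten correlated synthetic data (a stand-in for flattened images)
rng = np.random.default_rng(0)
mixing = np.array([[2.0, 0.0, 0.0], [1.0, 1.0, 0.0], [0.5, 0.5, 1.0]])
X = rng.normal(size=(5000, 3)) @ mixing
mean, W = zca_whitening_matrix(X, eps=1e-12)
Z = (X - mean) @ W
print(np.round(Z.T @ Z / len(Z), 3))  # approximately the identity matrix
```

On real images, a larger `eps` keeps near-zero eigenvalues (for example, from pixels that never change) from being amplified into noise.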

You can perform a ZCA whitening transform by setting the zca_whitening argument to True. But due to the same issue as feature standardization, you must first zero-center your input data separately:

```python
# ZCA Whitening
from tensorflow.keras.datasets import mnist
from tensorflow.keras.preprocessing.image import ImageDataGenerator
import matplotlib.pyplot as plt
# load data
(X_train, y_train), (X_test, y_test) = mnist.load_data()
# reshape to be [samples][height][width][channels]
X_train = X_train.reshape((X_train.shape[0], 28, 28, 1))
X_test = X_test.reshape((X_test.shape[0], 28, 28, 1))
# convert from int to float
X_train = X_train.astype('float32')
X_test = X_test.astype('float32')
# define data preparation
datagen = ImageDataGenerator(featurewise_center=True, featurewise_std_normalization=True, zca_whitening=True)
# zero-center every pixel manually, then fit parameters from the centered data
X_mean = X_train.mean(axis=0)
X_centered = X_train - X_mean
datagen.fit(X_centered)
# configure batch size and retrieve one batch of images
for X_batch, y_batch in datagen.flow(X_centered, y_train, batch_size=9, shuffle=False):
    print(X_batch.min(), X_batch.mean(), X_batch.max())
    # create a grid of 3x3 images
    fig, ax = plt.subplots(3, 3, sharex=True, sharey=True, figsize=(4,4))
    for i in range(3):
        for j in range(3):
            ax[i][j].imshow(X_batch[i*3+j].reshape(28,28), cmap="gray")
    # show the plot
    plt.show()
    break
```

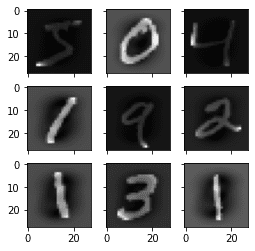

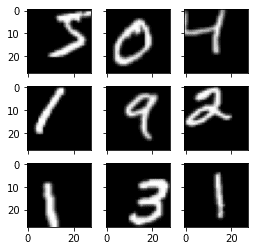

Running the example, you can see the same general structure in the images and how the outline of each digit has been highlighted.

ZCA whitening MNIST images

Random Rotations

Sometimes images in your sample data may have varying rotations in the scene.

You can train your model to better handle rotations of images by artificially and randomly rotating images from your dataset during training.

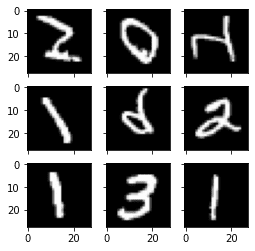

The example below creates random rotations of the MNIST digits of up to 90 degrees by setting the rotation_range argument.

```python
# Random Rotations
from tensorflow.keras.datasets import mnist
from tensorflow.keras.preprocessing.image import ImageDataGenerator
import matplotlib.pyplot as plt
# load data
(X_train, y_train), (X_test, y_test) = mnist.load_data()
# reshape to be [samples][height][width][channels]
X_train = X_train.reshape((X_train.shape[0], 28, 28, 1))
X_test = X_test.reshape((X_test.shape[0], 28, 28, 1))
# convert from int to float
X_train = X_train.astype('float32')
X_test = X_test.astype('float32')
# define data preparation
datagen = ImageDataGenerator(rotation_range=90)
# configure batch size and retrieve one batch of images
for X_batch, y_batch in datagen.flow(X_train, y_train, batch_size=9, shuffle=False):
    # create a grid of 3x3 images
    fig, ax = plt.subplots(3, 3, sharex=True, sharey=True, figsize=(4,4))
    for i in range(3):
        for j in range(3):
            ax[i][j].imshow(X_batch[i*3+j].reshape(28,28), cmap="gray")
    # show the plot
    plt.show()
    break
```

Running the example, you can see that images have been rotated left and right up to a limit of 90 degrees. This is not helpful on this problem because the MNIST digits have a normalized orientation, but this transform might be of help when learning from photographs where the objects may have different orientations.

Random rotations of MNIST images
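Conceptually, a random rotation picks an angle uniformly from the allowed range and resamples the image on a rotated grid. The NumPy sketch below illustrates that idea under simplifying assumptions: it uses nearest-neighbour sampling and fills pixels that rotate in from outside the frame with zeros, whereas Keras interpolates between pixels and, by default, fills with the nearest edge pixel. `rotate_nearest` and `random_rotation` are hypothetical helper names, not part of Keras.

```python
import numpy as np

def rotate_nearest(image, degrees, fill=0.0):
    """Rotate a 2D image about its center; positive angles turn it counter-clockwise."""
    h, w = image.shape
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    t = np.deg2rad(degrees)
    rows, cols = np.indices((h, w))
    x, y = cols - cx, rows - cy
    # For every output pixel, find the source pixel it came from (inverse mapping)
    src_c = np.rint(np.cos(t) * x - np.sin(t) * y + cx).astype(int)
    src_r = np.rint(np.sin(t) * x + np.cos(t) * y + cy).astype(int)
    inside = (src_r >= 0) & (src_r < h) & (src_c >= 0) & (src_c < w)
    out = np.full((h, w), fill, dtype=float)
    out[inside] = image[src_r[inside], src_c[inside]]
    return out

def random_rotation(image, max_degrees, rng):
    """Rotate by an angle drawn uniformly from [-max_degrees, max_degrees]."""
    return rotate_nearest(image, rng.uniform(-max_degrees, max_degrees))
```

For a single 28x28 image array `img`, `random_rotation(img, 90, np.random.default_rng(0))` plays the role of `rotation_range=90` in the example above.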

Random Shifts

Objects in your images may not be centered in the frame. They may be off-center in a variety of different ways.

You can train your deep learning network to expect and correctly handle off-center objects by artificially creating shifted versions of your training data. Keras supports separate horizontal and vertical random shifting of training data through the width_shift_range and height_shift_range arguments. When these are floats below 1, as in the example below, they are interpreted as a fraction of the total image width or height, so a value of 0.2 allows shifts of up to 20%.

```python
# Random Shifts
from tensorflow.keras.datasets import mnist
from tensorflow.keras.preprocessing.image import ImageDataGenerator
import matplotlib.pyplot as plt
# load data
(X_train, y_train), (X_test, y_test) = mnist.load_data()
# reshape to be [samples][height][width][channels]
X_train = X_train.reshape((X_train.shape[0], 28, 28, 1))
X_test = X_test.reshape((X_test.shape[0], 28, 28, 1))
# convert from int to float
X_train = X_train.astype('float32')
X_test = X_test.astype('float32')
# define data preparation
shift = 0.2
datagen = ImageDataGenerator(width_shift_range=shift, height_shift_range=shift)
# configure batch size and retrieve one batch of images
for X_batch, y_batch in datagen.flow(X_train, y_train, batch_size=9, shuffle=False):
    # create a grid of 3x3 images
    fig, ax = plt.subplots(3, 3, sharex=True, sharey=True, figsize=(4,4))
    for i in range(3):
        for j in range(3):
            ax[i][j].imshow(X_batch[i*3+j].reshape(28,28), cmap="gray")
    # show the plot
    plt.show()
    break
```

Running this example creates shifted versions of the digits. Again, this is not required for MNIST as the handwritten digits are already centered, but you can see how this might be useful on more complex problem domains.

Randomly shifted MNIST images
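A random shift can be sketched the same way: draw a vertical and a horizontal offset, treating the shift range as a fraction of the image height and width, then move the pixels and fill the exposed border. This NumPy version is a simplified illustration under stated assumptions, not the Keras implementation: it rounds offsets to whole pixels and fills with zeros, and `shift_image` and `random_shift` are hypothetical names.

```python
import numpy as np

def shift_image(image, dy, dx, fill=0.0):
    """Move a 2D image down by dy and right by dx pixels (negative values move up/left)."""
    h, w = image.shape
    out = np.full((h, w), fill, dtype=float)
    if abs(dy) >= h or abs(dx) >= w:
        return out  # the whole image moved out of frame
    # Copy the overlapping region; everything else keeps the fill value
    out[max(dy, 0):h + min(dy, 0), max(dx, 0):w + min(dx, 0)] = \
        image[max(-dy, 0):h + min(-dy, 0), max(-dx, 0):w + min(-dx, 0)]
    return out

def random_shift(image, shift_range, rng):
    """Shift by up to shift_range * height vertically and shift_range * width horizontally."""
    h, w = image.shape
    dy = int(round(rng.uniform(-shift_range, shift_range) * h))
    dx = int(round(rng.uniform(-shift_range, shift_range) * w))
    return shift_image(image, dy, dx)
```

With `shift_range=0.2` on a 28x28 digit, each offset is at most about 6 pixels in either direction.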

Random Flips

Another augmentation to your image data that can improve performance on large and complex problems is to create random flips of images in your training data.

Keras supports random flipping along both the vertical and horizontal axes using the vertical_flip and horizontal_flip arguments.

```python
# Random Flips
from tensorflow.keras.datasets import mnist
from tensorflow.keras.preprocessing.image import ImageDataGenerator
import matplotlib.pyplot as plt
# load data
(X_train, y_train), (X_test, y_test) = mnist.load_data()
# reshape to be [samples][height][width][channels]
X_train = X_train.reshape((X_train.shape[0], 28, 28, 1))
X_test = X_test.reshape((X_test.shape[0], 28, 28, 1))
# convert from int to float
X_train = X_train.astype('float32')
X_test = X_test.astype('float32')
# define data preparation
datagen = ImageDataGenerator(horizontal_flip=True, vertical_flip=True)
# configure batch size and retrieve one batch of images
for X_batch, y_batch in datagen.flow(X_train, y_train, batch_size=9, shuffle=False):
    # create a grid of 3x3 images
    fig, ax = plt.subplots(3, 3, sharex=True, sharey=True, figsize=(4,4))
    for i in range(3):
        for j in range(3):
            ax[i][j].imshow(X_batch[i*3+j].reshape(28,28), cmap="gray")
    # show the plot
    plt.show()
    break
```

Running this example, you can see flipped digits. Flipping digits is not useful here because they will always have the correct left and right orientation, but it may be useful for problems with photographs of objects in a scene that can appear in varied orientations.

Randomly flipped MNIST images
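A random flip is the simplest of these transforms. The NumPy sketch below flips a 2D image left-right and/or up-down, each with an assumed probability of 0.5; `random_flip` is a hypothetical helper, not a Keras function.

```python
import numpy as np

def random_flip(image, rng, horizontal=True, vertical=True):
    """Flip a 2D image left-right and/or up-down, each with probability 0.5."""
    if horizontal and rng.random() < 0.5:
        image = image[:, ::-1]  # reverse the columns: left-right flip
    if vertical and rng.random() < 0.5:
        image = image[::-1, :]  # reverse the rows: up-down flip
    return image
```

Applied per image, for example `np.stack([random_flip(im, rng) for im in batch])`, each sample in a batch gets its own independent flips.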

Saving Augmented Images to File

The data preparation and augmentation are performed just in time by Keras.

This is efficient in terms of memory, but you may require the exact images used during training. For example, perhaps you would like to use them with a different software package later or only generate them once and use them on multiple different deep learning models or configurations.

Keras allows you to save the images generated during training. The directory, filename prefix, and image file type can be specified to the flow() function before training. Then, during training, the generated images will be written to files in that directory.

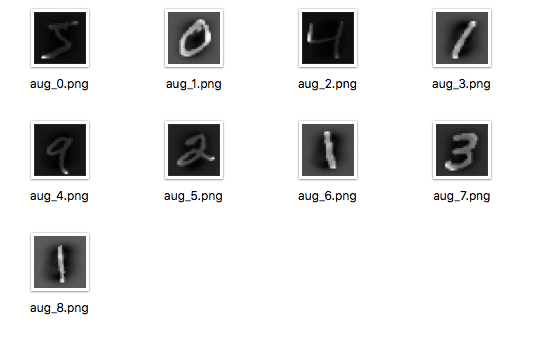

The example below demonstrates this and writes 9 images to an "images" subdirectory with the prefix "aug" and the file type of PNG.

```python
# Save augmented images to file
import os
from tensorflow.keras.datasets import mnist
from tensorflow.keras.preprocessing.image import ImageDataGenerator
import matplotlib.pyplot as plt
# load data
(X_train, y_train), (X_test, y_test) = mnist.load_data()
# reshape to be [samples][height][width][channels]
X_train = X_train.reshape((X_train.shape[0], 28, 28, 1))
X_test = X_test.reshape((X_test.shape[0], 28, 28, 1))
# convert from int to float
X_train = X_train.astype('float32')
X_test = X_test.astype('float32')
# define data preparation
datagen = ImageDataGenerator(horizontal_flip=True, vertical_flip=True)
# create the output directory first in case it does not exist yet
os.makedirs('images', exist_ok=True)
# configure batch size and retrieve one batch of images
for X_batch, y_batch in datagen.flow(X_train, y_train, batch_size=9, shuffle=False, save_to_dir='images', save_prefix='aug', save_format='png'):
    # create a grid of 3x3 images
    fig, ax = plt.subplots(3, 3, sharex=True, sharey=True, figsize=(4,4))
    for i in range(3):
        for j in range(3):
            ax[i][j].imshow(X_batch[i*3+j].reshape(28,28), cmap="gray")
    # show the plot
    plt.show()
    break
```

Running the example, you can see that images are only written when they are generated.

Augmented MNIST images saved to file
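If the goal is to generate augmented data once and reuse it across several models, an alternative to writing one PNG per image is to cache the augmented arrays in a single compressed NumPy file. The sketch below is a swapped-in approach, not the Keras save_to_dir mechanism shown above; `cache_augmented` and `flip_lr` are hypothetical names, the transform is a simple random left-right flip chosen for illustration, and `path` is assumed to end in `.npz`.

```python
import numpy as np

def flip_lr(image, rng):
    """Example transform: flip left-right with probability 0.5."""
    return image[:, ::-1] if rng.random() < 0.5 else image

def cache_augmented(X, y, transform, path, copies=1, seed=0):
    """Apply `transform` to every image `copies` times and save everything to one .npz file."""
    rng = np.random.default_rng(seed)
    X_aug = np.stack([transform(img, rng) for _ in range(copies) for img in X])
    y_aug = np.concatenate([y] * copies)  # labels repeat in the same order as the images
    np.savez_compressed(path, X=X_aug, y=y_aug)
    return path

# Later, possibly in another script:
# with np.load("augmented.npz") as data:
#     X_aug, y_aug = data["X"], data["y"]
```

Every model trained from the cached file then sees exactly the same augmented samples, which makes comparisons between configurations more controlled.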

Tips for Augmenting Image Data with Keras

Image data is unique in that you can review the data and transformed copies of the data and quickly get an idea of how the model may perceive it.

Below are some tips for getting the most from image data preparation and augmentation for deep learning.

- Review Dataset. Take some time to review your dataset in great detail. Look at the images. Take note of image preparation and augmentations that might benefit the training process of your model, such as the need to handle different shifts, rotations, or flips of objects in the scene.

- Review Augmentations. Review sample images after the augmentation has been performed. It is one thing to intellectually know what image transforms you are using; it is a very different thing to look at examples. Review images both with the individual augmentations you are using and with the full set of augmentations you plan to use. You may see ways to simplify or further enhance your model training process.

- Evaluate a Suite of Transforms. Try more than one image data preparation and augmentation scheme. Often you can be surprised by the results of a data preparation scheme you did not think would be beneficial.

Summary

In this post, you discovered image data preparation and augmentation.

You discovered a range of techniques you can use easily in Python with Keras for deep learning models. You learned about:

- The ImageDataGenerator API in Keras for generating transformed images just in time

- Sample-wise and feature-wise pixel standardization

- The ZCA whitening transform

- Random rotations, shifts, and flips of images

- How to save transformed images to file for later reuse

Do you have any questions about image data augmentation or this post? Ask your questions in the comments, and I will do my best to answer.