Think about the booming chords from a pipe organ echoing by the cavernous sanctuary of an enormous, stone cathedral.

The sound a cathedral-goer will hear is affected by many elements, together with the situation of the organ, the place the listener is standing, whether or not any columns, pews, or different obstacles stand between them, what the partitions are fabricated from, the places of home windows or doorways, and so on. Listening to a sound can assist somebody envision their surroundings.

Researchers at MIT and the MIT-IBM Watson AI Lab are exploring the usage of spatial acoustic info to assist machines higher envision their environments, too. They developed a machine-learning mannequin that may seize how any sound in a room will propagate by the house, enabling the mannequin to simulate what a listener would hear at completely different places.

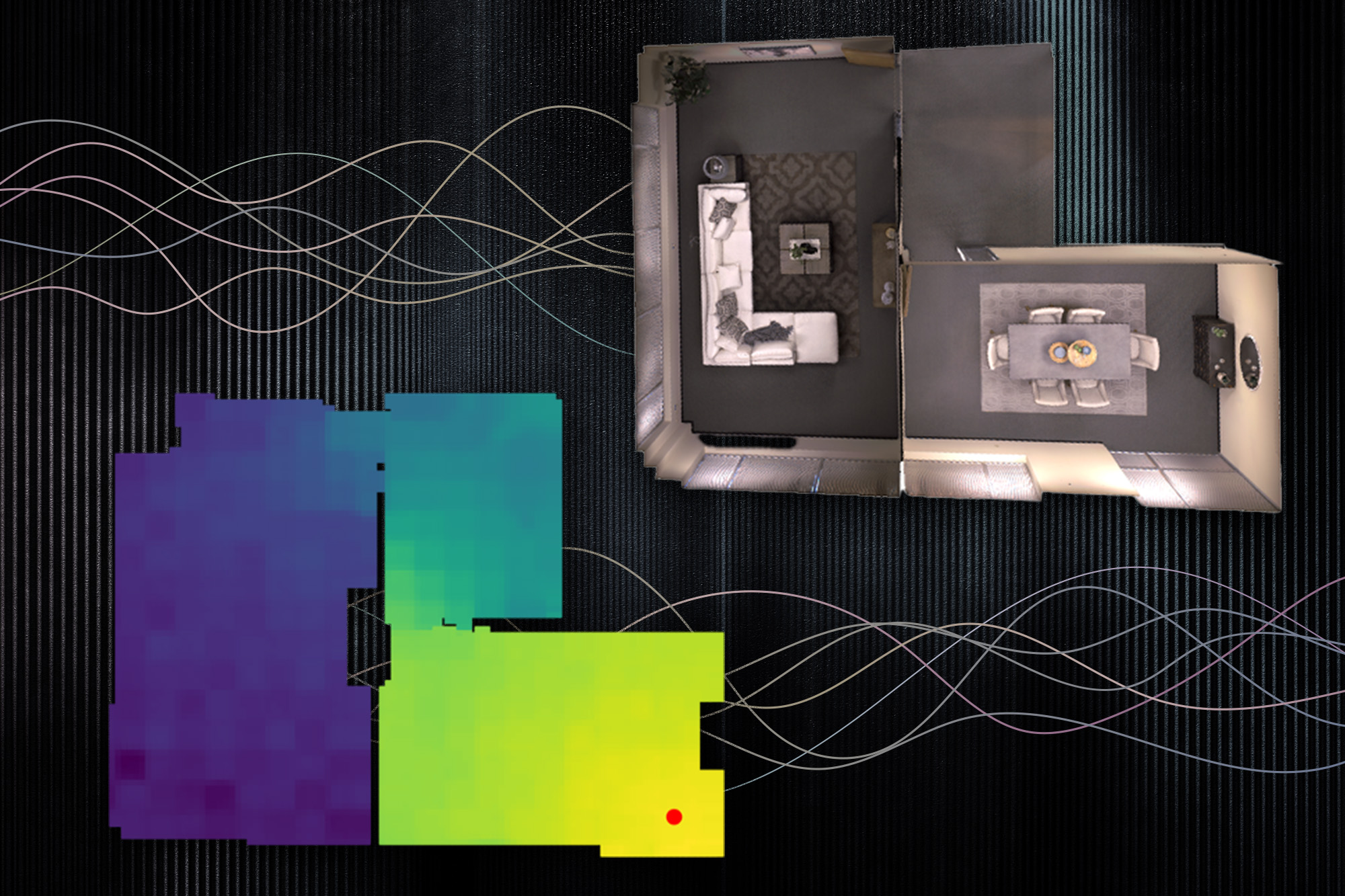

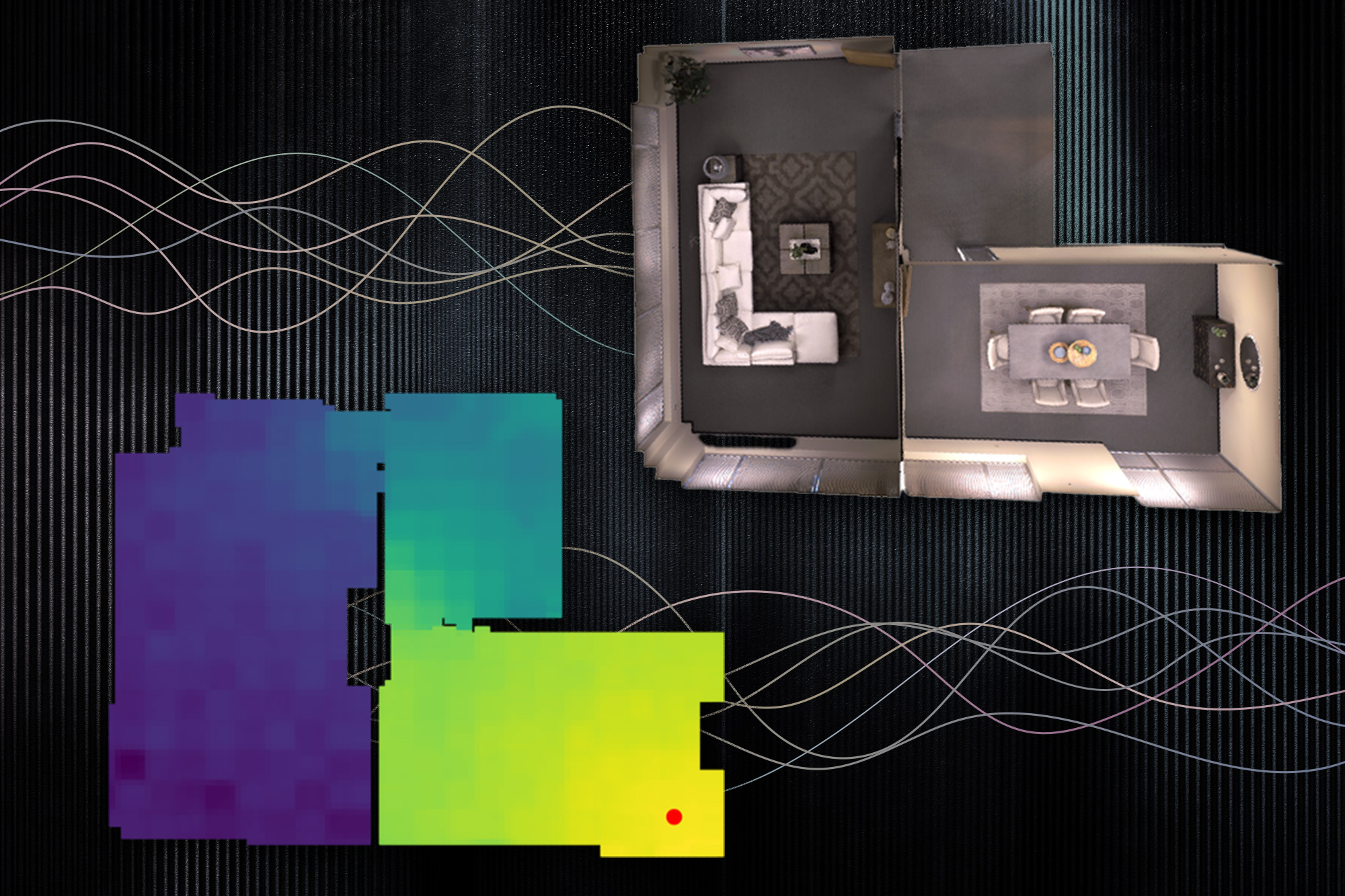

By precisely modeling the acoustics of a scene, the system can be taught the underlying 3D geometry of a room from sound recordings. The researchers can use the acoustic info their system captures to construct correct visible renderings of a room, equally to how people use sound when estimating the properties of their bodily surroundings.

Along with its potential purposes in digital and augmented actuality, this system may assist artificial-intelligence brokers develop higher understandings of the world round them. As an illustration, by modeling the acoustic properties of the sound in its surroundings, an underwater exploration robotic may sense issues which might be farther away than it may with imaginative and prescient alone, says Yilun Du, a grad pupil within the Division of Electrical Engineering and Laptop Science (EECS) and co-author of a paper describing the mannequin.

“Most researchers have solely targeted on modeling imaginative and prescient up to now. However as people, we have now multimodal notion. Not solely is imaginative and prescient vital, sound can be vital. I believe this work opens up an thrilling analysis route on higher using sound to mannequin the world,” Du says.

Becoming a member of Du on the paper are lead writer Andrew Luo, a grad pupil at Carnegie Mellon College (CMU); Michael J. Tarr, the Kavčić-Moura Professor of Cognitive and Mind Science at CMU; and senior authors Joshua B. Tenenbaum, professor in MIT’s Division of Mind and Cognitive Sciences and a member of the Laptop Science and Synthetic Intelligence Laboratory (CSAIL); Antonio Torralba, the Delta Electronics Professor of Electrical Engineering and Laptop Science and a member of CSAIL; and Chuang Gan, a principal analysis employees member on the MIT-IBM Watson AI Lab. The analysis can be offered on the Convention on Neural Info Processing Techniques.

Sound and imaginative and prescient

In laptop imaginative and prescient analysis, a kind of machine-learning mannequin referred to as an implicit neural illustration mannequin has been used to generate easy, steady reconstructions of 3D scenes from pictures. These fashions make the most of neural networks, which comprise layers of interconnected nodes, or neurons, that course of knowledge to finish a activity.

The MIT researchers employed the identical kind of mannequin to seize how sound travels constantly by a scene.

However they discovered that imaginative and prescient fashions profit from a property referred to as photometric consistency which doesn’t apply to sound. If one appears to be like on the identical object from two completely different places, the item appears to be like roughly the identical. However with sound, change places and the sound one hears may very well be utterly completely different as a consequence of obstacles, distance, and so on. This makes predicting audio very tough.

The researchers overcame this downside by incorporating two properties of acoustics into their mannequin: the reciprocal nature of sound and the affect of native geometric options.

Sound is reciprocal, which implies that if the supply of a sound and a listener swap positions, what the particular person hears is unchanged. Moreover, what one hears in a specific space is closely influenced by native options, similar to an impediment between the listener and the supply of the sound.

To include these two elements into their mannequin, referred to as a neural acoustic area (NAF), they increase the neural community with a grid that captures objects and architectural options within the scene, like doorways or partitions. The mannequin randomly samples factors on that grid to be taught the options at particular places.

“When you think about standing close to a doorway, what most strongly impacts what you hear is the presence of that doorway, not essentially geometric options far-off from you on the opposite aspect of the room. We discovered this info permits higher generalization than a easy totally linked community,” Luo says.

From predicting sounds to visualizing scenes

Researchers can feed the NAF visible details about a scene and some spectrograms that present what a bit of audio would sound like when the emitter and listener are positioned at goal places across the room. Then the mannequin predicts what that audio would sound like if the listener strikes to any level within the scene.

The NAF outputs an impulse response, which captures how a sound ought to change because it propagates by the scene. The researchers then apply this impulse response to completely different sounds to listen to how these sounds ought to change as an individual walks by a room.

As an illustration, if a track is taking part in from a speaker within the middle of a room, their mannequin would present how that sound will get louder as an individual approaches the speaker after which turns into muffled as they stroll out into an adjoining hallway.

When the researchers in contrast their method to different strategies that mannequin acoustic info, it generated extra correct sound fashions in each case. And since it discovered native geometric info, their mannequin was capable of generalize to new places in a scene a lot better than different strategies.

Furthermore, they discovered that making use of the acoustic info their mannequin learns to a pc vison mannequin can result in a greater visible reconstruction of the scene.

“While you solely have a sparse set of views, utilizing these acoustic options allows you to seize boundaries extra sharply, for example. And perhaps it is because to precisely render the acoustics of a scene, you must seize the underlying 3D geometry of that scene,” Du says.

The researchers plan to proceed enhancing the mannequin so it will probably generalize to model new scenes. Additionally they need to apply this system to extra complicated impulse responses and bigger scenes, similar to complete buildings or perhaps a city or metropolis.

“This new method may open up new alternatives to create a multimodal immersive expertise within the metaverse utility,” provides Gan.

“My group has finished numerous work on utilizing machine-learning strategies to speed up acoustic simulation or mannequin the acoustics of real-world scenes. This paper by Chuang Gan and his co-authors is clearly a significant step ahead on this route,” says Dinesh Manocha, the Paul Chrisman Iribe Professor of Laptop Science and Electrical and Laptop Engineering on the College of Maryland, who was not concerned with this work. “Specifically, this paper introduces a pleasant implicit illustration that may seize how sound can propagate in real-world scenes by modeling it utilizing a linear time-invariant system. This work can have many purposes in AR/VR in addition to real-world scene understanding.”

This work is supported, partially, by the MIT-IBM Watson AI Lab and the Tianqiao and Chrissy Chen Institute.