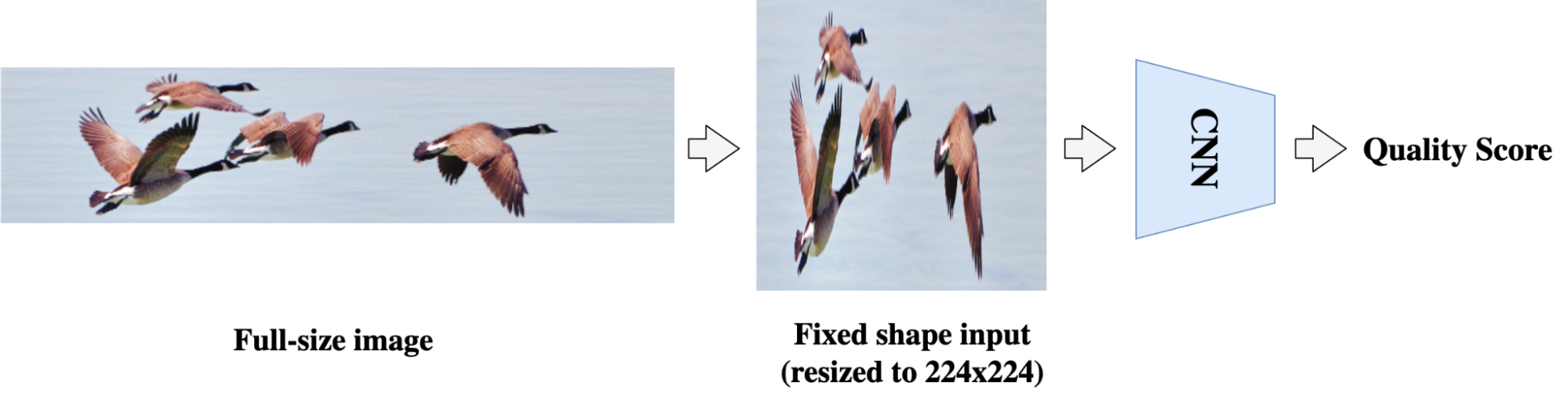

Understanding the aesthetic and technical high quality of pictures is vital for offering a greater person visible expertise. Picture high quality evaluation (IQA) makes use of fashions to construct a bridge between a picture and a person’s subjective notion of its high quality. Within the deep studying period, many IQA approaches, equivalent to NIMA, have achieved success by leveraging the facility of convolutional neural networks (CNNs). Nevertheless, CNN-based IQA fashions are sometimes constrained by the fixed-size enter requirement in batch coaching, i.e., the enter pictures must be resized or cropped to a hard and fast measurement form. This preprocessing is problematic for IQA as a result of pictures can have very completely different facet ratios and resolutions. Resizing and cropping can affect picture composition or introduce distortions, thus altering the standard of the picture.

|

| In CNN-based fashions, pictures must be resized or cropped to a hard and fast form for batch coaching. Nevertheless, such preprocessing can alter the picture facet ratio and composition, thus impacting picture high quality. Authentic picture used below CC BY 2.0 license. |

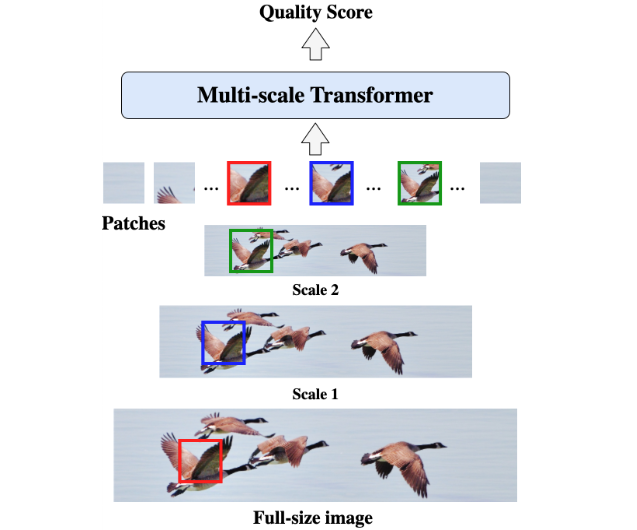

In “MUSIQ: Multi-scale Picture High quality Transformer”, revealed at ICCV 2021, we suggest a patch-based multi-scale picture high quality transformer (MUSIQ) to bypass the CNN constraints on fastened enter measurement and predict the picture high quality successfully on native-resolution pictures. The MUSIQ mannequin helps the processing of full-size picture inputs with various facet ratios and resolutions and permits multi-scale characteristic extraction to seize picture high quality at completely different granularities. To assist positional encoding within the multi-scale illustration, we suggest a novel hash-based 2D spatial embedding mixed with an embedding that captures the picture scaling. We apply MUSIQ on 4 large-scale IQA datasets, demonstrating constant state-of-the-art outcomes throughout three technical high quality datasets (PaQ-2-PiQ, KonIQ-10k, and SPAQ) and comparable efficiency to that of state-of-the-art fashions on the aesthetic high quality dataset AVA.

|

| The patch-based MUSIQ mannequin can course of the full-size picture and extract multi-scale options, which higher aligns with an individual’s typical visible response. |

Within the following determine, we present a pattern of pictures, their MUSIQ rating, and their imply opinion rating (MOS) from a number of human raters within the brackets. The vary of the rating is from 0 to 100, with 100 being the best perceived high quality. As we will see from the determine, MUSIQ predicts excessive scores for pictures with excessive aesthetic high quality and excessive technical high quality, and it predicts low scores for pictures that aren’t aesthetically pleasing (low aesthetic high quality) or that include seen distortions (low technical high quality).

| Predicted MUSIQ rating (and floor reality) on pictures from the KonIQ-10k dataset. High: MUSIQ predicts excessive scores for top of the range pictures. Center: MUSIQ predicts low scores for pictures with low aesthetic high quality, equivalent to pictures with poor composition or lighting. Backside: MUSIQ predicts low scores for pictures with low technical high quality, equivalent to pictures with seen distortion artifacts (e.g., blurry, noisy). |

The Multi-scale Picture High quality Transformer

MUSIQ tackles the problem of studying IQA on full-size pictures. In contrast to CNN-models which are usually constrained to fastened decision, MUSIQ can deal with inputs with arbitrary facet ratios and resolutions.

To perform this, we first make a multi-scale illustration of the enter picture, containing the native decision picture and its resized variants. To protect the picture composition, we keep its facet ratio throughout resizing. After acquiring the pyramid of pictures, we then partition the photographs at completely different scales into fixed-size patches which are fed into the mannequin.

|

| Illustration of the multi-scale picture illustration in MUSIQ. |

Since patches are from pictures of various resolutions, we have to successfully encode the multi-aspect-ratio multi-scale enter right into a sequence of tokens, capturing each the pixel, spatial, and scale info. To realize this, we design three encoding elements in MUSIQ, together with: 1) a patch encoding module to encode patches extracted from the multi-scale illustration; 2) a novel hash-based spatial embedding module to encode the 2D spatial place for every patch; and three) a learnable scale embedding to encode completely different scales. On this method, we will successfully encode the multi-scale enter as a sequence of tokens, serving because the enter to the Transformer encoder.

To foretell the ultimate picture high quality rating, we use the usual strategy of prepending an extra learnable “classification token” (CLS). The CLS token state on the output of the Transformer encoder serves as the ultimate picture illustration. We then add a completely related layer on high to foretell the IQS. The determine beneath supplies an outline of the MUSIQ mannequin.

Since MUSIQ solely modifications the enter encoding, it’s appropriate with any Transformer variants. To display the effectiveness of the proposed technique, in our experiments we use the basic Transformer with a comparatively light-weight setting in order that the mannequin measurement is corresponding to ResNet-50.

Benchmark and Analysis

To guage MUSIQ, we run experiments on a number of large-scale IQA datasets. On every dataset, we report the Spearman’s rank correlation coefficient (SRCC) and Pearson linear correlation coefficient (PLCC) between our mannequin prediction and the human evaluators’ imply opinion rating. SRCC and PLCC are correlation metrics starting from -1 to 1. Larger PLCC and SRCC means higher alignment between mannequin prediction and human analysis. The graph beneath exhibits that MUSIQ outperforms different strategies on PaQ-2-PiQ, KonIQ-10k, and SPAQ.

Notably, the PaQ-2-PiQ take a look at set is fully composed of enormous footage having no less than one dimension exceeding 640 pixels. That is very difficult for conventional deep studying approaches, which require resizing. MUSIQ can outperform earlier strategies by a big margin on the full-size take a look at set, which verifies its robustness and effectiveness.

It is usually price mentioning that earlier CNN-based strategies usually required sampling as many as 20 crops for every picture throughout testing. This sort of multi-crop ensemble is a technique to mitigate the fastened form constraint within the CNN fashions. However since every crop is barely a sub-view of the entire picture, the ensemble continues to be an approximate strategy. Furthermore, CNN-based strategies each add further inference price for each crop and, as a result of they pattern completely different crops, they will introduce randomness within the end result. In distinction, as a result of MUSIQ takes the full-size picture as enter, it may well instantly study one of the best aggregation of knowledge throughout the complete picture and it solely must run the inference as soon as.

To additional confirm that the MUSIQ mannequin captures completely different info at completely different scales, we visualize the eye weights on every picture at completely different scales.

|

| Consideration visualization from the output tokens to the multi-scale illustration, together with the unique decision picture and two proportionally resized pictures. Brighter areas point out larger consideration, which signifies that these areas are extra vital for the mannequin output. Pictures for illustration are taken from the AVA dataset. |

We observe that MUSIQ tends to concentrate on extra detailed areas within the full, high-resolution pictures and on extra international areas on the resized ones. For instance, for the flower photograph above, the mannequin’s consideration on the unique picture is specializing in the pedal particulars, and the eye shifts to the buds at decrease resolutions. This exhibits that the mannequin learns to seize picture high quality at completely different granularities.

Conclusion

We suggest a multi-scale picture high quality transformer (MUSIQ), which might deal with full-size picture enter with various resolutions and facet ratios. By reworking the enter picture to a multi-scale illustration with each international and native views, the mannequin can seize the picture high quality at completely different granularities. Though MUSIQ is designed for IQA, it may be utilized to different situations the place job labels are delicate to picture decision and facet ratio. The MUSIQ mannequin and checkpoints can be found at our GitHub repository.

Acknowledgements

This work is made doable by means of a collaboration spanning a number of groups throughout Google. We’d prefer to acknowledge contributions from Qifei Wang, Yilin Wang and Peyman Milanfar.